mirror of https://github.com/apache/activemq.git

[AMQ-7502] Remove leveldb

parent fc0999cc87

commit 52a2bd446a

|

|

@@ -16,14 +16,9 @@ activemq-unit-tests/KahaDB

|

||||||

activemq-unit-tests/broker

|

activemq-unit-tests/broker

|

||||||

activemq-unit-tests/derby.log

|

activemq-unit-tests/derby.log

|

||||||

activemq-unit-tests/derbyDb

|

activemq-unit-tests/derbyDb

|

||||||

activemq-unit-tests/LevelDB

|

|

||||||

activemq-unit-tests/networkedBroker

|

activemq-unit-tests/networkedBroker

|

||||||

activemq-unit-tests/shared

|

activemq-unit-tests/shared

|

||||||

activemq-data

|

activemq-data

|

||||||

activemq-leveldb-store/.cache

|

|

||||||

activemq-leveldb-store/.cache-main

|

|

||||||

activemq-leveldb-store/.cache-tests

|

|

||||||

activemq-leveldb-store/.tmpBin

|

|

||||||

activemq-runtime-config/src/main/resources/activemq.xsd

|

activemq-runtime-config/src/main/resources/activemq.xsd

|

||||||

activemq-amqp/amqp-trace.txt

|

activemq-amqp/amqp-trace.txt

|

||||||

data/

|

data/

|

||||||

|

|

|

||||||

|

|

@@ -59,10 +59,6 @@

|

||||||

<groupId>${project.groupId}</groupId>

|

<groupId>${project.groupId}</groupId>

|

||||||

<artifactId>activemq-jdbc-store</artifactId>

|

<artifactId>activemq-jdbc-store</artifactId>

|

||||||

</dependency>

|

</dependency>

|

||||||

<dependency>

|

|

||||||

<groupId>${project.groupId}</groupId>

|

|

||||||

<artifactId>activemq-leveldb-store</artifactId>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.geronimo.specs</groupId>

|

<groupId>org.apache.geronimo.specs</groupId>

|

||||||

<artifactId>geronimo-annotation_1.0_spec</artifactId>

|

<artifactId>geronimo-annotation_1.0_spec</artifactId>

|

||||||

|

|

@@ -109,7 +105,6 @@

|

||||||

<include>${project.groupId}:activemq-mqtt</include>

|

<include>${project.groupId}:activemq-mqtt</include>

|

||||||

<include>${project.groupId}:activemq-stomp</include>

|

<include>${project.groupId}:activemq-stomp</include>

|

||||||

<include>${project.groupId}:activemq-kahadb-store</include>

|

<include>${project.groupId}:activemq-kahadb-store</include>

|

||||||

<include>${project.groupId}:activemq-leveldb-store</include>

|

|

||||||

<include>${project.groupId}:activemq-jdbc-store</include>

|

<include>${project.groupId}:activemq-jdbc-store</include>

|

||||||

<include>org.apache.activemq.protobuf:activemq-protobuf</include>

|

<include>org.apache.activemq.protobuf:activemq-protobuf</include>

|

||||||

<include>org.fusesource.hawtbuf:hawtbuf</include>

|

<include>org.fusesource.hawtbuf:hawtbuf</include>

|

||||||

|

|

@@ -314,13 +309,6 @@

|

||||||

<classifier>sources</classifier>

|

<classifier>sources</classifier>

|

||||||

<optional>true</optional>

|

<optional>true</optional>

|

||||||

</dependency>

|

</dependency>

|

||||||

<dependency>

|

|

||||||

<groupId>${project.groupId}</groupId>

|

|

||||||

<artifactId>activemq-leveldb-store</artifactId>

|

|

||||||

<version>${project.version}</version>

|

|

||||||

<classifier>sources</classifier>

|

|

||||||

<optional>true</optional>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.activemq.protobuf</groupId>

|

<groupId>org.apache.activemq.protobuf</groupId>

|

||||||

<artifactId>activemq-protobuf</artifactId>

|

<artifactId>activemq-protobuf</artifactId>

|

||||||

|

|

|

||||||

|

|

@@ -114,11 +114,6 @@

|

||||||

<artifactId>spring-context</artifactId>

|

<artifactId>spring-context</artifactId>

|

||||||

<scope>test</scope>

|

<scope>test</scope>

|

||||||

</dependency>

|

</dependency>

|

||||||

<dependency>

|

|

||||||

<groupId>org.apache.activemq</groupId>

|

|

||||||

<artifactId>activemq-leveldb-store</artifactId>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>org.apache.activemq.tooling</groupId>

|

<groupId>org.apache.activemq.tooling</groupId>

|

||||||

<artifactId>activemq-junit</artifactId>

|

<artifactId>activemq-junit</artifactId>

|

||||||

|

|

|

||||||

|

|

@@ -90,6 +90,17 @@

|

||||||

<artifactId>activemq-jaas</artifactId>

|

<artifactId>activemq-jaas</artifactId>

|

||||||

<scope>test</scope>

|

<scope>test</scope>

|

||||||

</dependency>

|

</dependency>

|

||||||

|

<dependency>

|

||||||

|

<groupId>org.apache.activemq</groupId>

|

||||||

|

<artifactId>activemq-kahadb-store</artifactId>

|

||||||

|

<scope>test</scope>

|

||||||

|

</dependency>

|

||||||

|

<dependency>

|

||||||

|

<groupId>org.apache.commons</groupId>

|

||||||

|

<artifactId>commons-lang3</artifactId>

|

||||||

|

<version>${commons-lang-version}</version>

|

||||||

|

<scope>test</scope>

|

||||||

|

</dependency>

|

||||||

<dependency>

|

<dependency>

|

||||||

<groupId>junit</groupId>

|

<groupId>junit</groupId>

|

||||||

<artifactId>junit</artifactId>

|

<artifactId>junit</artifactId>

|

||||||

|

|

|

||||||

|

|

@@ -1,122 +0,0 @@

|

||||||

/**

|

|

||||||

* Licensed to the Apache Software Foundation (ASF) under one or more

|

|

||||||

* contributor license agreements. See the NOTICE file distributed with

|

|

||||||

* this work for additional information regarding copyright ownership.

|

|

||||||

* The ASF licenses this file to You under the Apache License, Version 2.0

|

|

||||||

* (the "License"); you may not use this file except in compliance with

|

|

||||||

* the License. You may obtain a copy of the License at

|

|

||||||

*

|

|

||||||

* http://www.apache.org/licenses/LICENSE-2.0

|

|

||||||

*

|

|

||||||

* Unless required by applicable law or agreed to in writing, software

|

|

||||||

* distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

* See the License for the specific language governing permissions and

|

|

||||||

* limitations under the License.

|

|

||||||

*/

|

|

||||||

package org.apache.activemq.bugs;

|

|

||||||

|

|

||||||

import static org.junit.Assert.assertNotNull;

|

|

||||||

|

|

||||||

import java.io.File;

|

|

||||||

import java.io.IOException;

|

|

||||||

import java.net.ServerSocket;

|

|

||||||

|

|

||||||

import javax.jms.Connection;

|

|

||||||

import javax.jms.DeliveryMode;

|

|

||||||

import javax.jms.JMSException;

|

|

||||||

import javax.jms.MessageConsumer;

|

|

||||||

import javax.jms.MessageProducer;

|

|

||||||

import javax.jms.Queue;

|

|

||||||

import javax.jms.Session;

|

|

||||||

import javax.net.ServerSocketFactory;

|

|

||||||

|

|

||||||

import org.apache.activemq.ActiveMQConnectionFactory;

|

|

||||||

import org.apache.activemq.broker.BrokerService;

|

|

||||||

import org.apache.activemq.broker.TransportConnector;

|

|

||||||

import org.apache.activemq.leveldb.LevelDBStore;

|

|

||||||

import org.junit.After;

|

|

||||||

import org.junit.Before;

|

|

||||||

import org.junit.Rule;

|

|

||||||

import org.junit.Test;

|

|

||||||

import org.junit.rules.TestName;

|

|

||||||

|

|

||||||

public class AMQ5816Test {

|

|

||||||

|

|

||||||

private static BrokerService brokerService;

|

|

||||||

|

|

||||||

@Rule public TestName name = new TestName();

|

|

||||||

|

|

||||||

private File dataDirFile;

|

|

||||||

private String connectionURI;

|

|

||||||

|

|

||||||

@Before

|

|

||||||

public void setUp() throws Exception {

|

|

||||||

|

|

||||||

dataDirFile = new File("target/" + name.getMethodName());

|

|

||||||

|

|

||||||

brokerService = new BrokerService();

|

|

||||||

brokerService.setBrokerName("LevelDBBroker");

|

|

||||||

brokerService.setPersistent(true);

|

|

||||||

brokerService.setUseJmx(false);

|

|

||||||

brokerService.setAdvisorySupport(false);

|

|

||||||

brokerService.setDeleteAllMessagesOnStartup(true);

|

|

||||||

brokerService.setDataDirectoryFile(dataDirFile);

|

|

||||||

|

|

||||||

TransportConnector connector = brokerService.addConnector("http://0.0.0.0:" + getFreePort());

|

|

||||||

|

|

||||||

LevelDBStore persistenceFactory = new LevelDBStore();

|

|

||||||

persistenceFactory.setDirectory(dataDirFile);

|

|

||||||

brokerService.setPersistenceAdapter(persistenceFactory);

|

|

||||||

brokerService.start();

|

|

||||||

brokerService.waitUntilStarted();

|

|

||||||

|

|

||||||

connectionURI = connector.getPublishableConnectString();

|

|

||||||

}

|

|

||||||

|

|

||||||

/**

|

|

||||||

* @throws java.lang.Exception

|

|

||||||

*/

|

|

||||||

@After

|

|

||||||

public void tearDown() throws Exception {

|

|

||||||

brokerService.stop();

|

|

||||||

brokerService.waitUntilStopped();

|

|

||||||

}

|

|

||||||

|

|

||||||

@Test

|

|

||||||

public void testSendPersistentMessage() throws JMSException {

|

|

||||||

ActiveMQConnectionFactory factory = new ActiveMQConnectionFactory(connectionURI);

|

|

||||||

Connection connection = factory.createConnection();

|

|

||||||

connection.start();

|

|

||||||

|

|

||||||

Session session = connection.createSession(false, Session.AUTO_ACKNOWLEDGE);

|

|

||||||

Queue queue = session.createQueue(name.getMethodName());

|

|

||||||

MessageProducer producer = session.createProducer(queue);

|

|

||||||

MessageConsumer consumer = session.createConsumer(queue);

|

|

||||||

|

|

||||||

producer.setDeliveryMode(DeliveryMode.PERSISTENT);

|

|

||||||

producer.send(session.createTextMessage());

|

|

||||||

|

|

||||||

assertNotNull(consumer.receive(5000));

|

|

||||||

}

|

|

||||||

|

|

||||||

protected int getFreePort() {

|

|

||||||

int port = 8161;

|

|

||||||

ServerSocket ss = null;

|

|

||||||

|

|

||||||

try {

|

|

||||||

ss = ServerSocketFactory.getDefault().createServerSocket(0);

|

|

||||||

port = ss.getLocalPort();

|

|

||||||

} catch (IOException e) { // ignore

|

|

||||||

} finally {

|

|

||||||

try {

|

|

||||||

if (ss != null ) {

|

|

||||||

ss.close();

|

|

||||||

}

|

|

||||||

} catch (IOException e) { // ignore

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

||||||

return port;

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

@@ -1,97 +0,0 @@

|

||||||

<!--

|

|

||||||

|

|

||||||

Licensed to the Apache Software Foundation (ASF) under one or more

|

|

||||||

contributor license agreements. See the NOTICE file distributed with

|

|

||||||

this work for additional information regarding copyright ownership.

|

|

||||||

The ASF licenses this file to You under the Apache License, Version 2.0

|

|

||||||

(the "License"); you may not use this file except in compliance with

|

|

||||||

the License. You may obtain a copy of the License at

|

|

||||||

|

|

||||||

http://www.apache.org/licenses/LICENSE-2.0

|

|

||||||

|

|

||||||

Unless required by applicable law or agreed to in writing, software

|

|

||||||

distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

See the License for the specific language governing permissions and

|

|

||||||

limitations under the License.

|

|

||||||

|

|

||||||

-->

|

|

||||||

|

|

||||||

<beans

|

|

||||||

xmlns="http://www.springframework.org/schema/beans"

|

|

||||||

xmlns:amq="http://activemq.apache.org/schema/core"

|

|

||||||

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

|

|

||||||

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-2.0.xsd

|

|

||||||

http://activemq.apache.org/schema/core http://activemq.apache.org/schema/core/activemq-core.xsd">

|

|

||||||

|

|

||||||

|

|

||||||

<broker xmlns="http://activemq.apache.org/schema/core"

|

|

||||||

brokerName="${broker-name}"

|

|

||||||

dataDirectory="${data}"

|

|

||||||

start="false">

|

|

||||||

|

|

||||||

<destinationPolicy>

|

|

||||||

<policyMap>

|

|

||||||

<policyEntries>

|

|

||||||

<policyEntry topic=">" producerFlowControl="true">

|

|

||||||

<pendingMessageLimitStrategy>

|

|

||||||

<constantPendingMessageLimitStrategy limit="1000"/>

|

|

||||||

</pendingMessageLimitStrategy>

|

|

||||||

</policyEntry>

|

|

||||||

<policyEntry queue=">" producerFlowControl="true" memoryLimit="1mb">

|

|

||||||

</policyEntry>

|

|

||||||

</policyEntries>

|

|

||||||

</policyMap>

|

|

||||||

</destinationPolicy>

|

|

||||||

|

|

||||||

<managementContext>

|

|

||||||

<managementContext createConnector="false"/>

|

|

||||||

</managementContext>

|

|

||||||

|

|

||||||

<persistenceAdapter>

|

|

||||||

<levelDB directory="${data}/leveldb"/>

|

|

||||||

</persistenceAdapter>

|

|

||||||

|

|

||||||

<plugins>

|

|

||||||

<jaasAuthenticationPlugin configuration="karaf" />

|

|

||||||

<authorizationPlugin>

|

|

||||||

<map>

|

|

||||||

<authorizationMap groupClass="org.apache.karaf.jaas.boot.principal.RolePrincipal">

|

|

||||||

<authorizationEntries>

|

|

||||||

<authorizationEntry queue=">" read="admin" write="admin" admin="admin"/>

|

|

||||||

<authorizationEntry topic=">" read="admin" write="admin" admin="admin"/>

|

|

||||||

<authorizationEntry topic="ActiveMQ.Advisory.>" read="admin" write="admin" admin="admin"/>

|

|

||||||

</authorizationEntries>

|

|

||||||

|

|

||||||

<tempDestinationAuthorizationEntry>

|

|

||||||

<tempDestinationAuthorizationEntry read="admin" write="admin" admin="admin"/>

|

|

||||||

</tempDestinationAuthorizationEntry>

|

|

||||||

</authorizationMap>

|

|

||||||

</map>

|

|

||||||

</authorizationPlugin>

|

|

||||||

</plugins>

|

|

||||||

|

|

||||||

<systemUsage>

|

|

||||||

<systemUsage>

|

|

||||||

<memoryUsage>

|

|

||||||

<memoryUsage limit="64 mb"/>

|

|

||||||

</memoryUsage>

|

|

||||||

<storeUsage>

|

|

||||||

<storeUsage limit="100 gb"/>

|

|

||||||

</storeUsage>

|

|

||||||

<tempUsage>

|

|

||||||

<tempUsage limit="50 gb"/>

|

|

||||||

</tempUsage>

|

|

||||||

</systemUsage>

|

|

||||||

</systemUsage>

|

|

||||||

|

|

||||||

<transportConnectors>

|

|

||||||

<transportConnector name="openwire" uri="tcp://0.0.0.0:61616?maximumConnections=1000"/>

|

|

||||||

<transportConnector name="http" uri="http://0.0.0.0:61626"/>

|

|

||||||

<transportConnector name="amqp" uri="amqp://0.0.0.0:61636?transport.transformer=jms"/>

|

|

||||||

<transportConnector name="ws" uri="ws://0.0.0.0:61646"/>

|

|

||||||

<transportConnector name="mqtt" uri="ws://0.0.0.0:61656"/>

|

|

||||||

</transportConnectors>

|

|

||||||

</broker>

|

|

||||||

|

|

||||||

</beans>

|

|

||||||

|

|

@@ -71,6 +71,5 @@

|

||||||

<bundle dependency="true">mvn:com.fasterxml.jackson.core/jackson-core/${jackson-version}</bundle>

|

<bundle dependency="true">mvn:com.fasterxml.jackson.core/jackson-core/${jackson-version}</bundle>

|

||||||

<bundle dependency="true">mvn:com.fasterxml.jackson.core/jackson-databind/${jackson-databind-version}</bundle>

|

<bundle dependency="true">mvn:com.fasterxml.jackson.core/jackson-databind/${jackson-databind-version}</bundle>

|

||||||

<bundle dependency="true">mvn:com.fasterxml.jackson.core/jackson-annotations/${jackson-version}</bundle>

|

<bundle dependency="true">mvn:com.fasterxml.jackson.core/jackson-annotations/${jackson-version}</bundle>

|

||||||

<bundle dependency="true">mvn:org.scala-lang/scala-library/${scala-version}</bundle>

|

|

||||||

</feature>

|

</feature>

|

||||||

</features>

|

</features>

|

||||||

|

|

|

||||||

Binary file not shown (before: 11 KiB).

|

|

@@ -1,639 +0,0 @@

|

||||||

<?xml version="1.0" encoding="UTF-8"?>

|

|

||||||

<!--

|

|

||||||

Licensed to the Apache Software Foundation (ASF) under one or more

|

|

||||||

contributor license agreements. See the NOTICE file distributed with

|

|

||||||

this work for additional information regarding copyright ownership.

|

|

||||||

The ASF licenses this file to You under the Apache License, Version 2.0

|

|

||||||

(the "License"); you may not use this file except in compliance with

|

|

||||||

the License. You may obtain a copy of the License at

|

|

||||||

|

|

||||||

http://www.apache.org/licenses/LICENSE-2.0

|

|

||||||

|

|

||||||

Unless required by applicable law or agreed to in writing, software

|

|

||||||

distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

See the License for the specific language governing permissions and

|

|

||||||

limitations under the License.

|

|

||||||

-->

|

|

||||||

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

|

|

||||||

|

|

||||||

<modelVersion>4.0.0</modelVersion>

|

|

||||||

|

|

||||||

<parent>

|

|

||||||

<groupId>org.apache.activemq</groupId>

|

|

||||||

<artifactId>activemq-parent</artifactId>

|

|

||||||

<version>5.17.0-SNAPSHOT</version>

|

|

||||||

</parent>

|

|

||||||

|

|

||||||

<artifactId>activemq-leveldb-store</artifactId>

|

|

||||||

<packaging>jar</packaging>

|

|

||||||

|

|

||||||

<name>ActiveMQ :: LevelDB Store</name>

|

|

||||||

<description>ActiveMQ LevelDB Store Implementation</description>

|

|

||||||

|

|

||||||

<dependencies>

|

|

||||||

|

|

||||||

<!-- for scala support -->

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.scala-lang</groupId>

|

|

||||||

<artifactId>scala-library</artifactId>

|

|

||||||

<version>${scala-version}</version>

|

|

||||||

<scope>compile</scope>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.apache.activemq</groupId>

|

|

||||||

<artifactId>activemq-broker</artifactId>

|

|

||||||

<scope>provided</scope>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.slf4j</groupId>

|

|

||||||

<artifactId>slf4j-api</artifactId>

|

|

||||||

<scope>compile</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.fusesource.hawtbuf</groupId>

|

|

||||||

<artifactId>hawtbuf-proto</artifactId>

|

|

||||||

<version>${hawtbuf-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.fusesource.hawtdispatch</groupId>

|

|

||||||

<artifactId>hawtdispatch-scala-2.11</artifactId>

|

|

||||||

<version>${hawtdispatch-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.iq80.leveldb</groupId>

|

|

||||||

<artifactId>leveldb-api</artifactId>

|

|

||||||

<version>${leveldb-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.iq80.leveldb</groupId>

|

|

||||||

<artifactId>leveldb</artifactId>

|

|

||||||

<version>${leveldb-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.fusesource.leveldbjni</groupId>

|

|

||||||

<artifactId>leveldbjni</artifactId>

|

|

||||||

<version>${leveldbjni-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>com.google.guava</groupId>

|

|

||||||

<artifactId>guava</artifactId>

|

|

||||||

<version>${guava-version}</version>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<!-- Lets not include the JNI libs for now so that we can harden the pure java driver more -->

|

|

||||||

<!--

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.fusesource.leveldbjni</groupId>

|

|

||||||

<artifactId>leveldbjni-osx</artifactId>

|

|

||||||

<version>${leveldbjni-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.fusesource.leveldbjni</groupId>

|

|

||||||

<artifactId>leveldbjni-linux32</artifactId>

|

|

||||||

<version>${leveldbjni-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.fusesource.leveldbjni</groupId>

|

|

||||||

<artifactId>leveldbjni-linux64</artifactId>

|

|

||||||

<version>${leveldbjni-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.fusesource.leveldbjni</groupId>

|

|

||||||

<artifactId>leveldbjni-win32</artifactId>

|

|

||||||

<version>${leveldbjni-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.fusesource.leveldbjni</groupId>

|

|

||||||

<artifactId>leveldbjni-win64</artifactId>

|

|

||||||

<version>${leveldbjni-version}</version>

|

|

||||||

</dependency>

|

|

||||||

-->

|

|

||||||

|

|

||||||

<!-- For Replication -->

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.fusesource.hawtdispatch</groupId>

|

|

||||||

<artifactId>hawtdispatch-transport</artifactId>

|

|

||||||

<version>${hawtdispatch-version}</version>

|

|

||||||

<scope>provided</scope>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.linkedin</groupId>

|

|

||||||

<artifactId>org.linkedin.zookeeper-impl</artifactId>

|

|

||||||

<version>${linkedin-zookeeper-version}</version>

|

|

||||||

<scope>provided</scope>

|

|

||||||

<exclusions>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>org.json</groupId>

|

|

||||||

<artifactId>json</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

</exclusions>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.linkedin</groupId>

|

|

||||||

<artifactId>org.linkedin.util-core</artifactId>

|

|

||||||

<version>${linkedin-zookeeper-version}</version>

|

|

||||||

<scope>provided</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.apache.zookeeper</groupId>

|

|

||||||

<artifactId>zookeeper</artifactId>

|

|

||||||

<version>${zookeeper-version}</version>

|

|

||||||

<scope>provided</scope>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.osgi</groupId>

|

|

||||||

<artifactId>osgi.core</artifactId>

|

|

||||||

<scope>provided</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.osgi</groupId>

|

|

||||||

<artifactId>osgi.cmpn</artifactId>

|

|

||||||

<scope>provided</scope>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<dependency>

|

|

||||||

<groupId>commons-io</groupId>

|

|

||||||

<artifactId>commons-io</artifactId>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<!-- For Optional Snappy Compression -->

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.xerial.snappy</groupId>

|

|

||||||

<artifactId>snappy-java</artifactId>

|

|

||||||

<version>${snappy-version}</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.iq80.snappy</groupId>

|

|

||||||

<artifactId>snappy</artifactId>

|

|

||||||

<version>0.2</version>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>com.fasterxml.jackson.core</groupId>

|

|

||||||

<artifactId>jackson-core</artifactId>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>com.fasterxml.jackson.core</groupId>

|

|

||||||

<artifactId>jackson-annotations</artifactId>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>com.fasterxml.jackson.core</groupId>

|

|

||||||

<artifactId>jackson-databind</artifactId>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.apache.hadoop</groupId>

|

|

||||||

<artifactId>hadoop-core</artifactId>

|

|

||||||

<version>${hadoop-version}</version>

|

|

||||||

<scope>test</scope>

|

|

||||||

<exclusions>

|

|

||||||

<!-- hadoop's transative dependencies are such a pig -->

|

|

||||||

<exclusion>

|

|

||||||

<groupId>commons-beanutils</groupId>

|

|

||||||

<artifactId>commons-beanutils-core</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>commons-cli</groupId>

|

|

||||||

<artifactId>commons-cli</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>xmlenc</groupId>

|

|

||||||

<artifactId>xmlenc</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>commons-codec</groupId>

|

|

||||||

<artifactId>commons-codec</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>org.apache.commons</groupId>

|

|

||||||

<artifactId>commons-math</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>commons-net</groupId>

|

|

||||||

<artifactId>commons-net</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>commons-httpclient</groupId>

|

|

||||||

<artifactId>commons-httpclient</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>tomcat</groupId>

|

|

||||||

<artifactId>jasper-runtime</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>tomcat</groupId>

|

|

||||||

<artifactId>jasper-compiler</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>commons-el</groupId>

|

|

||||||

<artifactId>commons-el</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>net.java.dev.jets3t</groupId>

|

|

||||||

<artifactId>jets3t</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>net.sf.kosmosfs</groupId>

|

|

||||||

<artifactId>kfs</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>hsqldb</groupId>

|

|

||||||

<artifactId>hsqldb</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>oro</groupId>

|

|

||||||

<artifactId>oro</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>org.eclipse.jdt</groupId>

|

|

||||||

<artifactId>core</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>org.codehaus.jackson</groupId>

|

|

||||||

<artifactId>jackson-mapper-asl</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>org.mortbay.jetty</groupId>

|

|

||||||

<artifactId>jetty</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>org.mortbay.jetty</groupId>

|

|

||||||

<artifactId>jetty-util</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>org.mortbay.jetty</groupId>

|

|

||||||

<artifactId>jetty-api-2.1</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>org.mortbay.jetty</groupId>

|

|

||||||

<artifactId>jsp-2.1</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

<exclusion>

|

|

||||||

<groupId>org.mortbay.jetty</groupId>

|

|

||||||

<artifactId>jsp-api-2.1</artifactId>

|

|

||||||

</exclusion>

|

|

||||||

</exclusions>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<!-- Testing Dependencies -->

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.slf4j</groupId>

|

|

||||||

<artifactId>slf4j-log4j12</artifactId>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>log4j</groupId>

|

|

||||||

<artifactId>log4j</artifactId>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.apache.activemq</groupId>

|

|

||||||

<artifactId>activemq-broker</artifactId>

|

|

||||||

<type>test-jar</type>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.apache.activemq</groupId>

|

|

||||||

<artifactId>activemq-kahadb-store</artifactId>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

|

|

||||||

<!-- Hadoop Testing Deps -->

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.apache.hadoop</groupId>

|

|

||||||

<artifactId>hadoop-test</artifactId>

|

|

||||||

<version>${hadoop-version}</version>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.apache.commons</groupId>

|

|

||||||

<artifactId>commons-lang3</artifactId>

|

|

||||||

<version>${commons-lang-version}</version>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.apache.commons</groupId>

|

|

||||||

<artifactId>commons-math</artifactId>

|

|

||||||

<version>2.2</version>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>org.scalatest</groupId>

|

|

||||||

<artifactId>scalatest_2.11</artifactId>

|

|

||||||

<version>${scalatest-version}</version>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

<dependency>

|

|

||||||

<groupId>junit</groupId>

|

|

||||||

<artifactId>junit</artifactId>

|

|

||||||

<scope>test</scope>

|

|

||||||

</dependency>

|

|

||||||

</dependencies>

|

|

||||||

|

|

||||||

<build>

|

|

||||||

|

|

||||||

<plugins>

|

|

||||||

<plugin>

|

|

||||||

<groupId>net.alchim31.maven</groupId>

|

|

||||||

<artifactId>scala-maven-plugin</artifactId>

|

|

||||||

<version>${scala-plugin-version}</version>

|

|

||||||

<executions>

|

|

||||||

<execution>

|

|

||||||

<id>compile</id>

|

|

||||||

<goals>

|

|

||||||

<goal>compile</goal>

|

|

||||||

</goals>

|

|

||||||

<phase>compile</phase>

|

|

||||||

</execution>

|

|

||||||

<execution>

|

|

||||||

<id>test-compile</id>

|

|

||||||

<goals>

|

|

||||||

<goal>testCompile</goal>

|

|

||||||

</goals>

|

|

||||||

<phase>test-compile</phase>

|

|

||||||

</execution>

|

|

||||||

<execution>

|

|

||||||

<phase>process-resources</phase>

|

|

||||||

<goals>

|

|

||||||

<goal>compile</goal>

|

|

||||||

</goals>

|

|

||||||

</execution>

|

|

||||||

</executions>

|

|

||||||

<configuration>

|

|

||||||

<jvmArgs>

|

|

||||||

<jvmArg>-Xmx1024m</jvmArg>

|

|

||||||

<jvmArg>-Xss8m</jvmArg>

|

|

||||||

</jvmArgs>

|

|

||||||

<scalaVersion>${scala-version}</scalaVersion>

|

|

||||||

<args>

|

|

||||||

<arg>-deprecation</arg>

|

|

||||||

</args>

|

|

||||||

<compilerPlugins>

|

|

||||||

<!-- <compilerPlugin>

|

|

||||||

<groupId>org.fusesource.jvmassert</groupId>

|

|

||||||

<artifactId>jvmassert</artifactId>

|

|

||||||

<version>1.4</version>

|

|

||||||

</compilerPlugin> -->

|

|

||||||

</compilerPlugins>

|

|

||||||

</configuration>

|

|

||||||

</plugin>

      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>
        <configuration>
          <!-- we must turn off the use of system class loader so our tests can find stuff - otherwise ScalaSupport compiler can't find stuff -->
          <useSystemClassLoader>false</useSystemClassLoader>
          <childDelegation>false</childDelegation>
          <useFile>true</useFile>
          <failIfNoTests>false</failIfNoTests>
          <excludes>
            <exclude>**/EnqueueRateScenariosTest.*</exclude>
            <exclude>**/DFSLevelDB*.*</exclude>
          </excludes>
        </configuration>
      </plugin>

      <plugin>
        <groupId>org.fusesource.hawtbuf</groupId>
        <artifactId>hawtbuf-protoc</artifactId>
        <version>${hawtbuf-version}</version>
        <configuration>
          <type>alt</type>
        </configuration>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
            </goals>
          </execution>
        </executions>
      </plugin>

      <plugin>
        <groupId>org.codehaus.mojo</groupId>
        <artifactId>build-helper-maven-plugin</artifactId>
        <executions>
          <execution>
            <id>add-source</id>
            <phase>generate-sources</phase>
            <goals>
              <goal>add-source</goal>
            </goals>
            <configuration>
              <sources>
                <source>${basedir}/src/main/scala</source>
                <source>${basedir}/target/generated-sources/proto</source>
              </sources>
            </configuration>
          </execution>
          <execution>
            <id>add-test-source</id>
            <phase>generate-test-sources</phase>
            <goals>
              <goal>add-test-source</goal>
            </goals>
            <configuration>
              <sources>
                <source>${basedir}/src/test/scala</source>
              </sources>
            </configuration>
          </execution>
        </executions>
      </plugin>
    </plugins>

    <pluginManagement>
      <plugins>
        <!--This plugin's configuration is used to store Eclipse m2e settings only.
            It has no influence on the Maven build itself.-->
        <plugin>
          <groupId>org.eclipse.m2e</groupId>
          <artifactId>lifecycle-mapping</artifactId>
          <version>1.0.0</version>
          <configuration>
            <lifecycleMappingMetadata>
              <pluginExecutions>
                <pluginExecution>
                  <pluginExecutionFilter>
                    <groupId>org.fusesource.hawtbuf</groupId>
                    <artifactId>hawtbuf-protoc</artifactId>
                    <versionRange>[${hawtbuf-version},)</versionRange>
                    <goals>
                      <goal>compile</goal>
                    </goals>
                  </pluginExecutionFilter>
                  <action>
                    <execute><runOnIncremental>true</runOnIncremental></execute>
                    <!--<ignore />-->
                  </action>
                </pluginExecution>
                <pluginExecution>
                  <pluginExecutionFilter>
                    <groupId>net.alchim31.maven</groupId>
                    <artifactId>scala-maven-plugin</artifactId>
                    <versionRange>[0.0.0,)</versionRange>
                    <goals>
                      <goal>compile</goal>
                      <goal>testCompile</goal>
                    </goals>
                  </pluginExecutionFilter>
                  <action>
                    <ignore />
                  </action>
                </pluginExecution>
              </pluginExecutions>
            </lifecycleMappingMetadata>
          </configuration>
        </plugin>
        <plugin>
          <groupId>org.apache.maven.plugins</groupId>
          <artifactId>maven-eclipse-plugin</artifactId>
          <configuration>
            <buildcommands>
              <java.lang.String>org.scala-ide.sdt.core.scalabuilder</java.lang.String>
            </buildcommands>
            <projectnatures>
              <nature>org.scala-ide.sdt.core.scalanature</nature>
              <nature>org.eclipse.jdt.core.javanature</nature>
            </projectnatures>
            <sourceIncludes>
              <sourceInclude>**/*.scala</sourceInclude>
            </sourceIncludes>
          </configuration>
        </plugin>
      </plugins>
    </pluginManagement>
  </build>

  <profiles>
    <profile>
      <id>activemq.tests-sanity</id>
      <activation>
        <property>
          <name>activemq.tests</name>
          <value>smoke</value>
        </property>
      </activation>
      <build>
        <plugins>
          <plugin>
            <artifactId>maven-surefire-plugin</artifactId>
            <configuration>
              <includes>
                <include>**/LevelDBStoreTest.*</include>
              </includes>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </profile>

    <profile>
      <id>activemq.tests-autoTransport</id>
      <activation>
        <property>
          <name>activemq.tests</name>
          <value>autoTransport</value>
        </property>
      </activation>
      <build>
        <plugins>
          <plugin>
            <artifactId>maven-surefire-plugin</artifactId>
            <configuration>
              <excludes>
                <exclude>**</exclude>
              </excludes>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </profile>

    <profile>
      <id>activemq.tests.windows.excludes</id>
      <activation>
        <os>
          <family>Windows</family>
        </os>
      </activation>
      <build>
        <plugins>
          <plugin>
            <artifactId>maven-surefire-plugin</artifactId>
            <configuration>
              <excludes combine.children="append">
                <exclude>**/*.*</exclude>
              </excludes>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </profile>

    <profile>
      <id>activemq.tests.solaris.excludes</id>
      <activation>
        <property>
          <name>os.name</name>
          <value>SunOS</value>
        </property>
      </activation>
      <build>
        <plugins>
          <plugin>
            <artifactId>maven-surefire-plugin</artifactId>
            <configuration>
              <excludes combine.children="append">
                <exclude>**/*.*</exclude>
              </excludes>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </profile>

    <profile>
      <id>activemq.tests.aix.excludes</id>
      <activation>
        <property>
          <name>os.name</name>
          <value>AIX</value>
        </property>
      </activation>
      <build>
        <plugins>
          <plugin>
            <artifactId>maven-surefire-plugin</artifactId>
            <configuration>
              <excludes combine.children="append">
                <exclude>**/*.*</exclude>
              </excludes>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </profile>

    <profile>
      <id>activemq.tests.hpux.excludes</id>
      <activation>
        <os>
          <family>HP-UX</family>
        </os>
      </activation>
      <build>
        <plugins>
          <plugin>
            <artifactId>maven-surefire-plugin</artifactId>
            <configuration>
              <excludes combine.children="append">
                <exclude>**/*.*</exclude>
              </excludes>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </profile>
  </profiles>

</project>


@ -1,94 +0,0 @@

# The LevelDB Store

## Overview

The LevelDB Store is a message store implementation that can be used in ActiveMQ messaging servers.

|

|

||||||

|

|

||||||

## LevelDB vs KahaDB

|

|

||||||

|

|

||||||

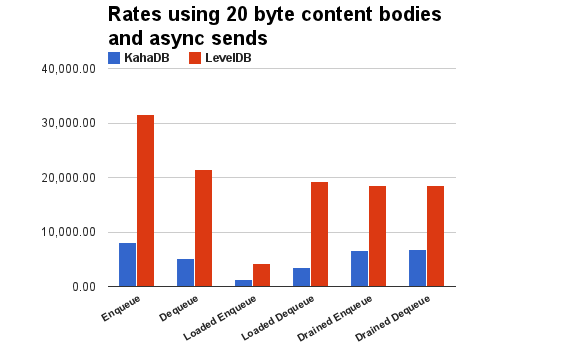

How is the LevelDB Store better than the default KahaDB store:

|

|

||||||

|

|

||||||

* It maitains fewer index entries per message than KahaDB which means it has a higher persistent throughput.

|

|

||||||

* Faster recovery when a broker restarts

|

|

||||||

* Since the broker tends to write and read queue entries sequentially, the LevelDB based index provide a much better performance than the B-Tree based indexes of KahaDB which increases throughput.

|

|

||||||

* Unlike the KahaDB indexes, the LevelDB indexes support concurrent read access which further improves read throughput.

|

|

||||||

* Pauseless data log file garbage collection cycles.

|

|

||||||

* It uses fewer read IO operations to load stored messages.

|

|

||||||

* If a message is copied to multiple queues (Typically happens if your using virtual topics with multiple

|

|

||||||

consumers), then LevelDB will only journal the payload of the message once. KahaDB will journal it multiple times.

|

|

||||||

* It exposes it's status via JMX for monitoring

|

|

||||||

* Supports replication to get High Availability

|

|

||||||

|

|

||||||

See the following chart to get an idea on how much better you can expect the LevelDB store to perform vs the KahaDB store:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## How to Use with ActiveMQ

Update the broker configuration file and change the `persistenceAdapter` element's
settings so that it uses the LevelDB store, as in the following Spring XML
configuration example:

    <persistenceAdapter>
      <levelDB directory="${activemq.base}/data/leveldb" logSize="107374182"/>
    </persistenceAdapter>
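
The `logSize` value in the example above is a plain byte count: 107374182 bytes is roughly 102.4 MiB, or one tenth of a GiB. As a rough aid for picking such values, the sketch below converts between mebibytes and bytes. The class and method names (`LogSizeCalc`, `mib`, `toMib`) are made up for illustration and are not part of ActiveMQ.

```java
// Hypothetical helper, not part of ActiveMQ: converts between MiB and the
// raw byte counts used by size attributes such as logSize.
public class LogSizeCalc {

    private static final long BYTES_PER_MIB = 1024L * 1024L;

    // Number of bytes in the given number of mebibytes.
    public static long mib(long n) {
        return n * BYTES_PER_MIB;
    }

    // Size in mebibytes of the given byte count.
    public static double toMib(long bytes) {
        return (double) bytes / BYTES_PER_MIB;
    }

    public static void main(String[] args) {
        // The value used in the example configuration above.
        long exampleLogSize = 107374182L;
        System.out.printf("logSize=%d bytes is about %.1f MiB%n",
                exampleLogSize, toMib(exampleLogSize));

        // A round 100 MiB expressed in bytes.
        System.out.println("100 MiB = " + mib(100) + " bytes");
    }
}
```

Doing the arithmetic in `long` avoids overflow if larger sizes (several GiB) are ever computed this way.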

### Configuration / Property Reference

*TODO*

### JMX Attribute and Operation Reference

*TODO*

## Known Limitations

* The store does not do any duplicate detection of messages.

## Built in High Availability Support

You can also use a High Availability (HA) version of the LevelDB store which
works with Hadoop based file systems to achieve HA of your stored messages.

**Q:** What are the requirements?
**A:** An existing Hadoop 1.0.0 cluster

**Q:** How does it work during the normal operating cycle?
**A:** It uses HDFS to store a highly available copy of the local leveldb storage files. As local log files are being written to, it also maintains a mirror copy on HDFS. If you have sync enabled on the store, an HDFS file sync is performed instead of a local disk sync. When the index is checkpointed, we upload any leveldb .sst files that have not yet been uploaded to HDFS.

**Q:** What happens when a broker fails and we start up a new slave to take over?
**A:** The slave will download from HDFS the log files and the .sst files associated with the latest uploaded index. Then normal leveldb store recovery kicks in, which updates the index using the log files.

**Q:** How do I use the HA version of the LevelDB store?
**A:** Update your activemq.xml to use a `persistenceAdapter` setting similar to the following:

    <persistenceAdapter>
      <bean xmlns="http://www.springframework.org/schema/beans"
          class="org.apache.activemq.leveldb.HALevelDBStore">

        <!-- File system URL to replicate to -->
        <property name="dfsUrl" value="hdfs://hadoop-name-node"/>
        <!-- Directory in the file system to store the data in -->
        <property name="dfsDirectory" value="activemq"/>

        <property name="directory" value="${activemq.base}/data/leveldb"/>
        <property name="logSize" value="107374182"/>
        <!-- <property name="sync" value="false"/> -->
      </bean>
    </persistenceAdapter>

Notice the implementation class name changes to 'HALevelDBStore'.
Instead of using a 'dfsUrl' property, you can also just load an existing Hadoop configuration file if it's available on your system, for example:

    <property name="dfsConfig" value="/opt/hadoop-1.0.0/conf/core-site.xml"/>

**Q:** Who handles starting up the Slave?
**A:** You do. :) This implementation assumes master startup/elections are performed externally and that 2 brokers are never running against the same HDFS file path. In practice this means you need something like ZooKeeper to control starting new brokers to take over failed masters.

**Q:** Can this run against something other than HDFS?
**A:** It should be able to run with any Hadoop supported file system like CloudStore, S3, MapR, NFS, etc. (well, at least in theory; I've only tested against HDFS).

**Q:** Can 'X' performance be optimized?
**A:** There are a bunch of ways to improve the performance of many of the things that the current version of the store is doing. For example: aggregating the .sst files into an archive to make more efficient use of HDFS; concurrent downloading to improve recovery performance; lazy downloading of the oldest log files to make recovery faster; async HDFS writes to avoid blocking local updates; and running brokers in a warm 'standby' mode, which keeps downloading new log updates and applying index updates from the master as they get uploaded to HDFS, to get faster failovers.

**Q:** Does the broker fail if HDFS fails?
**A:** Currently, yes. But it should be possible to make the master resilient to HDFS failures.


@ -1,208 +0,0 @@

/**
 * Licensed to the Apache Software Foundation (ASF) under one or more
 * contributor license agreements. See the NOTICE file distributed with
 * this work for additional information regarding copyright ownership.
 * The ASF licenses this file to You under the Apache License, Version 2.0
 * (the "License"); you may not use this file except in compliance with
 * the License. You may obtain a copy of the License at
 *
 *      http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
package org.apache.activemq.leveldb;

import org.apache.activemq.store.PersistenceAdapter;
import org.apache.activemq.store.PersistenceAdapterFactory;

import java.io.File;
import java.io.IOException;

/**
 * A factory which can create configured LevelDBStore objects.
 */

public class LevelDBStoreFactory implements PersistenceAdapterFactory {

    private int asyncBufferSize = 1024*1024*4;
    private File directory = new File("LevelDB");
    private int flushDelay = 1000*5;
    private int indexBlockRestartInterval = 16;
    private int indexBlockSize = 4 * 1024;
    private long indexCacheSize = 1024 * 1024 * 256L;
    private String indexCompression = "snappy";
    private String indexFactory = "org.fusesource.leveldbjni.JniDBFactory, org.iq80.leveldb.impl.Iq80DBFactory";
    private int indexMaxOpenFiles = 1000;
    private int indexWriteBufferSize = 1024*1024*6;
    private String logCompression = "none";
    private File logDirectory;
    private long logSize = 1024 * 1024 * 100;
    private boolean monitorStats;
    private boolean paranoidChecks;
    private boolean sync = true;
    private boolean verifyChecksums;


    @Override
    public PersistenceAdapter createPersistenceAdapter() throws IOException {
        LevelDBStore store = new LevelDBStore();
        store.setVerifyChecksums(verifyChecksums);
        store.setAsyncBufferSize(asyncBufferSize);
        store.setDirectory(directory);
        store.setFlushDelay(flushDelay);
        store.setIndexBlockRestartInterval(indexBlockRestartInterval);
        store.setIndexBlockSize(indexBlockSize);
        store.setIndexCacheSize(indexCacheSize);
        store.setIndexCompression(indexCompression);
        store.setIndexFactory(indexFactory);
        store.setIndexMaxOpenFiles(indexMaxOpenFiles);
        store.setIndexWriteBufferSize(indexWriteBufferSize);
        store.setLogCompression(logCompression);
        store.setLogDirectory(logDirectory);
        store.setLogSize(logSize);
        store.setMonitorStats(monitorStats);
        store.setParanoidChecks(paranoidChecks);
        store.setSync(sync);
        return store;
    }

    public int getAsyncBufferSize() {
        return asyncBufferSize;
    }

    public void setAsyncBufferSize(int asyncBufferSize) {
        this.asyncBufferSize = asyncBufferSize;
    }

    public File getDirectory() {
        return directory;
    }

    public void setDirectory(File directory) {
        this.directory = directory;
    }

    public int getFlushDelay() {
        return flushDelay;
    }

    public void setFlushDelay(int flushDelay) {
        this.flushDelay = flushDelay;
    }

    public int getIndexBlockRestartInterval() {
        return indexBlockRestartInterval;
    }

    public void setIndexBlockRestartInterval(int indexBlockRestartInterval) {
        this.indexBlockRestartInterval = indexBlockRestartInterval;
    }

    public int getIndexBlockSize() {
        return indexBlockSize;
    }

    public void setIndexBlockSize(int indexBlockSize) {
        this.indexBlockSize = indexBlockSize;
    }

    public long getIndexCacheSize() {
        return indexCacheSize;
    }

    public void setIndexCacheSize(long indexCacheSize) {
        this.indexCacheSize = indexCacheSize;
    }

    public String getIndexCompression() {
        return indexCompression;
    }

    public void setIndexCompression(String indexCompression) {
        this.indexCompression = indexCompression;
    }

    public String getIndexFactory() {
        return indexFactory;
    }

    public void setIndexFactory(String indexFactory) {
        this.indexFactory = indexFactory;
    }

    public int getIndexMaxOpenFiles() {
        return indexMaxOpenFiles;

|