mirror of https://github.com/apache/activemq.git

Fixes AMQ-4080: Integrate the Fusesource LevelDB module into the ActiveMQ build.

git-svn-id: https://svn.apache.org/repos/asf/activemq/trunk@1389882 13f79535-47bb-0310-9956-ffa450edef68


parent f3c9c74334
commit b20d5411d1

@@ -1056,6 +1056,8 @@
          </execution>
        </executions>
      </plugin>
      <!-- disabled until the xbean 3.11.2 plugin is released -->
      <!--
      <plugin>
        <groupId>org.apache.xbean</groupId>
        <artifactId>maven-xbean-plugin</artifactId>

@@ -1064,6 +1066,9 @@
          <execution>
            <phase>process-classes</phase>
            <configuration>
              <includes>
                <include>${basedir}/../activemq-leveldb/src/main/scala</include>
              </includes>
              <strictXsdOrder>false</strictXsdOrder>
              <namespace>http://activemq.apache.org/schema/core</namespace>
              <schema>${basedir}/target/classes/activemq.xsd</schema>

@@ -1084,6 +1089,7 @@
          </dependency>
        </dependencies>
      </plugin>
      -->
      <plugin>
        <groupId>org.codehaus.mojo</groupId>
        <artifactId>cobertura-maven-plugin</artifactId>

@@ -1222,5 +1228,47 @@
        </plugins>
      </build>
    </profile>
    <!-- To generate the XBean meta-data, run: mvn -P xbean-generate clean process-classes -->
    <profile>
      <id>xbean-generate</id>
      <build>
        <plugins>
          <plugin>
            <groupId>org.apache.xbean</groupId>
            <artifactId>maven-xbean-plugin</artifactId>
            <version>3.11.2-SNAPSHOT</version>
            <executions>
              <execution>
                <phase>process-classes</phase>
                <configuration>
                  <includes>
                    <include>${basedir}/../activemq-leveldb/src/main/java</include>
                  </includes>
                  <classPathIncludes>
                    <classPathInclude>${basedir}/../activemq-leveldb/target/classes</classPathInclude>
                  </classPathIncludes>
                  <strictXsdOrder>false</strictXsdOrder>
                  <namespace>http://activemq.apache.org/schema/core</namespace>
                  <schema>${basedir}/src/main/resources/activemq.xsd</schema>
                  <outputDir>${basedir}/src/main/resources</outputDir>
                  <generateSpringSchemasFile>false</generateSpringSchemasFile>
                  <excludedClasses>org.apache.activemq.broker.jmx.AnnotatedMBean,org.apache.activemq.broker.jmx.DestinationViewMBean</excludedClasses>
                </configuration>
                <goals>
                  <goal>mapping</goal>
                </goals>
              </execution>
            </executions>
            <dependencies>
              <dependency>
                <groupId>com.thoughtworks.qdox</groupId>
                <artifactId>qdox</artifactId>
                <version>1.12</version>
              </dependency>
            </dependencies>
          </plugin>
        </plugins>
      </build>
    </profile>
  </profiles>
</project>

@@ -0,0 +1,381 @@
# NOTE: this file is autogenerated by Apache XBean

# beans

abortSlowConsumerStrategy = org.apache.activemq.broker.region.policy.AbortSlowConsumerStrategy

amqPersistenceAdapter = org.apache.activemq.store.amq.AMQPersistenceAdapter
amqPersistenceAdapter.indexPageSize.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
amqPersistenceAdapter.maxCheckpointMessageAddSize.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor
amqPersistenceAdapter.maxFileLength.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
amqPersistenceAdapter.maxReferenceFileLength.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor

amqPersistenceAdapterFactory = org.apache.activemq.store.amq.AMQPersistenceAdapterFactory

authenticationUser = org.apache.activemq.security.AuthenticationUser
org.apache.activemq.security.AuthenticationUser(java.lang.String,java.lang.String,java.lang.String).parameterNames = username password groups

authorizationEntry = org.apache.activemq.security.AuthorizationEntry

authorizationMap = org.apache.activemq.security.DefaultAuthorizationMap
org.apache.activemq.security.DefaultAuthorizationMap(java.util.List).parameterNames = authorizationEntries

authorizationPlugin = org.apache.activemq.security.AuthorizationPlugin
org.apache.activemq.security.AuthorizationPlugin(org.apache.activemq.security.AuthorizationMap).parameterNames = map

axionJDBCAdapter = org.apache.activemq.store.jdbc.adapter.AxionJDBCAdapter

blobJDBCAdapter = org.apache.activemq.store.jdbc.adapter.BlobJDBCAdapter

broker = org.apache.activemq.xbean.XBeanBrokerService
broker.initMethod = afterPropertiesSet
broker.destroyMethod = destroy
broker.advisorySupport.propertyEditor = org.apache.activemq.util.BooleanEditor
broker.deleteAllMessagesOnStartup.propertyEditor = org.apache.activemq.util.BooleanEditor
broker.passiveSlave.propertyEditor = org.apache.activemq.util.BooleanEditor
broker.persistent.propertyEditor = org.apache.activemq.util.BooleanEditor
broker.schedulerSupport.propertyEditor = org.apache.activemq.util.BooleanEditor
broker.shutdownOnSlaveFailure.propertyEditor = org.apache.activemq.util.BooleanEditor
broker.systemExitOnShutdown.propertyEditor = org.apache.activemq.util.BooleanEditor
broker.useJmx.propertyEditor = org.apache.activemq.util.BooleanEditor
broker.waitForSlave.propertyEditor = org.apache.activemq.util.BooleanEditor

brokerService = org.apache.activemq.broker.BrokerService
brokerService.advisorySupport.propertyEditor = org.apache.activemq.util.BooleanEditor
brokerService.deleteAllMessagesOnStartup.propertyEditor = org.apache.activemq.util.BooleanEditor
brokerService.passiveSlave.propertyEditor = org.apache.activemq.util.BooleanEditor
brokerService.persistent.propertyEditor = org.apache.activemq.util.BooleanEditor
brokerService.schedulerSupport.propertyEditor = org.apache.activemq.util.BooleanEditor
brokerService.shutdownOnSlaveFailure.propertyEditor = org.apache.activemq.util.BooleanEditor
brokerService.systemExitOnShutdown.propertyEditor = org.apache.activemq.util.BooleanEditor
brokerService.useJmx.propertyEditor = org.apache.activemq.util.BooleanEditor
brokerService.waitForSlave.propertyEditor = org.apache.activemq.util.BooleanEditor

bytesJDBCAdapter = org.apache.activemq.store.jdbc.adapter.BytesJDBCAdapter

cachedLDAPAuthorizationMap = org.apache.activemq.security.CachedLDAPAuthorizationMap

commandAgent = org.apache.activemq.broker.util.CommandAgent
commandAgent.initMethod = start
commandAgent.destroyMethod = stop

compositeDemandForwardingBridge = org.apache.activemq.network.CompositeDemandForwardingBridge
org.apache.activemq.network.CompositeDemandForwardingBridge(org.apache.activemq.network.NetworkBridgeConfiguration,org.apache.activemq.transport.Transport,org.apache.activemq.transport.Transport).parameterNames = configuration localBroker remoteBroker

compositeQueue = org.apache.activemq.broker.region.virtual.CompositeQueue

compositeTopic = org.apache.activemq.broker.region.virtual.CompositeTopic

conditionalNetworkBridgeFilterFactory = org.apache.activemq.network.ConditionalNetworkBridgeFilterFactory

connectionDotFilePlugin = org.apache.activemq.broker.view.ConnectionDotFilePlugin

connectionFactory = org.apache.activemq.spring.ActiveMQConnectionFactory
connectionFactory.initMethod = afterPropertiesSet

constantPendingMessageLimitStrategy = org.apache.activemq.broker.region.policy.ConstantPendingMessageLimitStrategy

database-locker = org.apache.activemq.store.jdbc.DefaultDatabaseLocker

db2JDBCAdapter = org.apache.activemq.store.jdbc.adapter.DB2JDBCAdapter

defaultIOExceptionHandler = org.apache.activemq.util.DefaultIOExceptionHandler

defaultJDBCAdapter = org.apache.activemq.store.jdbc.adapter.DefaultJDBCAdapter

defaultNetworkBridgeFilterFactory = org.apache.activemq.network.DefaultNetworkBridgeFilterFactory

defaultUsageCapacity = org.apache.activemq.usage.DefaultUsageCapacity

demandForwardingBridge = org.apache.activemq.network.DemandForwardingBridge
org.apache.activemq.network.DemandForwardingBridge(org.apache.activemq.network.NetworkBridgeConfiguration,org.apache.activemq.transport.Transport,org.apache.activemq.transport.Transport).parameterNames = configuration localBroker remoteBroker

destinationDotFilePlugin = org.apache.activemq.broker.view.DestinationDotFilePlugin

destinationEntry = org.apache.activemq.filter.DefaultDestinationMapEntry

destinationPathSeparatorPlugin = org.apache.activemq.broker.util.DestinationPathSeparatorBroker

discardingDLQBrokerPlugin = org.apache.activemq.plugin.DiscardingDLQBrokerPlugin

fileCursor = org.apache.activemq.broker.region.policy.FilePendingSubscriberMessageStoragePolicy

fileDurableSubscriberCursor = org.apache.activemq.broker.region.policy.FilePendingDurableSubscriberMessageStoragePolicy

fileQueueCursor = org.apache.activemq.broker.region.policy.FilePendingQueueMessageStoragePolicy

filteredDestination = org.apache.activemq.broker.region.virtual.FilteredDestination

filteredKahaDB = org.apache.activemq.store.kahadb.FilteredKahaDBPersistenceAdapter
org.apache.activemq.store.kahadb.FilteredKahaDBPersistenceAdapter(org.apache.activemq.command.ActiveMQDestination,org.apache.activemq.store.kahadb.KahaDBPersistenceAdapter).parameterNames = destination adapter

fixedCountSubscriptionRecoveryPolicy = org.apache.activemq.broker.region.policy.FixedCountSubscriptionRecoveryPolicy

fixedSizedSubscriptionRecoveryPolicy = org.apache.activemq.broker.region.policy.FixedSizedSubscriptionRecoveryPolicy

forcePersistencyModeBroker = org.apache.activemq.plugin.ForcePersistencyModeBroker
org.apache.activemq.plugin.ForcePersistencyModeBroker(org.apache.activemq.broker.Broker).parameterNames = next

forcePersistencyModeBrokerPlugin = org.apache.activemq.plugin.ForcePersistencyModeBrokerPlugin

forwardingBridge = org.apache.activemq.network.ForwardingBridge
org.apache.activemq.network.ForwardingBridge(org.apache.activemq.transport.Transport,org.apache.activemq.transport.Transport).parameterNames = localBroker remoteBroker

hsqldb-jdbc-adapter = org.apache.activemq.store.jdbc.adapter.HsqldbJDBCAdapter

imageBasedJDBCAdaptor = org.apache.activemq.store.jdbc.adapter.ImageBasedJDBCAdaptor

inboundQueueBridge = org.apache.activemq.network.jms.InboundQueueBridge
org.apache.activemq.network.jms.InboundQueueBridge(java.lang.String).parameterNames = inboundQueueName

inboundTopicBridge = org.apache.activemq.network.jms.InboundTopicBridge
org.apache.activemq.network.jms.InboundTopicBridge(java.lang.String).parameterNames = inboundTopicName

individualDeadLetterStrategy = org.apache.activemq.broker.region.policy.IndividualDeadLetterStrategy

informixJDBCAdapter = org.apache.activemq.store.jdbc.adapter.InformixJDBCAdapter

jDBCIOExceptionHandler = org.apache.activemq.store.jdbc.JDBCIOExceptionHandler

jaasAuthenticationPlugin = org.apache.activemq.security.JaasAuthenticationPlugin

jaasCertificateAuthenticationPlugin = org.apache.activemq.security.JaasCertificateAuthenticationPlugin

jaasDualAuthenticationPlugin = org.apache.activemq.security.JaasDualAuthenticationPlugin

jdbcPersistenceAdapter = org.apache.activemq.store.jdbc.JDBCPersistenceAdapter
org.apache.activemq.store.jdbc.JDBCPersistenceAdapter(javax.sql.DataSource,org.apache.activemq.wireformat.WireFormat).parameterNames = ds wireFormat

jmsQueueConnector = org.apache.activemq.network.jms.JmsQueueConnector

jmsTopicConnector = org.apache.activemq.network.jms.JmsTopicConnector

journalPersistenceAdapter = org.apache.activemq.store.journal.JournalPersistenceAdapter
org.apache.activemq.store.journal.JournalPersistenceAdapter(org.apache.activeio.journal.Journal,org.apache.activemq.store.PersistenceAdapter,org.apache.activemq.thread.TaskRunnerFactory).parameterNames = journal longTermPersistence taskRunnerFactory

journalPersistenceAdapterFactory = org.apache.activemq.store.journal.JournalPersistenceAdapterFactory
journalPersistenceAdapterFactory.journalLogFileSize.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor

journaledJDBC = org.apache.activemq.store.PersistenceAdapterFactoryBean
journaledJDBC.journalLogFileSize.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor

kahaDB = org.apache.activemq.store.kahadb.KahaDBPersistenceAdapter
kahaDB.indexCacheSize.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
kahaDB.indexWriteBatchSize.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
kahaDB.journalMaxFileLength.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor
kahaDB.journalMaxWriteBatchSize.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor

kahaPersistenceAdapter = org.apache.activemq.store.kahadaptor.KahaPersistenceAdapter
kahaPersistenceAdapter.maxDataFileLength.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
org.apache.activemq.store.kahadaptor.KahaPersistenceAdapter(java.util.concurrent.atomic.AtomicLong).parameterNames = size

lDAPAuthorizationMap = org.apache.activemq.security.LDAPAuthorizationMap
org.apache.activemq.security.LDAPAuthorizationMap(java.util.Map).parameterNames = options

lastImageSubscriptionRecoveryPolicy = org.apache.activemq.broker.region.policy.LastImageSubscriptionRecoveryPolicy

ldapNetworkConnector = org.apache.activemq.network.LdapNetworkConnector
ldapNetworkConnector.prefetchSize.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor

lease-database-locker = org.apache.activemq.store.jdbc.LeaseDatabaseLocker

levelDB = org.apache.activemq.store.leveldb.LevelDBPersistenceAdapter

loggingBrokerPlugin = org.apache.activemq.broker.util.LoggingBrokerPlugin
loggingBrokerPlugin.initMethod = afterPropertiesSet

mKahaDB = org.apache.activemq.store.kahadb.MultiKahaDBPersistenceAdapter
mKahaDB.journalMaxFileLength.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor
mKahaDB.journalWriteBatchSize.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor

managementContext = org.apache.activemq.broker.jmx.ManagementContext
managementContext.connectorPort.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor
managementContext.createConnector.propertyEditor = org.apache.activemq.util.BooleanEditor
managementContext.rmiServerPort.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor
org.apache.activemq.broker.jmx.ManagementContext(javax.management.MBeanServer).parameterNames = server

masterConnector = org.apache.activemq.broker.ft.MasterConnector
org.apache.activemq.broker.ft.MasterConnector(java.lang.String).parameterNames = remoteUri

maxdb-jdbc-adapter = org.apache.activemq.store.jdbc.adapter.MaxDBJDBCAdapter

memoryPersistenceAdapter = org.apache.activemq.store.memory.MemoryPersistenceAdapter

memoryUsage = org.apache.activemq.usage.MemoryUsage
memoryUsage.limit.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
memoryUsage.percentUsageMinDelta.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
org.apache.activemq.usage.MemoryUsage(org.apache.activemq.usage.MemoryUsage).parameterNames = parent
org.apache.activemq.usage.MemoryUsage(java.lang.String).parameterNames = name
org.apache.activemq.usage.MemoryUsage(org.apache.activemq.usage.MemoryUsage,java.lang.String).parameterNames = parent name
org.apache.activemq.usage.MemoryUsage(org.apache.activemq.usage.MemoryUsage,java.lang.String,float).parameterNames = parent name portion

messageGroupHashBucketFactory = org.apache.activemq.broker.region.group.MessageGroupHashBucketFactory

mirroredQueue = org.apache.activemq.broker.region.virtual.MirroredQueue

multicastNetworkConnector = org.apache.activemq.network.MulticastNetworkConnector
multicastNetworkConnector.prefetchSize.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor
org.apache.activemq.network.MulticastNetworkConnector(java.net.URI).parameterNames = remoteURI

multicastTraceBrokerPlugin = org.apache.activemq.broker.util.MulticastTraceBrokerPlugin

mysql-jdbc-adapter = org.apache.activemq.store.jdbc.adapter.MySqlJDBCAdapter

networkConnector = org.apache.activemq.network.DiscoveryNetworkConnector
networkConnector.prefetchSize.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor
org.apache.activemq.network.DiscoveryNetworkConnector(java.net.URI).parameterNames = discoveryURI

noSubscriptionRecoveryPolicy = org.apache.activemq.broker.region.policy.NoSubscriptionRecoveryPolicy

oldestMessageEvictionStrategy = org.apache.activemq.broker.region.policy.OldestMessageEvictionStrategy

oldestMessageWithLowestPriorityEvictionStrategy = org.apache.activemq.broker.region.policy.OldestMessageWithLowestPriorityEvictionStrategy

oracleBlobJDBCAdapter = org.apache.activemq.store.jdbc.adapter.OracleBlobJDBCAdapter

oracleJDBCAdapter = org.apache.activemq.store.jdbc.adapter.OracleJDBCAdapter

outboundQueueBridge = org.apache.activemq.network.jms.OutboundQueueBridge
org.apache.activemq.network.jms.OutboundQueueBridge(java.lang.String).parameterNames = outboundQueueName

outboundTopicBridge = org.apache.activemq.network.jms.OutboundTopicBridge
org.apache.activemq.network.jms.OutboundTopicBridge(java.lang.String).parameterNames = outboundTopicName

pListStore = org.apache.activemq.store.kahadb.plist.PListStore

policyEntry = org.apache.activemq.broker.region.policy.PolicyEntry
policyEntry.memoryLimit.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor

policyMap = org.apache.activemq.broker.region.policy.PolicyMap

postgresql-jdbc-adapter = org.apache.activemq.store.jdbc.adapter.PostgresqlJDBCAdapter

prefetchPolicy = org.apache.activemq.ActiveMQPrefetchPolicy

prefetchRatePendingMessageLimitStrategy = org.apache.activemq.broker.region.policy.PrefetchRatePendingMessageLimitStrategy

priorityNetworkDispatchPolicy = org.apache.activemq.broker.region.policy.PriorityNetworkDispatchPolicy

proxyConnector = org.apache.activemq.proxy.ProxyConnector

queryBasedSubscriptionRecoveryPolicy = org.apache.activemq.broker.region.policy.QueryBasedSubscriptionRecoveryPolicy

queue = org.apache.activemq.command.ActiveMQQueue
org.apache.activemq.command.ActiveMQQueue(java.lang.String).parameterNames = name

queueDispatchSelector = org.apache.activemq.broker.region.QueueDispatchSelector
org.apache.activemq.broker.region.QueueDispatchSelector(org.apache.activemq.command.ActiveMQDestination).parameterNames = destination

reconnectionPolicy = org.apache.activemq.network.jms.ReconnectionPolicy

redeliveryPlugin = org.apache.activemq.broker.util.RedeliveryPlugin

redeliveryPolicy = org.apache.activemq.RedeliveryPolicy

redeliveryPolicyMap = org.apache.activemq.broker.region.policy.RedeliveryPolicyMap

roundRobinDispatchPolicy = org.apache.activemq.broker.region.policy.RoundRobinDispatchPolicy

shared-file-locker = org.apache.activemq.store.SharedFileLocker

sharedDeadLetterStrategy = org.apache.activemq.broker.region.policy.SharedDeadLetterStrategy

simpleAuthenticationPlugin = org.apache.activemq.security.SimpleAuthenticationPlugin
org.apache.activemq.security.SimpleAuthenticationPlugin(java.util.List).parameterNames = users

simpleAuthorizationMap = org.apache.activemq.security.SimpleAuthorizationMap
org.apache.activemq.security.SimpleAuthorizationMap(org.apache.activemq.filter.DestinationMap,org.apache.activemq.filter.DestinationMap,org.apache.activemq.filter.DestinationMap).parameterNames = writeACLs readACLs adminACLs

simpleDispatchPolicy = org.apache.activemq.broker.region.policy.SimpleDispatchPolicy

simpleDispatchSelector = org.apache.activemq.broker.region.policy.SimpleDispatchSelector
org.apache.activemq.broker.region.policy.SimpleDispatchSelector(org.apache.activemq.command.ActiveMQDestination).parameterNames = destination

simpleJmsMessageConvertor = org.apache.activemq.network.jms.SimpleJmsMessageConvertor

simpleMessageGroupMapFactory = org.apache.activemq.broker.region.group.SimpleMessageGroupMapFactory

sslContext = org.apache.activemq.spring.SpringSslContext
sslContext.initMethod = afterPropertiesSet

statements = org.apache.activemq.store.jdbc.Statements

statisticsBrokerPlugin = org.apache.activemq.plugin.StatisticsBrokerPlugin

storeCursor = org.apache.activemq.broker.region.policy.StorePendingQueueMessageStoragePolicy

storeDurableSubscriberCursor = org.apache.activemq.broker.region.policy.StorePendingDurableSubscriberMessageStoragePolicy

storeUsage = org.apache.activemq.usage.StoreUsage
storeUsage.limit.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
storeUsage.percentUsageMinDelta.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
org.apache.activemq.usage.StoreUsage(java.lang.String,org.apache.activemq.store.PersistenceAdapter).parameterNames = name store
org.apache.activemq.usage.StoreUsage(org.apache.activemq.usage.StoreUsage,java.lang.String).parameterNames = parent name

streamJDBCAdapter = org.apache.activemq.store.jdbc.adapter.StreamJDBCAdapter

strictOrderDispatchPolicy = org.apache.activemq.broker.region.policy.StrictOrderDispatchPolicy

sybase-jdbc-adapter = org.apache.activemq.store.jdbc.adapter.SybaseJDBCAdapter

systemUsage = org.apache.activemq.usage.SystemUsage
org.apache.activemq.usage.SystemUsage(java.lang.String,org.apache.activemq.store.PersistenceAdapter,org.apache.activemq.store.kahadb.plist.PListStore).parameterNames = name adapter tempStore
org.apache.activemq.usage.SystemUsage(org.apache.activemq.usage.SystemUsage,java.lang.String).parameterNames = parent name

taskRunnerFactory = org.apache.activemq.thread.TaskRunnerFactory
org.apache.activemq.thread.TaskRunnerFactory(java.lang.String).parameterNames = name
org.apache.activemq.thread.TaskRunnerFactory(java.lang.String,int,boolean,int,boolean).parameterNames = name priority daemon maxIterationsPerRun dedicatedTaskRunner
org.apache.activemq.thread.TaskRunnerFactory(java.lang.String,int,boolean,int,boolean,int).parameterNames = name priority daemon maxIterationsPerRun dedicatedTaskRunner maxThreadPoolSize

tempDestinationAuthorizationEntry = org.apache.activemq.security.TempDestinationAuthorizationEntry

tempQueue = org.apache.activemq.command.ActiveMQTempQueue
org.apache.activemq.command.ActiveMQTempQueue(java.lang.String).parameterNames = name
org.apache.activemq.command.ActiveMQTempQueue(org.apache.activemq.command.ConnectionId,long).parameterNames = connectionId sequenceId

tempTopic = org.apache.activemq.command.ActiveMQTempTopic
org.apache.activemq.command.ActiveMQTempTopic(java.lang.String).parameterNames = name
org.apache.activemq.command.ActiveMQTempTopic(org.apache.activemq.command.ConnectionId,long).parameterNames = connectionId sequenceId

tempUsage = org.apache.activemq.usage.TempUsage
tempUsage.limit.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
tempUsage.percentUsageMinDelta.propertyEditor = org.apache.activemq.util.MemoryPropertyEditor
org.apache.activemq.usage.TempUsage(java.lang.String,org.apache.activemq.store.kahadb.plist.PListStore).parameterNames = name store
org.apache.activemq.usage.TempUsage(org.apache.activemq.usage.TempUsage,java.lang.String).parameterNames = parent name

timeStampingBrokerPlugin = org.apache.activemq.broker.util.TimeStampingBrokerPlugin

timedSubscriptionRecoveryPolicy = org.apache.activemq.broker.region.policy.TimedSubscriptionRecoveryPolicy

topic = org.apache.activemq.command.ActiveMQTopic
org.apache.activemq.command.ActiveMQTopic(java.lang.String).parameterNames = name

traceBrokerPathPlugin = org.apache.activemq.broker.util.TraceBrokerPathPlugin

transact-database-locker = org.apache.activemq.store.jdbc.adapter.TransactDatabaseLocker

transact-jdbc-adapter = org.apache.activemq.store.jdbc.adapter.TransactJDBCAdapter

transportConnector = org.apache.activemq.broker.TransportConnector
org.apache.activemq.broker.TransportConnector(org.apache.activemq.transport.TransportServer).parameterNames = server

udpTraceBrokerPlugin = org.apache.activemq.broker.util.UDPTraceBrokerPlugin

uniquePropertyMessageEvictionStrategy = org.apache.activemq.broker.region.policy.UniquePropertyMessageEvictionStrategy

usageCapacity = org.apache.activemq.usage.UsageCapacity

virtualDestinationInterceptor = org.apache.activemq.broker.region.virtual.VirtualDestinationInterceptor

virtualSelectorCacheBrokerPlugin = org.apache.activemq.plugin.SubQueueSelectorCacheBrokerPlugin

virtualTopic = org.apache.activemq.broker.region.virtual.VirtualTopic

vmCursor = org.apache.activemq.broker.region.policy.VMPendingSubscriberMessageStoragePolicy

vmDurableCursor = org.apache.activemq.broker.region.policy.VMPendingDurableSubscriberMessageStoragePolicy

vmQueueCursor = org.apache.activemq.broker.region.policy.VMPendingQueueMessageStoragePolicy

xaConnectionFactory = org.apache.activemq.spring.ActiveMQXAConnectionFactory
xaConnectionFactory.initMethod = afterPropertiesSet
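The generated mapping above uses plain `java.util.Properties` syntax with a few kinds of keys: an XML element name bound to a class (for example `levelDB = org.apache.activemq.store.leveldb.LevelDBPersistenceAdapter`), `<element>.<property>.propertyEditor` entries, and `<constructor signature>.parameterNames` entries that list constructor argument names in order. The sketch below shows one way to read those keys with the JDK alone. `XBeanMappingSketch` and its methods are hypothetical illustrations, not the loader that Apache XBean actually uses.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Properties;

/**
 * Hypothetical reader for the generated XBean mapping format shown above.
 * Illustration only; this is not Apache XBean's own implementation.
 */
public final class XBeanMappingSketch {

    private final Properties props;

    private XBeanMappingSketch(Properties props) {
        this.props = props;
    }

    /** Parses mapping text: '#' lines are comments, "key = value" lines are entries. */
    public static XBeanMappingSketch fromText(String mappingText) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(mappingText));
        } catch (IOException e) {
            // StringReader does no real I/O, but Properties.load declares IOException.
            throw new UncheckedIOException(e);
        }
        return new XBeanMappingSketch(props);
    }

    /** Class bound to an XML element name such as "levelDB", or null if unmapped. */
    public String classFor(String element) {
        return props.getProperty(element);
    }

    /** Property editor listed for element.property, or null if none is listed. */
    public String propertyEditorFor(String element, String property) {
        return props.getProperty(element + "." + property + ".propertyEditor");
    }

    /** Constructor argument names for a key such as "pkg.Type(java.lang.String)". */
    public List<String> parameterNamesFor(String constructorSignature) {
        String names = props.getProperty(constructorSignature + ".parameterNames");
        if (names == null || names.trim().isEmpty()) {
            return Collections.emptyList();
        }
        // The generated file stores the names space-separated, in argument order.
        return Arrays.asList(names.trim().split("\\s+"));
    }

    public static void main(String[] args) {
        XBeanMappingSketch mapping = fromText(
                "# beans\n"
                + "levelDB = org.apache.activemq.store.leveldb.LevelDBPersistenceAdapter\n"
                + "kahaDB.journalMaxFileLength.propertyEditor = org.apache.activemq.util.MemoryIntPropertyEditor\n"
                + "org.apache.activemq.command.ActiveMQQueue(java.lang.String).parameterNames = name\n");
        System.out.println(mapping.classFor("levelDB"));
        System.out.println(mapping.propertyEditorFor("kahaDB", "journalMaxFileLength"));
        System.out.println(mapping.parameterNamesFor("org.apache.activemq.command.ActiveMQQueue(java.lang.String)"));
    }
}
```

Keys such as `org.apache.activemq.security.AuthenticationUser(java.lang.String,java.lang.String,java.lang.String).parameterNames` contain no whitespace, `=` or `:`, so `Properties` reads them as single keys without any escaping.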

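Several entries above attach `org.apache.activemq.util.MemoryPropertyEditor` or `MemoryIntPropertyEditor` to size-like properties (for example `memoryUsage.limit` and `kahaDB.journalMaxFileLength`), presumably so that sizes can be written in a human-readable form such as `64 mb`. The Java sketch below illustrates that kind of conversion. `MemorySizeSketch` is hypothetical and is not the ActiveMQ implementation; the accepted suffixes (`k`/`kb`, `m`/`mb`, `g`/`gb`, case-insensitive) and the 1024-based multipliers are assumptions.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical memory-size parser ("64 mb" -> bytes). NOT the source of
 * org.apache.activemq.util.MemoryPropertyEditor; suffixes and 1024-based
 * multipliers are assumptions made for illustration.
 */
public final class MemorySizeSketch {

    // Digits, optional whitespace, optional unit suffix; matched case-insensitively.
    private static final Pattern SIZE =
            Pattern.compile("^\\s*(\\d+)\\s*(b|k|kb|m|mb|g|gb)?\\s*$", Pattern.CASE_INSENSITIVE);

    private MemorySizeSketch() {
    }

    /** Parses "512", "20 kb", "64 mb" or "1 gb" into a byte count. */
    public static long parseBytes(String text) {
        Matcher m = SIZE.matcher(text);
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a memory size: " + text);
        }
        long value = Long.parseLong(m.group(1));
        String unit = m.group(2) == null ? "b" : m.group(2).toLowerCase(Locale.ROOT);
        switch (unit) {
            case "k":
            case "kb":
                return Math.multiplyExact(value, 1024L);
            case "m":
            case "mb":
                return Math.multiplyExact(value, 1024L * 1024L);
            case "g":
            case "gb":
                return Math.multiplyExact(value, 1024L * 1024L * 1024L);
            default:
                return value; // no suffix or "b": plain bytes
        }
    }

    /** int-typed variant; throws ArithmeticException if the size overflows an int. */
    public static int parseIntBytes(String text) {
        return Math.toIntExact(parseBytes(text));
    }
}
```

Under these assumptions, `MemorySizeSketch.parseBytes("64 mb")` returns 67108864, and `parseIntBytes("4 gb")` throws `ArithmeticException` because the result does not fit in an `int`.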

@@ -0,0 +1,3 @@
#Generated by xbean-spring
#Tue Sep 25 10:20:04 EDT 2012
http\://activemq.apache.org/schema/core=org.apache.xbean.spring.context.v2.XBeanNamespaceHandler
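The three-line file above binds the `http://activemq.apache.org/schema/core` namespace to `org.apache.xbean.spring.context.v2.XBeanNamespaceHandler`, in what appears to be Spring's namespace-handler mapping format (conventionally `META-INF/spring.handlers`; the page does not show the file name, so that is an assumption). The colon is written as `\:` because in `.properties` syntax an unescaped `:` ends the key. The hypothetical `HandlerLookupSketch` below uses only `java.util.Properties` to show the difference.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/**
 * Hypothetical helper: looks up the handler class for a namespace URI in
 * properties-format text like the file above. Illustration only.
 */
public final class HandlerLookupSketch {

    private HandlerLookupSketch() {
    }

    public static String handlerFor(String handlersText, String namespaceUri) {
        Properties props = new Properties();
        try {
            // load() resolves the "\:" escape, so the stored key is "http://...".
            props.load(new StringReader(handlersText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(namespaceUri);
    }

    public static void main(String[] args) {
        String escaped = "#Generated by xbean-spring\n"
                + "http\\://activemq.apache.org/schema/core="
                + "org.apache.xbean.spring.context.v2.XBeanNamespaceHandler\n";
        // Prints the handler class name.
        System.out.println(handlerFor(escaped, "http://activemq.apache.org/schema/core"));

        // Without the escape the first ':' ends the key, so the key is just "http"
        // and a lookup by the full namespace URI prints null.
        String unescaped = "http://activemq.apache.org/schema/core="
                + "org.apache.xbean.spring.context.v2.XBeanNamespaceHandler\n";
        System.out.println(handlerFor(unescaped, "http://activemq.apache.org/schema/core"));
    }
}
```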


@@ -0,0 +1,434 @@
<?xml version="1.0" encoding="UTF-8"?>
<!--
Licensed to the Apache Software Foundation (ASF) under one or more
contributor license agreements. See the NOTICE file distributed with
this work for additional information regarding copyright ownership.
The ASF licenses this file to You under the Apache License, Version 2.0
(the "License"); you may not use this file except in compliance with
the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.
-->
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">

  <modelVersion>4.0.0</modelVersion>

  <parent>
    <groupId>org.apache.activemq</groupId>
    <artifactId>activemq-parent</artifactId>
    <version>5.7-SNAPSHOT</version>
  </parent>

  <artifactId>activemq-leveldb</artifactId>
  <packaging>jar</packaging>

  <name>ActiveMQ :: LevelDB</name>
  <description>ActiveMQ LevelDB based store</description>

  <dependencies>

    <!-- for scala support -->
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala-version}</version>
      <scope>compile</scope>
    </dependency>

    <dependency>
      <groupId>org.apache.activemq</groupId>
      <artifactId>activemq-core</artifactId>
      <version>5.7-SNAPSHOT</version>
      <scope>provided</scope>
    </dependency>

    <dependency>
      <groupId>org.fusesource.hawtbuf</groupId>
      <artifactId>hawtbuf-proto</artifactId>
      <version>${hawtbuf-version}</version>
    </dependency>

    <dependency>
      <groupId>org.fusesource.hawtdispatch</groupId>
      <artifactId>hawtdispatch-scala</artifactId>
      <version>${hawtdispatch-version}</version>
    </dependency>

    <dependency>
      <groupId>org.iq80.leveldb</groupId>
      <artifactId>leveldb</artifactId>
      <version>0.2</version>
    </dependency>

    <dependency>
      <groupId>org.fusesource.leveldbjni</groupId>
      <artifactId>leveldbjni-osx</artifactId>
      <version>1.3</version>
    </dependency>
    <dependency>
      <groupId>org.fusesource.leveldbjni</groupId>
      <artifactId>leveldbjni-linux32</artifactId>
      <version>1.3</version>
    </dependency>
    <dependency>
      <groupId>org.fusesource.leveldbjni</groupId>
      <artifactId>leveldbjni-linux64</artifactId>
      <version>1.3</version>
    </dependency>
    <dependency>
      <groupId>org.fusesource.leveldbjni</groupId>
      <artifactId>leveldbjni-win32</artifactId>
      <version>1.3</version>
    </dependency>
    <dependency>
      <groupId>org.fusesource.leveldbjni</groupId>
      <artifactId>leveldbjni-win64</artifactId>
      <version>1.3</version>
    </dependency>

||||


    <!-- For Optional Snappy Compression -->
    <dependency>
      <groupId>org.xerial.snappy</groupId>
      <artifactId>snappy-java</artifactId>
      <version>1.0.3</version>
    </dependency>

    <dependency>
      <groupId>org.iq80.snappy</groupId>
      <artifactId>snappy</artifactId>
      <version>0.2</version>
      <optional>true</optional>
    </dependency>

    <dependency>
      <groupId>org.codehaus.jackson</groupId>
      <artifactId>jackson-core-asl</artifactId>
      <version>${jackson-version}</version>
    </dependency>
    <dependency>
      <groupId>org.codehaus.jackson</groupId>
      <artifactId>jackson-mapper-asl</artifactId>
      <version>${jackson-version}</version>
    </dependency>

    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-core</artifactId>
      <version>${hadoop-version}</version>
      <exclusions>
        <!-- hadoop's transitive dependencies are such a pig -->
        <exclusion>
          <groupId>commons-cli</groupId>
          <artifactId>commons-cli</artifactId>
        </exclusion>
        <exclusion>
          <groupId>xmlenc</groupId>
          <artifactId>xmlenc</artifactId>
        </exclusion>
        <exclusion>
          <groupId>commons-codec</groupId>
          <artifactId>commons-codec</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.apache.commons</groupId>
          <artifactId>commons-math</artifactId>
        </exclusion>
        <exclusion>
          <groupId>commons-net</groupId>
          <artifactId>commons-net</artifactId>
        </exclusion>
        <exclusion>
          <groupId>commons-httpclient</groupId>
          <artifactId>commons-httpclient</artifactId>
        </exclusion>
        <exclusion>
          <groupId>tomcat</groupId>
          <artifactId>jasper-runtime</artifactId>
        </exclusion>
        <exclusion>
          <groupId>tomcat</groupId>
          <artifactId>jasper-compiler</artifactId>
        </exclusion>
        <exclusion>
          <groupId>commons-el</groupId>
          <artifactId>commons-el</artifactId>
        </exclusion>
        <exclusion>
          <groupId>net.java.dev.jets3t</groupId>
          <artifactId>jets3t</artifactId>
        </exclusion>
        <exclusion>
          <groupId>net.sf.kosmosfs</groupId>
          <artifactId>kfs</artifactId>
        </exclusion>
        <exclusion>
          <groupId>hsqldb</groupId>
          <artifactId>hsqldb</artifactId>
        </exclusion>
        <exclusion>
          <groupId>oro</groupId>
          <artifactId>oro</artifactId>
        </exclusion>
        <exclusion>
          <groupId>org.eclipse.jdt</groupId>
          <artifactId>core</artifactId>
        </exclusion>
      </exclusions>
    </dependency>

    <!-- Testing Dependencies -->
    <dependency>
      <groupId>org.apache.activemq</groupId>
      <artifactId>activemq-core</artifactId>
      <version>5.7-SNAPSHOT</version>
      <type>test-jar</type>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.activemq</groupId>
      <artifactId>activemq-console</artifactId>
      <version>5.7-SNAPSHOT</version>
      <scope>test</scope>
    </dependency>

    <!-- Hadoop Testing Deps -->
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-test</artifactId>
      <version>${hadoop-version}</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>commons-lang</groupId>
      <artifactId>commons-lang</artifactId>
      <version>2.6</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.mortbay.jetty</groupId>
      <artifactId>jetty</artifactId>
      <version>6.1.26</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.mortbay.jetty</groupId>
      <artifactId>jetty-util</artifactId>
      <version>6.1.26</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>tomcat</groupId>
      <artifactId>jasper-runtime</artifactId>
      <version>5.5.12</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>tomcat</groupId>
      <artifactId>jasper-compiler</artifactId>
      <version>5.5.12</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.mortbay.jetty</groupId>
      <artifactId>jsp-api-2.1</artifactId>
      <version>6.1.14</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.mortbay.jetty</groupId>
      <artifactId>jsp-2.1</artifactId>
      <version>6.1.14</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.commons</groupId>
      <artifactId>commons-math</artifactId>
      <version>2.2</version>
      <scope>test</scope>
    </dependency>

    <dependency>
      <groupId>org.scalatest</groupId>
      <artifactId>scalatest_2.9.1</artifactId>
      <version>${scalatest-version}</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <scope>test</scope>
    </dependency>

  </dependencies>

  <build>

    <plugins>
      <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <version>${scala-plugin-version}</version>
        <executions>
          <execution>
            <id>compile</id>
            <goals><goal>compile</goal></goals>
            <phase>compile</phase>
          </execution>
          <execution>
            <id>test-compile</id>
            <goals>
              <goal>testCompile</goal>
            </goals>
            <phase>test-compile</phase>
          </execution>
          <execution>
            <phase>process-resources</phase>
            <goals>
              <goal>compile</goal>
            </goals>
          </execution>
        </executions>

        <configuration>
          <jvmArgs>
            <jvmArg>-Xmx1024m</jvmArg>
            <jvmArg>-Xss8m</jvmArg>
          </jvmArgs>
          <scalaVersion>${scala-version}</scalaVersion>
          <args>
            <arg>-deprecation</arg>
          </args>
          <compilerPlugins>
            <compilerPlugin>
              <groupId>org.fusesource.jvmassert</groupId>
              <artifactId>jvmassert</artifactId>
              <version>1.1</version>
            </compilerPlugin>
          </compilerPlugins>
        </configuration>
      </plugin>

      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>

        <configuration>
          <!-- we must turn off the use of system class loader so our tests can find stuff - otherwise ScalaSupport compiler can't find stuff -->
          <useSystemClassLoader>false</useSystemClassLoader>
          <!--forkMode>pertest</forkMode-->
          <childDelegation>false</childDelegation>
          <useFile>true</useFile>
          <failIfNoTests>false</failIfNoTests>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.fusesource.hawtbuf</groupId>
        <artifactId>hawtbuf-protoc</artifactId>
        <version>${hawtbuf-version}</version>
        <configuration>
          <type>alt</type>
        </configuration>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.fusesource.mvnplugins</groupId>
        <artifactId>maven-uberize-plugin</artifactId>
        <version>1.14</version>
        <executions>
          <execution>
            <id>all</id>
            <phase>package</phase>
            <goals><goal>uberize</goal></goals>
          </execution>
        </executions>
        <configuration>
          <uberArtifactAttached>true</uberArtifactAttached>
          <uberClassifierName>uber</uberClassifierName>
          <artifactSet>
            <includes>
              <include>org.scala-lang:scala-library</include>
              <include>org.fusesource.hawtdispatch:hawtdispatch</include>
              <include>org.fusesource.hawtdispatch:hawtdispatch-scala</include>
              <include>org.fusesource.hawtbuf:hawtbuf</include>
              <include>org.fusesource.hawtbuf:hawtbuf-proto</include>

              <include>org.iq80.leveldb:leveldb-api</include>

              <!--
              <include>org.iq80.leveldb:leveldb</include>
              <include>org.xerial.snappy:snappy-java</include>
              <include>com.google.guava:guava</include>
              -->
              <include>org.xerial.snappy:snappy-java</include>

              <include>org.fusesource.leveldbjni:leveldbjni</include>
              <include>org.fusesource.leveldbjni:leveldbjni-osx</include>
              <include>org.fusesource.leveldbjni:leveldbjni-linux32</include>
              <include>org.fusesource.leveldbjni:leveldbjni-linux64</include>
              <include>org.fusesource.hawtjni:hawtjni-runtime</include>

              <!-- include the bits needed to access HDFS as a client -->
              <include>org.apache.hadoop:hadoop-core</include>
              <include>commons-configuration:commons-configuration</include>
              <include>org.codehaus.jackson:jackson-mapper-asl</include>
              <include>org.codehaus.jackson:jackson-core-asl</include>

            </includes>
          </artifactSet>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.apache.felix</groupId>
        <artifactId>maven-bundle-plugin</artifactId>
        <configuration>
          <classifier>bundle</classifier>
          <excludeDependencies />
          <instructions>
            <Bundle-SymbolicName>${project.groupId}.${project.artifactId}</Bundle-SymbolicName>
            <Fragment-Host>org.apache.activemq.activemq-core</Fragment-Host>
            <Export-Package>
              org.apache.activemq.leveldb*;version=${project.version};-noimport:=;-split-package:=merge-last,
            </Export-Package>
            <Embed-Dependency>*;inline=**;artifactId=
              hawtjni-runtime|hawtbuf|hawtbuf-proto|hawtdispatch|hawtdispatch-scala|scala-library|
              leveldb-api|leveldbjni|leveldbjni-osx|leveldbjni-linux32|leveldbjni-linux64|
              hadoop-core|commons-configuration|jackson-mapper-asl|jackson-core-asl|commons-lang</Embed-Dependency>
            <Embed-Transitive>true</Embed-Transitive>
            <Import-Package>*;resolution:=optional</Import-Package>
          </instructions>
        </configuration>
        <executions>
          <execution>
            <id>bundle</id>
            <phase>package</phase>
            <goals>
              <goal>bundle</goal>
            </goals>
          </execution>
        </executions>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>
        <configuration>
          <forkMode>always</forkMode>
          <excludes>
            <exclude>**/EnqueueRateScenariosTest.*</exclude>
          </excludes>
        </configuration>
      </plugin>
    </plugins>
  </build>
</project>

@@ -0,0 +1,95 @@
# The LevelDB Store

## Overview

The LevelDB Store is a message store implementation that can be used in ActiveMQ messaging servers.

## LevelDB vs KahaDB

How the LevelDB Store improves on the default KahaDB store:

* It maintains fewer index entries per message than KahaDB, which gives it higher persistent throughput.
* Faster recovery when a broker restarts.
* Since the broker tends to write and read queue entries sequentially, the LevelDB-based index provides much better performance than the B-Tree based indexes of KahaDB, which increases throughput.
* Unlike the KahaDB indexes, the LevelDB indexes support concurrent read access, which further improves read throughput.
* Pauseless data log file garbage collection cycles.
* It uses fewer read I/O operations to load stored messages.
* If a message is copied to multiple queues (which typically happens if you're using virtual topics with multiple consumers), LevelDB journals the message payload only once, whereas KahaDB journals it multiple times.
* It exposes its status via JMX for monitoring.
* It supports replication to provide High Availability.
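
The sequential-access point above can be illustrated with a small model. LevelDB keeps keys in sorted byte order, so if each queue entry's key encodes (collection, sequence) such that byte order matches numeric order, consecutive entries of one queue sit next to each other and can be read with a single forward range scan. This is a sketch under stated assumptions, not the store's code: the 16-byte big-endian key layout and the `OrderedKeys` class are hypothetical (the store's actual key format may differ), and a `TreeMap` with an unsigned byte comparator stands in for LevelDB.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Hypothetical illustration: order-preserving keys for queue entries.
final class OrderedKeys {

    // Encode (collectionKey, sequence) as 16 big-endian bytes. For non-negative
    // values, unsigned lexicographic byte order then matches numeric order.
    static byte[] encode(long collectionKey, long sequence) {
        return ByteBuffer.allocate(16).putLong(collectionKey).putLong(sequence).array();
    }

    static long sequenceOf(byte[] key) {
        return ByteBuffer.wrap(key).getLong(8);
    }

    // Stand-in for LevelDB's default comparator: unsigned lexicographic order.
    static int compareUnsigned(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int c = (a[i] & 0xff) - (b[i] & 0xff);
            if (c != 0) {
                return c;
            }
        }
        return a.length - b.length;
    }

    // Read all entries of one collection with a single forward range scan.
    static List<Long> scanCollection(TreeMap<byte[], String> index, long collectionKey) {
        byte[] from = encode(collectionKey, 0L);
        byte[] to = encode(collectionKey + 1, 0L);
        List<Long> sequences = new ArrayList<>();
        for (byte[] key : index.subMap(from, true, to, false).keySet()) {
            sequences.add(sequenceOf(key));
        }
        return sequences;
    }

    public static void main(String[] args) {
        TreeMap<byte[], String> index = new TreeMap<>(OrderedKeys::compareUnsigned);
        // Insert out of order; the sorted index still groups and orders them.
        index.put(encode(2, 1), "q2-m1");
        index.put(encode(1, 300), "q1-m300");
        index.put(encode(1, 200), "q1-m200");
        index.put(encode(1, 2), "q1-m2");
        index.put(encode(1, 1), "q1-m1");
        System.out.println(scanCollection(index, 1)); // [1, 2, 200, 300]
    }
}
```

The comparator must be unsigned: sequence 200 ends in byte `0xC8`, which is negative as a Java `byte` and would otherwise sort before sequence 1.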

|

||||

|

||||

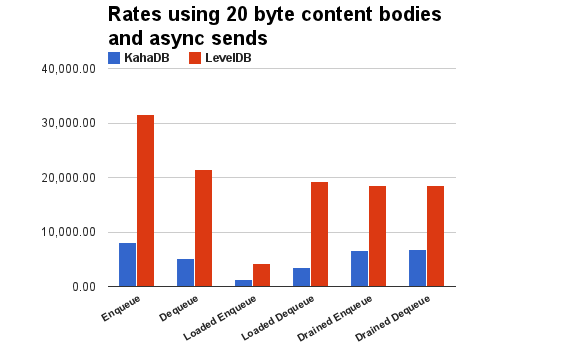

See the following chart to get an idea on how much better you can expect the LevelDB store to perform vs the KahaDB store:

|

||||

|

||||

|

||||

|

||||


## How to Use with ActiveMQ

Update the broker configuration file and change the `persistenceAdapter` element settings so that the broker uses the LevelDB store, as in the following Spring XML configuration example:

    <persistenceAdapter>
      <levelDB directory="${activemq.base}/data/leveldb" logSize="107374182"/>
    </persistenceAdapter>
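
The `logSize` value in the example is given in bytes. Assuming it sets the maximum size of each journal log file before a new one is started, the example value works out to one tenth of a GiB:

```latex
\texttt{logSize} = 107374182 = \left\lfloor \frac{2^{30}}{10} \right\rfloor \ \text{bytes} \approx 102.4\ \text{MiB}
```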


### Configuration / Property Reference

*TODO*

### JMX Attribute and Operation Reference

*TODO*

## Known Limitations

* XA transactions are not supported yet.
* The store does not perform any duplicate detection of messages.

## Built-in High Availability Support

You can also use a High Availability (HA) version of the LevelDB store, which works with Hadoop-based file systems to achieve HA of your stored messages.

**Q:** What are the requirements?
**A:** An existing Hadoop 1.0.0 cluster.

**Q:** How does it work during the normal operating cycle?
**A:** It uses HDFS to store a highly available copy of the local LevelDB storage files. As local log files are written, it also maintains a mirror copy on HDFS. If you have sync enabled on the store, an HDFS file sync is performed instead of a local disk sync. When the index is checkpointed, any LevelDB .sst files that have not yet been uploaded are uploaded to HDFS.
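
The checkpoint step can be modeled as a set difference: LevelDB .sst files are immutable once written, so only file names that have not been uploaded before need to be copied. This is a minimal sketch, not the store's code; `SstUploader` and `onCheckpoint` are hypothetical names, and the actual HDFS copy is left as a comment.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical sketch of the "upload new .sst files on checkpoint" step.
final class SstUploader {

    // Names of .sst files that have already been copied to the DFS.
    private final Set<String> uploaded = new TreeSet<>();

    // Return (and remember) the .sst files that still need to be uploaded.
    // .sst files never change after LevelDB writes them, so a name that was
    // uploaded once never has to be copied again.
    List<String> onCheckpoint(Collection<String> localFiles) {
        List<String> toUpload = new ArrayList<>();
        for (String name : new TreeSet<>(localFiles)) {
            if (name.endsWith(".sst") && !uploaded.contains(name)) {
                toUpload.add(name); // a real store would copy the file to HDFS here
            }
        }
        uploaded.addAll(toUpload);
        return toUpload;
    }

    public static void main(String[] args) {
        SstUploader uploader = new SstUploader();
        // [000005.sst, 000007.sst]
        System.out.println(uploader.onCheckpoint(Arrays.asList("000005.sst", "000007.sst", "CURRENT")));
        // [000009.sst]
        System.out.println(uploader.onCheckpoint(Arrays.asList("000005.sst", "000007.sst", "000009.sst")));
    }
}
```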


**Q:** What happens when a broker fails and we start up a new slave to take over?
**A:** The slave downloads from HDFS the log files and the .sst files associated with the latest uploaded index. Then normal LevelDB store recovery kicks in, which updates the index using the log files.
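
Conceptually, this recovery starts from the downloaded index and re-applies every journal record written after the point that index covers. The sketch below is a simplified model under that assumption; `Recovery`, `LogRecord`, and the string keys are hypothetical, and a delete (such as a message acknowledgement) is modeled as a record with a null value.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of failover recovery: index snapshot plus log replay.
final class Recovery {

    // One journal record: where it sits in the log and the index update it
    // implies. A null value models a delete (e.g. a message acknowledgement).
    static final class LogRecord {
        final long position;
        final String key;
        final String value;

        LogRecord(long position, String key, String value) {
            this.position = position;
            this.key = key;
            this.value = value;
        }
    }

    // snapshotEnd is the first log position NOT reflected in the snapshot.
    static Map<String, String> recover(Map<String, String> snapshot, long snapshotEnd,
                                       List<LogRecord> log) {
        Map<String, String> index = new TreeMap<>(snapshot);
        for (LogRecord r : log) {
            if (r.position < snapshotEnd) {
                continue; // already covered by the downloaded index
            }
            if (r.value == null) {
                index.remove(r.key);
            } else {
                index.put(r.key, r.value);
            }
        }
        return index;
    }

    public static void main(String[] args) {
        Map<String, String> snapshot = new TreeMap<>();
        snapshot.put("q1:1", "m1");
        List<LogRecord> log = Arrays.asList(
                new LogRecord(0, "q1:1", "m1"),   // before snapshotEnd: skipped
                new LogRecord(10, "q1:2", "m2"),  // replayed
                new LogRecord(20, "q1:1", null)); // replayed as a delete
        System.out.println(recover(snapshot, 10, log)); // {q1:2=m2}
    }
}
```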


**Q:** How do I use the HA version of the LevelDB store?
**A:** Update your `activemq.xml` to use a `persistenceAdapter` setting similar to the following:

    <persistenceAdapter>
      <bean xmlns="http://www.springframework.org/schema/beans"
          class="org.apache.activemq.leveldb.HALevelDBStore">

        <!-- File system URL to replicate to -->
        <property name="dfsUrl" value="hdfs://hadoop-name-node"/>
        <!-- Directory in the file system to store the data in -->
        <property name="dfsDirectory" value="activemq"/>

        <property name="directory" value="${activemq.base}/data/leveldb"/>
        <property name="logSize" value="107374182"/>
        <!-- <property name="sync" value="false"/> -->
      </bean>
    </persistenceAdapter>

Notice that the implementation class name changes to `HALevelDBStore`.
Instead of setting the `dfsUrl` property, you can also load an existing Hadoop configuration file if one is available on your system, for example:

    <property name="dfsConfig" value="/opt/hadoop-1.0.0/conf/core-site.xml"/>

**Q:** Who handles starting up the slave?
**A:** You do. :) This implementation assumes that master startup/elections are performed externally and that two brokers are never running against the same HDFS file path. In practice, this means you need something like ZooKeeper to control starting new brokers to take over failed masters.

**Q:** Can this run against something other than HDFS?
**A:** It should be able to run with any Hadoop-supported file system, such as CloudStore, S3, MapR, NFS, etc. (well, at least in theory; I've only tested against HDFS).

**Q:** Can 'X' performance be optimized?
**A:** There are a bunch of ways to improve the performance of many of the things the current version of the store is doing. For example: aggregating the .sst files into an archive to make more efficient use of HDFS; downloading concurrently to improve recovery performance; lazily downloading the oldest log files to make recovery faster; writing to HDFS asynchronously to avoid blocking local updates; and running brokers in a warm 'standby' mode that keeps downloading new log updates and applying index updates from the master as they get uploaded to HDFS, for faster failovers.

**Q:** Does the broker fail if HDFS fails?
**A:** Currently, yes. But it should be possible to make the master resilient to HDFS failures.
@@ -16,7 +16,7 @@
 */
package org.apache.activemq.store.leveldb;

-import org.fusesource.mq.leveldb.LevelDBStore;
+import org.apache.activemq.leveldb.LevelDBStore;


/**
@@ -0,0 +1,56 @@
// Licensed to the Apache Software Foundation (ASF) under one or more
// contributor license agreements. See the NOTICE file distributed with
// this work for additional information regarding copyright ownership.
// The ASF licenses this file to You under the Apache License, Version 2.0
// (the "License"); you may not use this file except in compliance with
// the License. You may obtain a copy of the License at
//
// http://www.apache.org/licenses/LICENSE-2.0
//
// Unless required by applicable law or agreed to in writing, software
// distributed under the License is distributed on an "AS IS" BASIS,
// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
// See the License for the specific language governing permissions and
// limitations under the License.
//
package org.apache.activemq.leveldb.record;

option java_multiple_files = true;

//
// We create a collection record for each
// transaction, queue, and topic.
//
message CollectionKey {
  required int64 key = 1;
}
message CollectionRecord {
  optional int64 key = 1;
  optional int32 type = 2;
  optional bytes meta = 3 [java_override_type = "Buffer"];
}

//
// We create an entry record for each message, subscription,
// and subscription position.
//
message EntryKey {
  required int64 collection_key = 1;
  required bytes entry_key = 2 [java_override_type = "Buffer"];
}
message EntryRecord {
  optional int64 collection_key = 1;
  optional bytes entry_key = 2 [java_override_type = "Buffer"];
  optional int64 value_location = 3;
  optional int32 value_length = 4;
  optional bytes value = 5 [java_override_type = "Buffer"];
  optional bytes meta = 6 [java_override_type = "Buffer"];
}

message SubscriptionRecord {
  optional int64 topic_key = 1;
  optional string client_id = 2;
  optional string subscription_name = 3;
  optional string selector = 4;
  optional string destination_name = 5;
}
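
The `EntryRecord` fields `value_location` and `value_length` suggest that an entry can point at a payload stored in the journal rather than carrying it inline in `value`, which is what would let a message copied to several queues be journaled only once (the virtual-topic point in the readme). The Java sketch below models that reading of the schema; it is an assumption, `SharedPayload`, `Entry`, `append`, and `enqueue` are hypothetical names, and a `ByteArrayOutputStream` stands in for the journal.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: one journal write shared by several index entries.
final class SharedPayload {

    // Simplified stand-in for EntryRecord: owning collection plus the
    // journal location and length of the payload.
    static final class Entry {
        final long collectionKey;
        final long valueLocation;
        final int valueLength;

        Entry(long collectionKey, long valueLocation, int valueLength) {
            this.collectionKey = collectionKey;
            this.valueLocation = valueLocation;
            this.valueLength = valueLength;
        }
    }

    private final ByteArrayOutputStream journal = new ByteArrayOutputStream();

    // Append a payload once and return its starting offset in the journal.
    long append(byte[] payload) {
        long location = journal.size();
        journal.write(payload, 0, payload.length);
        return location;
    }

    // Add the same message to several collections (e.g. virtual topic queues).
    List<Entry> enqueue(byte[] payload, long... collectionKeys) {
        long location = append(payload);
        List<Entry> entries = new ArrayList<>();
        for (long key : collectionKeys) {
            entries.add(new Entry(key, location, payload.length));
        }
        return entries;
    }

    // Resolve an entry back to its payload bytes.
    byte[] read(Entry e) {
        int from = (int) e.valueLocation;
        return Arrays.copyOfRange(journal.toByteArray(), from, from + e.valueLength);
    }

    int journalSize() {
        return journal.size();
    }

    public static void main(String[] args) {
        SharedPayload store = new SharedPayload();
        byte[] msg = "hello".getBytes(StandardCharsets.UTF_8);
        List<Entry> entries = store.enqueue(msg, 1, 2, 3);
        // prints "3 entries, 5 journal bytes"
        System.out.println(entries.size() + " entries, " + store.journalSize() + " journal bytes");
        System.out.println(new String(store.read(entries.get(2)), StandardCharsets.UTF_8)); // hello
    }
}
```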


@@ -0,0 +1,139 @@
/**
 * Licensed to the Apache Software Foundation (ASF) under one or more
 * contributor license agreements. See the NOTICE file distributed with
 * this work for additional information regarding copyright ownership.
 * The ASF licenses this file to You under the Apache License, Version 2.0
 * (the "License"); you may not use this file except in compliance with
 * the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
package org.apache.activemq

import java.nio.ByteBuffer
import org.fusesource.hawtbuf.Buffer
import org.xerial.snappy.{Snappy => Xerial}
import org.iq80.snappy.{Snappy => Iq80}

/**
 * <p>
 * A Snappy abstraction which attempts to use the iq80 implementation and falls back
 * to the xerial Snappy implementation if it cannot be loaded. You can change the
 * load order by setting the 'leveldb.snappy' system property. Example:
 *
 * <code>
 * -Dleveldb.snappy=xerial,iq80
 * </code>
 *
 * The system property can also be configured with the name of a class which
 * implements the SnappyTrait trait.
 * </p>
 *
 * @author <a href="http://hiramchirino.com">Hiram Chirino</a>
 */
package object leveldb {

  final val Snappy = {
    var attempt:SnappyTrait = null
    System.getProperty("leveldb.snappy", "iq80,xerial").split(",").foreach { x =>
      if( attempt==null ) {
        try {
          var name = x.trim();
          name = name.toLowerCase match {
            case "xerial" => "org.apache.activemq.leveldb.XerialSnappy"
            case "iq80" => "org.apache.activemq.leveldb.IQ80Snappy"
            case _ => name
          }
          attempt = Thread.currentThread().getContextClassLoader().loadClass(name).newInstance().asInstanceOf[SnappyTrait];
          // probe the implementation: fails here if, e.g., a native library is missing
          attempt.compress("test")
        } catch {
          case e: Throwable =>
            // could not load or probe this implementation; try the next candidate
            attempt = null
        }
      }
    }
    attempt
  }


  trait SnappyTrait {

    def uncompressed_length(input: Buffer):Int
    def uncompress(input: Buffer, output:Buffer): Int

    def max_compressed_length(length: Int): Int
    def compress(input: Buffer, output: Buffer): Int

    def compress(input: Buffer):Buffer = {
      val compressed = new Buffer(max_compressed_length(input.length))
      compressed.length = compress(input, compressed)
      compressed
    }

    def compress(text: String): Buffer = {
      val uncompressed = new Buffer(text.getBytes("UTF-8"))
      val compressed = new Buffer(max_compressed_length(uncompressed.length))
      compressed.length = compress(uncompressed, compressed)
      return compressed
    }

    def uncompress(input: Buffer):Buffer = {
      val uncompressed = new Buffer(uncompressed_length(input))
      uncompressed.length = uncompress(input, uncompressed)
      uncompressed
    }

    def uncompress(compressed: ByteBuffer, uncompressed: ByteBuffer): Int = {
      // view the remaining compressed bytes without copying when possible
      val input = if (compressed.hasArray) {
        new Buffer(compressed.array, compressed.arrayOffset + compressed.position, compressed.remaining)
      } else {
        val t = new Buffer(compressed.remaining)
        compressed.mark
        compressed.get(t.data)
        compressed.reset
        t
      }

      // decompress straight into a heap buffer, else into a temporary Buffer
      val output = if (uncompressed.hasArray) {
        new Buffer(uncompressed.array, uncompressed.arrayOffset + uncompressed.position, uncompressed.capacity()-uncompressed.position)
      } else {
        new Buffer(uncompressed_length(input))
      }

      output.length = uncompress(input, output)

      if (uncompressed.hasArray) {
        uncompressed.limit(uncompressed.position + output.length)
      } else {
        val p = uncompressed.position
        uncompressed.limit(uncompressed.capacity)
        uncompressed.put(output.data, output.offset, output.length)
        uncompressed.flip.position(p)
      }
      return output.length
    }
  }
}

package leveldb {
  class XerialSnappy extends SnappyTrait {
    override def uncompress(compressed: ByteBuffer, uncompressed: ByteBuffer) = Xerial.uncompress(compressed, uncompressed)
    def uncompressed_length(input: Buffer) = Xerial.uncompressedLength(input.data, input.offset, input.length)
    def uncompress(input: Buffer, output: Buffer) = Xerial.uncompress(input.data, input.offset, input.length, output.data, output.offset)
    def max_compressed_length(length: Int) = Xerial.maxCompressedLength(length)
    def compress(input: Buffer, output: Buffer) = Xerial.compress(input.data, input.offset, input.length, output.data, output.offset)
    override def compress(text: String) = new Buffer(Xerial.compress(text))
  }

  class IQ80Snappy extends SnappyTrait {
    def uncompressed_length(input: Buffer) = Iq80.getUncompressedLength(input.data, input.offset)
    def uncompress(input: Buffer, output: Buffer): Int = Iq80.uncompress(input.data, input.offset, input.length, output.data, output.offset)
    def compress(input: Buffer, output: Buffer): Int = Iq80.compress(input.data, input.offset, input.length, output.data, output.offset)
    def max_compressed_length(length: Int) = Iq80.maxCompressedLength(length)
  }
}
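
The load-order logic of the `Snappy` value above can be sketched in Java as: split the comma-separated candidate list, instantiate each class by name, probe it with a test compression, and keep the first one that works. This is a hypothetical analogue, not part of the module: `Codec`, `DeflateCodec`, `BrokenCodec`, and `CodecLoader` are made-up names, `java.util.zip.Deflater` stands in for a Snappy implementation, and `BrokenCodec` simulates one whose native library is missing. Unlike the Scala code, it loads classes with `Class.forName` rather than the thread context class loader.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.Deflater;

// Hypothetical Java analogue of the 'leveldb.snappy' selection logic.
interface Codec {
    byte[] compress(byte[] input);
}

// Working candidate: java.util.zip's Deflater stands in for a real Snappy codec.
final class DeflateCodec implements Codec {
    public byte[] compress(byte[] input) {
        Deflater deflater = new Deflater();
        deflater.setInput(input);
        deflater.finish();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[1024];
        while (!deflater.finished()) {
            int n = deflater.deflate(buf);
            out.write(buf, 0, n);
        }
        deflater.end();
        return out.toByteArray();
    }
}

// Failing candidate: simulates an implementation whose native library is absent.
final class BrokenCodec implements Codec {
    public byte[] compress(byte[] input) {
        throw new UnsatisfiedLinkError("native library not available");
    }
}

final class CodecLoader {
    // Try each comma-separated class name in order; return the first codec that
    // loads and passes a probe compression, or null if none does.
    static Codec load(String order) {
        for (String candidate : order.split(",")) {
            String name = candidate.trim();
            try {
                Codec codec = (Codec) Class.forName(name).getDeclaredConstructor().newInstance();
                codec.compress("test".getBytes(StandardCharsets.UTF_8)); // probe
                return codec;
            } catch (Throwable t) {
                // Class missing, not a Codec, or probe failed (including linkage
                // errors from native code): fall through to the next candidate.
            }
        }
        return null;
    }

    public static void main(String[] args) {
        String order = BrokenCodec.class.getName() + "," + DeflateCodec.class.getName();
        Codec codec = load(order);
        System.out.println(codec == null ? "none" : codec.getClass().getSimpleName()); // DeflateCodec
    }
}
```

Catching `Throwable` is normally discouraged, but it mirrors the Scala `catch`, which has to survive `LinkageError`s raised when a native implementation cannot be loaded.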


@@ -0,0 +1,735 @@
/**
 * Licensed to the Apache Software Foundation (ASF) under one or more
 * contributor license agreements. See the NOTICE file distributed with
 * this work for additional information regarding copyright ownership.
 * The ASF licenses this file to You under the Apache License, Version 2.0
 * (the "License"); you may not use this file except in compliance with
 * the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and