Aggregations: Renaming reducers to Pipeline Aggregators

@ -23,7 +23,7 @@ it is often easier to break them into two main families:

|

|||||||

<<search-aggregations-metrics, _Metric_>>::

|

<<search-aggregations-metrics, _Metric_>>::

|

||||||

Aggregations that keep track and compute metrics over a set of documents.

|

Aggregations that keep track and compute metrics over a set of documents.

|

||||||

|

|

||||||

<<search-aggregations-reducer, _Reducer_>>::

|

<<search-aggregations-pipeline, _Pipeline_>>::

|

||||||

Aggregations that aggregate the output of other aggregations and their associated metrics

|

Aggregations that aggregate the output of other aggregations and their associated metrics

|

||||||

|

|

||||||

The interesting part comes next. Since each bucket effectively defines a document set (all documents belonging to

|

The interesting part comes next. Since each bucket effectively defines a document set (all documents belonging to

|

||||||

@ -100,6 +100,6 @@ include::aggregations/metrics.asciidoc[]

|

|||||||

|

|

||||||

include::aggregations/bucket.asciidoc[]

|

include::aggregations/bucket.asciidoc[]

|

||||||

|

|

||||||

include::aggregations/reducer.asciidoc[]

|

include::aggregations/pipeline.asciidoc[]

|

||||||

|

|

||||||

include::aggregations/misc.asciidoc[]

|

include::aggregations/misc.asciidoc[]

|

||||||

|

|||||||

@ -1,39 +1,39 @@

|

|||||||

[[search-aggregations-reducer]]

|

[[search-aggregations-pipeline]]

|

||||||

|

|

||||||

== Reducer Aggregations

|

== Pipeline Aggregations

|

||||||

|

|

||||||

coming[2.0.0]

|

coming[2.0.0]

|

||||||

|

|

||||||

experimental[]

|

experimental[]

|

||||||

|

|

||||||

Reducer aggregations work on the outputs produced from other aggregations rather than from document sets, adding

|

Pipeline aggregations work on the outputs produced from other aggregations rather than from document sets, adding

|

||||||

information to the output tree. There are many different types of reducer, each computing different information from

|

information to the output tree. There are many different types of pipeline aggregation, each computing different information from

|

||||||

other aggregations, but these types can be broken down into two families:

|

other aggregations, but these types can be broken down into two families:

|

||||||

|

|

||||||

_Parent_::

|

_Parent_::

|

||||||

A family of reducer aggregations that is provided with the output of its parent aggregation and is able

|

A family of pipeline aggregations that is provided with the output of its parent aggregation and is able

|

||||||

to compute new buckets or new aggregations to add to existing buckets.

|

to compute new buckets or new aggregations to add to existing buckets.

|

||||||

|

|

||||||

_Sibling_::

|

_Sibling_::

|

||||||

Reducer aggregations that are provided with the output of a sibling aggregation and are able to compute a

|

Pipeline aggregations that are provided with the output of a sibling aggregation and are able to compute a

|

||||||

new aggregation which will be at the same level as the sibling aggregation.

|

new aggregation which will be at the same level as the sibling aggregation.

|

||||||

|

|

||||||

Reducer aggregations can reference the aggregations they need to perform their computation by using the `buckets_paths`

|

Pipeline aggregations can reference the aggregations they need to perform their computation by using the `buckets_paths`

|

||||||

parameter to indicate the paths to the required metrics. The syntax for defining these paths can be found in the

|

parameter to indicate the paths to the required metrics. The syntax for defining these paths can be found in the

|

||||||

<<bucket-path-syntax, `buckets_path` Syntax>> section below.

|

<<bucket-path-syntax, `buckets_path` Syntax>> section below.

|

||||||

|

|

||||||

Reducer aggregations cannot have sub-aggregations but, depending on the type, they can reference another reducer in the `buckets_path`

|

Pipeline aggregations cannot have sub-aggregations but, depending on the type, they can reference another pipeline in the `buckets_path`

|

||||||

allowing reducers to be chained. For example, you can chain together two derivatives to calculate the second derivative

|

allowing pipeline aggregations to be chained. For example, you can chain together two derivatives to calculate the second derivative

|

||||||

(e.g. a derivative of a derivative).

|

(e.g. a derivative of a derivative).

|

||||||

|

|

||||||

NOTE: Because reducer aggregations only add to the output, when chaining reducer aggregations the output of each reducer will be

|

NOTE: Because pipeline aggregations only add to the output, when chaining pipeline aggregations the output of each pipeline aggregation

|

||||||

included in the final output.

|

will be included in the final output.

|

||||||

|

|

||||||
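To picture the chaining described above outside Elasticsearch, here is a minimal Java sketch. It assumes a derivative is simply the difference between consecutive bucket values, with `null` where no previous value exists. The names `DerivativeChainSketch` and `derivative` are hypothetical and are not part of the Elasticsearch API.

```java
import java.util.Arrays;

// Hypothetical illustration only; this is not Elasticsearch code.
class DerivativeChainSketch {

    // First difference of a series; null marks "no value" (for example the first bucket).
    static Double[] derivative(Double[] values) {
        Double[] out = new Double[values.length];
        for (int i = 1; i < values.length; i++) {
            if (values[i] != null && values[i - 1] != null) {
                out[i] = values[i] - values[i - 1];
            }
        }
        return out;
    }

    public static void main(String[] args) {
        Double[] sales = {10.0, 30.0, 60.0, 100.0}; // made-up monthly totals
        Double[] first = derivative(sales);          // derivative of the metric
        Double[] second = derivative(first);         // derivative of the derivative
        // Both series are kept, mirroring how each chained output stays in the result.
        System.out.println(Arrays.toString(first));
        System.out.println(Arrays.toString(second));
    }
}
```

The second call takes the output of the first as its input, which is the same relationship a chained `buckets_path` expresses.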

[[bucket-path-syntax]]

|

[[bucket-path-syntax]]

|

||||||

[float]

|

[float]

|

||||||

=== `buckets_path` Syntax

|

=== `buckets_path` Syntax

|

||||||

|

|

||||||

Most reducers require another aggregation as their input. The input aggregation is defined via the `buckets_path`

|

Most pipeline aggregations require another aggregation as their input. The input aggregation is defined via the `buckets_path`

|

||||||

parameter, which follows a specific format:

|

parameter, which follows a specific format:

|

||||||

|

|

||||||

--------------------------------------------------

|

--------------------------------------------------

|

||||||

@ -47,7 +47,7 @@ PATH := <AGG_NAME>[<AGG_SEPARATOR><AGG_NAME>]*[<METRIC_SEPARATOR

|

|||||||

For example, the path `"my_bucket>my_stats.avg"` will path to the `avg` value in the `"my_stats"` metric, which is

|

For example, the path `"my_bucket>my_stats.avg"` will path to the `avg` value in the `"my_stats"` metric, which is

|

||||||

contained in the `"my_bucket"` bucket aggregation.

|

contained in the `"my_bucket"` bucket aggregation.

|

||||||

|

|

||||||
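As a rough illustration of how a path such as `"my_bucket>my_stats.avg"` breaks apart, the following Java sketch splits a path into aggregation names and an optional metric name. The grammar line is truncated in this view, so the separators used here (`>` between aggregation names, `.` before the metric) are inferred from the example only. `BucketsPathSketch` and `ParsedPath` are hypothetical names, not Elasticsearch classes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper for illustration; not the Elasticsearch parser.
class BucketsPathSketch {

    // Aggregation names along the path, plus an optional metric (null when absent).
    static final class ParsedPath {
        final List<String> aggNames;
        final String metric;

        ParsedPath(List<String> aggNames, String metric) {
            this.aggNames = aggNames;
            this.metric = metric;
        }
    }

    static ParsedPath parse(String path) {
        if (path == null || path.isEmpty()) {
            throw new IllegalArgumentException("path must not be empty");
        }
        // Assumed aggregation separator: '>'
        List<String> names = new ArrayList<>(Arrays.asList(path.split(">")));
        if (names.isEmpty()) {
            throw new IllegalArgumentException("path has no aggregation names: " + path);
        }
        // Assumed metric separator: '.', looked for in the last element only
        String last = names.get(names.size() - 1);
        String metric = null;
        int dot = last.indexOf('.');
        if (dot >= 0) {
            metric = last.substring(dot + 1);
            names.set(names.size() - 1, last.substring(0, dot));
        }
        return new ParsedPath(names, metric);
    }

    public static void main(String[] args) {
        ParsedPath p = parse("my_bucket>my_stats.avg");
        System.out.println(p.aggNames + " metric=" + p.metric);
    }
}
```

A path with no metric part, such as `"the_sum"`, yields a single aggregation name and a `null` metric in this sketch.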

Paths are relative from the position of the reducer; they are not absolute paths, and the path cannot go back "up" the

|

Paths are relative from the position of the pipeline aggregation; they are not absolute paths, and the path cannot go back "up" the

|

||||||

aggregation tree. For example, this moving average is embedded inside a date_histogram and refers to a "sibling"

|

aggregation tree. For example, this moving average is embedded inside a date_histogram and refers to a "sibling"

|

||||||

metric `"the_sum"`:

|

metric `"the_sum"`:

|

||||||

|

|

||||||

@ -73,7 +73,7 @@ metric `"the_sum"`:

|

|||||||

<1> The metric is called `"the_sum"`

|

<1> The metric is called `"the_sum"`

|

||||||

<2> The `buckets_path` refers to the metric via a relative path `"the_sum"`

|

<2> The `buckets_path` refers to the metric via a relative path `"the_sum"`

|

||||||

|

|

||||||

`buckets_path` is also used for Sibling reducer aggregations, where the aggregation is "next" to a series of buckets

|

`buckets_path` is also used for Sibling pipeline aggregations, where the aggregation is "next" to a series of buckets

|

||||||

instead of embedded "inside" them. For example, the `max_bucket` aggregation uses the `buckets_path` to specify

|

instead of embedded "inside" them. For example, the `max_bucket` aggregation uses the `buckets_path` to specify

|

||||||

a metric embedded inside a sibling aggregation:

|

a metric embedded inside a sibling aggregation:

|

||||||

|

|

||||||

@ -109,7 +109,7 @@ a metric embedded inside a sibling aggregation:

|

|||||||

==== Special Paths

|

==== Special Paths

|

||||||

|

|

||||||

Instead of pathing to a metric, `buckets_path` can use a special `"_count"` path. This instructs

|

Instead of pathing to a metric, `buckets_path` can use a special `"_count"` path. This instructs

|

||||||

the reducer to use the document count as its input. For example, a moving average can be calculated on the document

|

the pipeline aggregation to use the document count as its input. For example, a moving average can be calculated on the document

|

||||||

count of each bucket, instead of a specific metric:

|

count of each bucket, instead of a specific metric:

|

||||||

|

|

||||||

[source,js]

|

[source,js]

|

||||||

@ -141,7 +141,7 @@ There are a couple of reasons why the data output by the enclosing histogram may

|

|||||||

on the enclosing histogram or with a query matching only a small number of documents)

|

on the enclosing histogram or with a query matching only a small number of documents)

|

||||||

|

|

||||||

Where there is no data available in a bucket for a given metric it presents a problem for calculating the derivative value for both

|

Where there is no data available in a bucket for a given metric it presents a problem for calculating the derivative value for both

|

||||||

the current bucket and the next bucket. The derivative reducer aggregation has a `gap policy` parameter to define what the behavior

|

the current bucket and the next bucket. The derivative pipeline aggregation has a `gap policy` parameter to define what the behavior

|

||||||

should be when a gap in the data is found. There are currently two options for controlling the gap policy:

|

should be when a gap in the data is found. There are currently two options for controlling the gap policy:

|

||||||

|

|

||||||

_skip_::

|

_skip_::

|

||||||

@ -154,9 +154,9 @@ _insert_zeros_::

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

include::reducer/avg-bucket-aggregation.asciidoc[]

|

include::pipeline/avg-bucket-aggregation.asciidoc[]

|

||||||

include::reducer/derivative-aggregation.asciidoc[]

|

include::pipeline/derivative-aggregation.asciidoc[]

|

||||||

include::reducer/max-bucket-aggregation.asciidoc[]

|

include::pipeline/max-bucket-aggregation.asciidoc[]

|

||||||

include::reducer/min-bucket-aggregation.asciidoc[]

|

include::pipeline/min-bucket-aggregation.asciidoc[]

|

||||||

include::reducer/sum-bucket-aggregation.asciidoc[]

|

include::pipeline/sum-bucket-aggregation.asciidoc[]

|

||||||

include::reducer/movavg-aggregation.asciidoc[]

|

include::pipeline/movavg-aggregation.asciidoc[]

|

||||||

@ -1,7 +1,7 @@

|

|||||||

[[search-aggregations-reducer-avg-bucket-aggregation]]

|

[[search-aggregations-pipeline-avg-bucket-aggregation]]

|

||||||

=== Avg Bucket Aggregation

|

=== Avg Bucket Aggregation

|

||||||

|

|

||||||

A sibling reducer aggregation which calculates the (mean) average value of a specified metric in a sibling aggregation.

|

A sibling pipeline aggregation which calculates the (mean) average value of a specified metric in a sibling aggregation.

|

||||||

The specified metric must be numeric and the sibling aggregation must be a multi-bucket aggregation.

|

The specified metric must be numeric and the sibling aggregation must be a multi-bucket aggregation.

|

||||||

|

|

||||||

==== Syntax

|

==== Syntax

|

||||||

@ -1,7 +1,7 @@

|

|||||||

[[search-aggregations-reducer-derivative-aggregation]]

|

[[search-aggregations-pipeline-derivative-aggregation]]

|

||||||

=== Derivative Aggregation

|

=== Derivative Aggregation

|

||||||

|

|

||||||

A parent reducer aggregation which calculates the derivative of a specified metric in a parent histogram (or date_histogram)

|

A parent pipeline aggregation which calculates the derivative of a specified metric in a parent histogram (or date_histogram)

|

||||||

aggregation. The specified metric must be numeric and the enclosing histogram must have `min_doc_count` set to `0` (default

|

aggregation. The specified metric must be numeric and the enclosing histogram must have `min_doc_count` set to `0` (default

|

||||||

for `histogram` aggregations).

|

for `histogram` aggregations).

|

||||||

|

|

||||||

@ -112,8 +112,8 @@ would be $/month assuming the `price` field has units of $.

|

|||||||

|

|

||||||

==== Second Order Derivative

|

==== Second Order Derivative

|

||||||

|

|

||||||

A second order derivative can be calculated by chaining the derivative reducer aggregation onto the result of another derivative

|

A second order derivative can be calculated by chaining the derivative pipeline aggregation onto the result of another derivative

|

||||||

reducer aggregation as in the following example which will calculate both the first and the second order derivative of the total

|

pipeline aggregation as in the following example which will calculate both the first and the second order derivative of the total

|

||||||

monthly sales:

|

monthly sales:

|

||||||

|

|

||||||

[source,js]

|

[source,js]

|

||||||

@ -1,7 +1,7 @@

|

|||||||

[[search-aggregations-reducer-max-bucket-aggregation]]

|

[[search-aggregations-pipeline-max-bucket-aggregation]]

|

||||||

=== Max Bucket Aggregation

|

=== Max Bucket Aggregation

|

||||||

|

|

||||||

A sibling reducer aggregation which identifies the bucket(s) with the maximum value of a specified metric in a sibling aggregation

|

A sibling pipeline aggregation which identifies the bucket(s) with the maximum value of a specified metric in a sibling aggregation

|

||||||

and outputs both the value and the key(s) of the bucket(s). The specified metric must be numeric and the sibling aggregation must

|

and outputs both the value and the key(s) of the bucket(s). The specified metric must be numeric and the sibling aggregation must

|

||||||

be a multi-bucket aggregation.

|

be a multi-bucket aggregation.

|

||||||

|

|

||||||
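The behavior described above, reporting the maximum value together with the key(s) of every bucket that reaches it, can be sketched in plain Java. This is an assumption-laden illustration: buckets are modelled as a map from bucket key to a numeric metric value, and `MaxBucketSketch`, `Result`, and `maxBucket` are hypothetical names rather than Elasticsearch classes.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration only; not the Elasticsearch implementation.
class MaxBucketSketch {

    static final class Result {
        final double value;
        final List<String> keys;

        Result(double value, List<String> keys) {
            this.value = value;
            this.keys = keys;
        }
    }

    // Returns the largest metric value and every bucket key that has it.
    // For an empty map this sketch returns negative infinity and no keys.
    static Result maxBucket(Map<String, Double> metricByBucketKey) {
        double max = Double.NEGATIVE_INFINITY;
        List<String> keys = new ArrayList<>();
        for (Map.Entry<String, Double> e : metricByBucketKey.entrySet()) {
            double v = e.getValue();
            if (v > max) {
                max = v;
                keys.clear();
                keys.add(e.getKey());
            } else if (v == max) {
                keys.add(e.getKey()); // tie: keep all keys with the maximum
            }
        }
        return new Result(max, keys);
    }

    public static void main(String[] args) {
        Map<String, Double> sales = new LinkedHashMap<>(); // made-up bucket values
        sales.put("jan", 550.0);
        sales.put("feb", 60.0);
        sales.put("mar", 550.0);
        Result r = maxBucket(sales);
        System.out.println(r.value + " " + r.keys);
    }
}
```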

@ -1,7 +1,7 @@

|

|||||||

[[search-aggregations-reducer-min-bucket-aggregation]]

|

[[search-aggregations-pipeline-min-bucket-aggregation]]

|

||||||

=== Min Bucket Aggregation

|

=== Min Bucket Aggregation

|

||||||

|

|

||||||

A sibling reducer aggregation which identifies the bucket(s) with the minimum value of a specified metric in a sibling aggregation

|

A sibling pipeline aggregation which identifies the bucket(s) with the minimum value of a specified metric in a sibling aggregation

|

||||||

and outputs both the value and the key(s) of the bucket(s). The specified metric must be numeric and the sibling aggregation must

|

and outputs both the value and the key(s) of the bucket(s). The specified metric must be numeric and the sibling aggregation must

|

||||||

be a multi-bucket aggregation.

|

be a multi-bucket aggregation.

|

||||||

|

|

||||||

@ -1,4 +1,4 @@

|

|||||||

[[search-aggregations-reducers-movavg-reducer]]

|

[[search-aggregations-pipeline-movavg-aggregation]]

|

||||||

=== Moving Average Aggregation

|

=== Moving Average Aggregation

|

||||||

|

|

||||||

Given an ordered series of data, the Moving Average aggregation will slide a window across the data and emit the average

|

Given an ordered series of data, the Moving Average aggregation will slide a window across the data and emit the average

|

||||||

@ -109,14 +109,14 @@ track the data and only smooth out small scale fluctuations:

|

|||||||

|

|

||||||

[[movavg_10window]]

|

[[movavg_10window]]

|

||||||

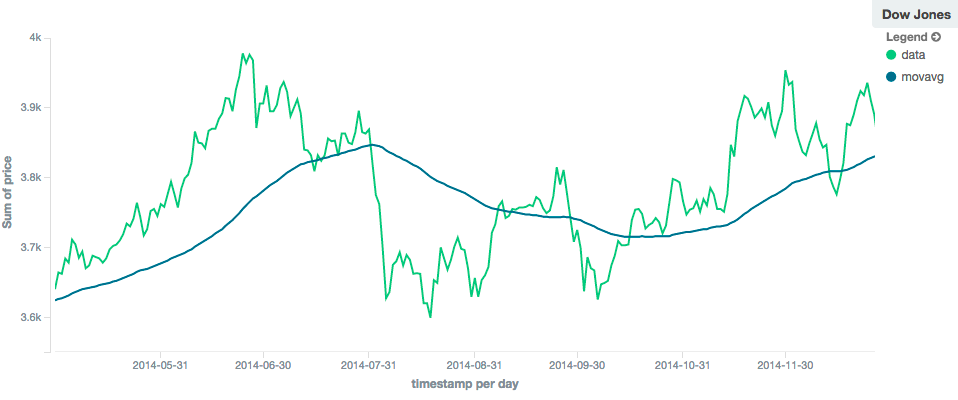

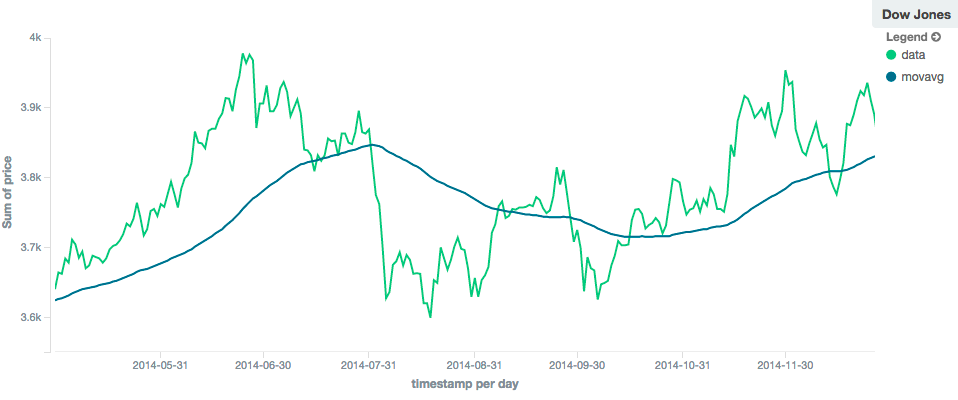

.Moving average with window of size 10

|

.Moving average with window of size 10

|

||||||

image::images/reducers_movavg/movavg_10window.png[]

|

image::images/pipeline_movavg/movavg_10window.png[]

|

||||||

|

|

||||||

In contrast, a `simple` moving average with larger window (`"window": 100`) will smooth out all higher-frequency fluctuations,

|

In contrast, a `simple` moving average with larger window (`"window": 100`) will smooth out all higher-frequency fluctuations,

|

||||||

leaving only low-frequency, long term trends. It also tends to "lag" behind the actual data by a substantial amount:

|

leaving only low-frequency, long term trends. It also tends to "lag" behind the actual data by a substantial amount:

|

||||||

|

|

||||||

[[movavg_100window]]

|

[[movavg_100window]]

|

||||||

.Moving average with window of size 100

|

.Moving average with window of size 100

|

||||||

image::images/reducers_movavg/movavg_100window.png[]

|

image::images/pipeline_movavg/movavg_100window.png[]

|

||||||

|

|

||||||

|

|

||||||
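The effect of the window size on a `simple` moving average can be explored with a small Java sketch. It assumes a trailing window that includes the current value and shrinks at the start of the series; the exact windowing used by the Moving Average aggregation may differ. `SimpleMovingAverageSketch` and `movingAverage` are hypothetical names.

```java
import java.util.Arrays;

// Hypothetical illustration only; windowing details are an assumption.
class SimpleMovingAverageSketch {

    // Unweighted mean over the last `window` values, including the current one.
    static double[] movingAverage(double[] values, int window) {
        if (window <= 0) {
            throw new IllegalArgumentException("window must be positive");
        }
        double[] out = new double[values.length];
        double sum = 0.0;
        for (int i = 0; i < values.length; i++) {
            sum += values[i];
            if (i >= window) {
                sum -= values[i - window]; // drop the value that left the window
            }
            int count = Math.min(i + 1, window);
            out[i] = sum / count;
        }
        return out;
    }

    public static void main(String[] args) {
        double[] series = {1, 2, 3, 4, 5}; // made-up data
        System.out.println(Arrays.toString(movingAverage(series, 2)));
        System.out.println(Arrays.toString(movingAverage(series, 5)));
    }
}
```

On a steadily rising series like this one, the wider window trails further behind the latest value, which is the "lag" the text refers to.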

==== Linear

|

==== Linear

|

||||||

@ -143,7 +143,7 @@ will closely track the data and only smooth out small scale fluctuations:

|

|||||||

|

|

||||||

[[linear_10window]]

|

[[linear_10window]]

|

||||||

.Linear moving average with window of size 10

|

.Linear moving average with window of size 10

|

||||||

image::images/reducers_movavg/linear_10window.png[]

|

image::images/pipeline_movavg/linear_10window.png[]

|

||||||

|

|

||||||

In contrast, a `linear` moving average with larger window (`"window": 100`) will smooth out all higher-frequency fluctuations,

|

In contrast, a `linear` moving average with larger window (`"window": 100`) will smooth out all higher-frequency fluctuations,

|

||||||

leaving only low-frequency, long term trends. It also tends to "lag" behind the actual data by a substantial amount,

|

leaving only low-frequency, long term trends. It also tends to "lag" behind the actual data by a substantial amount,

|

||||||

@ -151,7 +151,7 @@ although typically less than the `simple` model:

|

|||||||

|

|

||||||

[[linear_100window]]

|

[[linear_100window]]

|

||||||

.Linear moving average with window of size 100

|

.Linear moving average with window of size 100

|

||||||

image::images/reducers_movavg/linear_100window.png[]

|

image::images/pipeline_movavg/linear_100window.png[]

|

||||||

|

|

||||||

==== EWMA (Exponentially Weighted)

|

==== EWMA (Exponentially Weighted)

|

||||||

|

|

||||||

@ -181,11 +181,11 @@ The default value of `alpha` is `0.5`, and the setting accepts any float from 0-

|

|||||||

|

|

||||||

[[single_0.2alpha]]

|

[[single_0.2alpha]]

|

||||||

.Single Exponential moving average with window of size 10, alpha = 0.2

|

.Single Exponential moving average with window of size 10, alpha = 0.2

|

||||||

image::images/reducers_movavg/single_0.2alpha.png[]

|

image::images/pipeline_movavg/single_0.2alpha.png[]

|

||||||

|

|

||||||

[[single_0.7alpha]]

|

[[single_0.7alpha]]

|

||||||

.Single Exponential moving average with window of size 10, alpha = 0.7

|

.Single Exponential moving average with window of size 10, alpha = 0.7

|

||||||

image::images/reducers_movavg/single_0.7alpha.png[]

|

image::images/pipeline_movavg/single_0.7alpha.png[]

|

||||||

|

|

||||||

==== Holt-Linear

|

==== Holt-Linear

|

||||||

|

|

||||||

@ -224,11 +224,11 @@ values emphasize short-term trends. This will become more apparently when you a

|

|||||||

|

|

||||||

[[double_0.2beta]]

|

[[double_0.2beta]]

|

||||||

.Double Exponential moving average with window of size 100, alpha = 0.5, beta = 0.2

|

.Double Exponential moving average with window of size 100, alpha = 0.5, beta = 0.2

|

||||||

image::images/reducers_movavg/double_0.2beta.png[]

|

image::images/pipeline_movavg/double_0.2beta.png[]

|

||||||

|

|

||||||

[[double_0.7beta]]

|

[[double_0.7beta]]

|

||||||

.Double Exponential moving average with window of size 100, alpha = 0.5, beta = 0.7

|

.Double Exponential moving average with window of size 100, alpha = 0.5, beta = 0.7

|

||||||

image::images/reducers_movavg/double_0.7beta.png[]

|

image::images/pipeline_movavg/double_0.7beta.png[]

|

||||||

|

|

||||||

==== Prediction

|

==== Prediction

|

||||||

|

|

||||||

@ -256,7 +256,7 @@ of the last value in the series, producing a flat:

|

|||||||

|

|

||||||

[[simple_prediction]]

|

[[simple_prediction]]

|

||||||

.Simple moving average with window of size 10, predict = 50

|

.Simple moving average with window of size 10, predict = 50

|

||||||

image::images/reducers_movavg/simple_prediction.png[]

|

image::images/pipeline_movavg/simple_prediction.png[]

|

||||||

|

|

||||||

In contrast, the `holt` model can extrapolate based on local or global constant trends. If we set a high `beta`

|

In contrast, the `holt` model can extrapolate based on local or global constant trends. If we set a high `beta`

|

||||||

value, we can extrapolate based on local constant trends (in this case the predictions head down, because the data at the end

|

value, we can extrapolate based on local constant trends (in this case the predictions head down, because the data at the end

|

||||||

@ -264,11 +264,11 @@ of the series was heading in a downward direction):

|

|||||||

|

|

||||||

[[double_prediction_local]]

|

[[double_prediction_local]]

|

||||||

.Double Exponential moving average with window of size 100, predict = 20, alpha = 0.5, beta = 0.8

|

.Double Exponential moving average with window of size 100, predict = 20, alpha = 0.5, beta = 0.8

|

||||||

image::images/reducers_movavg/double_prediction_local.png[]

|

image::images/pipeline_movavg/double_prediction_local.png[]

|

||||||

|

|

||||||

In contrast, if we choose a small `beta`, the predictions are based on the global constant trend. In this series, the

|

In contrast, if we choose a small `beta`, the predictions are based on the global constant trend. In this series, the

|

||||||

global trend is slightly positive, so the prediction makes a sharp u-turn and begins a positive slope:

|

global trend is slightly positive, so the prediction makes a sharp u-turn and begins a positive slope:

|

||||||

|

|

||||||

[[double_prediction_global]]

|

[[double_prediction_global]]

|

||||||

.Double Exponential moving average with window of size 100, predict = 20, alpha = 0.5, beta = 0.1

|

.Double Exponential moving average with window of size 100, predict = 20, alpha = 0.5, beta = 0.1

|

||||||

image::images/reducers_movavg/double_prediction_global.png[]

|

image::images/pipeline_movavg/double_prediction_global.png[]

|

||||||

@ -1,7 +1,7 @@

|

|||||||

[[search-aggregations-reducer-sum-bucket-aggregation]]

|

[[search-aggregations-pipeline-sum-bucket-aggregation]]

|

||||||

=== Sum Bucket Aggregation

|

=== Sum Bucket Aggregation

|

||||||

|

|

||||||

A sibling reducer aggregation which calculates the sum across all buckets of a specified metric in a sibling aggregation.

|

A sibling pipeline aggregation which calculates the sum across all buckets of a specified metric in a sibling aggregation.

|

||||||

The specified metric must be numeric and the sibling aggregation must be a multi-bucket aggregation.

|

The specified metric must be numeric and the sibling aggregation must be a multi-bucket aggregation.

|

||||||

|

|

||||||

==== Syntax

|

==== Syntax

|

||||||

|

[Binary image diffs: 11 image files, each shown with identical before and after sizes (63 to 72 KiB)]

|

@ -20,6 +20,7 @@

|

|||||||

package org.elasticsearch.action;

|

package org.elasticsearch.action;

|

||||||

|

|

||||||

import com.google.common.base.Preconditions;

|

import com.google.common.base.Preconditions;

|

||||||

|

|

||||||

import org.elasticsearch.ElasticsearchException;

|

import org.elasticsearch.ElasticsearchException;

|

||||||

import org.elasticsearch.action.support.PlainListenableActionFuture;

|

import org.elasticsearch.action.support.PlainListenableActionFuture;

|

||||||

import org.elasticsearch.client.Client;

|

import org.elasticsearch.client.Client;

|

||||||

@ -27,7 +28,7 @@ import org.elasticsearch.client.ClusterAdminClient;

|

|||||||

import org.elasticsearch.client.ElasticsearchClient;

|

import org.elasticsearch.client.ElasticsearchClient;

|

||||||

import org.elasticsearch.client.IndicesAdminClient;

|

import org.elasticsearch.client.IndicesAdminClient;

|

||||||

import org.elasticsearch.common.unit.TimeValue;

|

import org.elasticsearch.common.unit.TimeValue;

|

||||||

import org.elasticsearch.search.aggregations.reducers.ReducerBuilder;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregatorBuilder;

|

||||||

import org.elasticsearch.threadpool.ThreadPool;

|

import org.elasticsearch.threadpool.ThreadPool;

|

||||||

|

|

||||||

/**

|

/**

|

||||||

|

|||||||

@ -28,9 +28,9 @@ import org.elasticsearch.common.io.stream.StreamOutput;

|

|||||||

import org.elasticsearch.index.shard.ShardId;

|

import org.elasticsearch.index.shard.ShardId;

|

||||||

import org.elasticsearch.percolator.PercolateContext;

|

import org.elasticsearch.percolator.PercolateContext;

|

||||||

import org.elasticsearch.search.aggregations.InternalAggregations;

|

import org.elasticsearch.search.aggregations.InternalAggregations;

|

||||||

import org.elasticsearch.search.aggregations.reducers.Reducer;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregator;

|

||||||

import org.elasticsearch.search.aggregations.reducers.ReducerStreams;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregatorStreams;

|

||||||

import org.elasticsearch.search.aggregations.reducers.SiblingReducer;

|

import org.elasticsearch.search.aggregations.pipeline.SiblingPipelineAggregator;

|

||||||

import org.elasticsearch.search.highlight.HighlightField;

|

import org.elasticsearch.search.highlight.HighlightField;

|

||||||

import org.elasticsearch.search.query.QuerySearchResult;

|

import org.elasticsearch.search.query.QuerySearchResult;

|

||||||

|

|

||||||

@ -56,7 +56,7 @@ public class PercolateShardResponse extends BroadcastShardOperationResponse {

|

|||||||

private int requestedSize;

|

private int requestedSize;

|

||||||

|

|

||||||

private InternalAggregations aggregations;

|

private InternalAggregations aggregations;

|

||||||

private List<SiblingReducer> reducers;

|

private List<SiblingPipelineAggregator> pipelineAggregators;

|

||||||

|

|

||||||

PercolateShardResponse() {

|

PercolateShardResponse() {

|

||||||

hls = new ArrayList<>();

|

hls = new ArrayList<>();

|

||||||

@ -75,7 +75,7 @@ public class PercolateShardResponse extends BroadcastShardOperationResponse {

|

|||||||

if (result.aggregations() != null) {

|

if (result.aggregations() != null) {

|

||||||

this.aggregations = (InternalAggregations) result.aggregations();

|

this.aggregations = (InternalAggregations) result.aggregations();

|

||||||

}

|

}

|

||||||

this.reducers = result.reducers();

|

this.pipelineAggregators = result.pipelineAggregators();

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -119,8 +119,8 @@ public class PercolateShardResponse extends BroadcastShardOperationResponse {

|

|||||||

return aggregations;

|

return aggregations;

|

||||||

}

|

}

|

||||||

|

|

||||||

public List<SiblingReducer> reducers() {

|

public List<SiblingPipelineAggregator> pipelineAggregators() {

|

||||||

return reducers;

|

return pipelineAggregators;

|

||||||

}

|

}

|

||||||

|

|

||||||

public byte percolatorTypeId() {

|

public byte percolatorTypeId() {

|

||||||

@ -156,14 +156,14 @@ public class PercolateShardResponse extends BroadcastShardOperationResponse {

|

|||||||

}

|

}

|

||||||

aggregations = InternalAggregations.readOptionalAggregations(in);

|

aggregations = InternalAggregations.readOptionalAggregations(in);

|

||||||

if (in.readBoolean()) {

|

if (in.readBoolean()) {

|

||||||

int reducersSize = in.readVInt();

|

int pipelineAggregatorsSize = in.readVInt();

|

||||||

List<SiblingReducer> reducers = new ArrayList<>(reducersSize);

|

List<SiblingPipelineAggregator> pipelineAggregators = new ArrayList<>(pipelineAggregatorsSize);

|

||||||

for (int i = 0; i < reducersSize; i++) {

|

for (int i = 0; i < pipelineAggregatorsSize; i++) {

|

||||||

BytesReference type = in.readBytesReference();

|

BytesReference type = in.readBytesReference();

|

||||||

Reducer reducer = ReducerStreams.stream(type).readResult(in);

|

PipelineAggregator pipelineAggregator = PipelineAggregatorStreams.stream(type).readResult(in);

|

||||||

reducers.add((SiblingReducer) reducer);

|

pipelineAggregators.add((SiblingPipelineAggregator) pipelineAggregator);

|

||||||

}

|

}

|

||||||

this.reducers = reducers;

|

this.pipelineAggregators = pipelineAggregators;

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -190,14 +190,14 @@ public class PercolateShardResponse extends BroadcastShardOperationResponse {

|

|||||||

}

|

}

|

||||||

}

|

}

|

||||||

out.writeOptionalStreamable(aggregations);

|

out.writeOptionalStreamable(aggregations);

|

||||||

if (reducers == null) {

|

if (pipelineAggregators == null) {

|

||||||

out.writeBoolean(false);

|

out.writeBoolean(false);

|

||||||

} else {

|

} else {

|

||||||

out.writeBoolean(true);

|

out.writeBoolean(true);

|

||||||

out.writeVInt(reducers.size());

|

out.writeVInt(pipelineAggregators.size());

|

||||||

for (Reducer reducer : reducers) {

|

for (PipelineAggregator pipelineAggregator : pipelineAggregators) {

|

||||||

out.writeBytesReference(reducer.type().stream());

|

out.writeBytesReference(pipelineAggregator.type().stream());

|

||||||

reducer.writeTo(out);

|

pipelineAggregator.writeTo(out);

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|||||||

@ -86,8 +86,8 @@ import org.elasticsearch.search.aggregations.AggregationPhase;

|

|||||||

import org.elasticsearch.search.aggregations.InternalAggregation;

|

import org.elasticsearch.search.aggregations.InternalAggregation;

|

||||||

import org.elasticsearch.search.aggregations.InternalAggregation.ReduceContext;

|

import org.elasticsearch.search.aggregations.InternalAggregation.ReduceContext;

|

||||||

import org.elasticsearch.search.aggregations.InternalAggregations;

|

import org.elasticsearch.search.aggregations.InternalAggregations;

|

||||||

import org.elasticsearch.search.aggregations.reducers.Reducer;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregator;

|

||||||

import org.elasticsearch.search.aggregations.reducers.SiblingReducer;

|

import org.elasticsearch.search.aggregations.pipeline.SiblingPipelineAggregator;

|

||||||

import org.elasticsearch.search.highlight.HighlightField;

|

import org.elasticsearch.search.highlight.HighlightField;

|

||||||

import org.elasticsearch.search.highlight.HighlightPhase;

|

import org.elasticsearch.search.highlight.HighlightPhase;

|

||||||

import org.elasticsearch.search.internal.SearchContext;

|

import org.elasticsearch.search.internal.SearchContext;

|

||||||

@ -852,11 +852,11 @@ public class PercolatorService extends AbstractComponent {

|

|||||||

}

|

}

|

||||||

InternalAggregations aggregations = InternalAggregations.reduce(aggregationsList, new ReduceContext(bigArrays, scriptService));

|

InternalAggregations aggregations = InternalAggregations.reduce(aggregationsList, new ReduceContext(bigArrays, scriptService));

|

||||||

if (aggregations != null) {

|

if (aggregations != null) {

|

||||||

List<SiblingReducer> reducers = shardResults.get(0).reducers();

|

List<SiblingPipelineAggregator> pipelineAggregators = shardResults.get(0).pipelineAggregators();

|

||||||

if (reducers != null) {

|

if (pipelineAggregators != null) {

|

||||||

List<InternalAggregation> newAggs = new ArrayList<>(Lists.transform(aggregations.asList(), Reducer.AGGREGATION_TRANFORM_FUNCTION));

|

List<InternalAggregation> newAggs = new ArrayList<>(Lists.transform(aggregations.asList(), PipelineAggregator.AGGREGATION_TRANFORM_FUNCTION));

|

||||||

for (SiblingReducer reducer : reducers) {

|

for (SiblingPipelineAggregator pipelineAggregator : pipelineAggregators) {

|

||||||

InternalAggregation newAgg = reducer.doReduce(new InternalAggregations(newAggs), new ReduceContext(bigArrays,

|

InternalAggregation newAgg = pipelineAggregator.doReduce(new InternalAggregations(newAggs), new ReduceContext(bigArrays,

|

||||||

scriptService));

|

scriptService));

|

||||||

newAggs.add(newAgg);

|

newAggs.add(newAgg);

|

||||||

}

|

}

|

||||||

|

|||||||

@ -56,14 +56,14 @@ import org.elasticsearch.search.aggregations.metrics.stats.extended.ExtendedStat

|

|||||||

import org.elasticsearch.search.aggregations.metrics.sum.SumParser;

|

import org.elasticsearch.search.aggregations.metrics.sum.SumParser;

|

||||||

import org.elasticsearch.search.aggregations.metrics.tophits.TopHitsParser;

|

import org.elasticsearch.search.aggregations.metrics.tophits.TopHitsParser;

|

||||||

import org.elasticsearch.search.aggregations.metrics.valuecount.ValueCountParser;

|

import org.elasticsearch.search.aggregations.metrics.valuecount.ValueCountParser;

|

||||||

import org.elasticsearch.search.aggregations.reducers.Reducer;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregator;

|

||||||

import org.elasticsearch.search.aggregations.reducers.bucketmetrics.avg.AvgBucketParser;

|

import org.elasticsearch.search.aggregations.pipeline.bucketmetrics.avg.AvgBucketParser;

|

||||||

import org.elasticsearch.search.aggregations.reducers.bucketmetrics.max.MaxBucketParser;

|

import org.elasticsearch.search.aggregations.pipeline.bucketmetrics.max.MaxBucketParser;

|

||||||

import org.elasticsearch.search.aggregations.reducers.bucketmetrics.min.MinBucketParser;

|

import org.elasticsearch.search.aggregations.pipeline.bucketmetrics.min.MinBucketParser;

|

||||||

import org.elasticsearch.search.aggregations.reducers.bucketmetrics.sum.SumBucketParser;

|

import org.elasticsearch.search.aggregations.pipeline.bucketmetrics.sum.SumBucketParser;

|

||||||

import org.elasticsearch.search.aggregations.reducers.derivative.DerivativeParser;

|

import org.elasticsearch.search.aggregations.pipeline.derivative.DerivativeParser;

|

||||||

import org.elasticsearch.search.aggregations.reducers.movavg.MovAvgParser;

|

import org.elasticsearch.search.aggregations.pipeline.movavg.MovAvgParser;

|

||||||

import org.elasticsearch.search.aggregations.reducers.movavg.models.MovAvgModelModule;

|

import org.elasticsearch.search.aggregations.pipeline.movavg.models.MovAvgModelModule;

|

||||||

|

|

||||||

import java.util.List;

|

import java.util.List;

|

||||||

|

|

||||||

@ -73,7 +73,7 @@ import java.util.List;

|

|||||||

public class AggregationModule extends AbstractModule implements SpawnModules{

|

public class AggregationModule extends AbstractModule implements SpawnModules{

|

||||||

|

|

||||||

private List<Class<? extends Aggregator.Parser>> aggParsers = Lists.newArrayList();

|

private List<Class<? extends Aggregator.Parser>> aggParsers = Lists.newArrayList();

|

||||||

private List<Class<? extends Reducer.Parser>> reducerParsers = Lists.newArrayList();

|

private List<Class<? extends PipelineAggregator.Parser>> pipelineAggParsers = Lists.newArrayList();

|

||||||

|

|

||||||

public AggregationModule() {

|

public AggregationModule() {

|

||||||

aggParsers.add(AvgParser.class);

|

aggParsers.add(AvgParser.class);

|

||||||

@ -108,12 +108,12 @@ public class AggregationModule extends AbstractModule implements SpawnModules{

|

|||||||

aggParsers.add(ScriptedMetricParser.class);

|

aggParsers.add(ScriptedMetricParser.class);

|

||||||

aggParsers.add(ChildrenParser.class);

|

aggParsers.add(ChildrenParser.class);

|

||||||

|

|

||||||

reducerParsers.add(DerivativeParser.class);

|

pipelineAggParsers.add(DerivativeParser.class);

|

||||||

reducerParsers.add(MaxBucketParser.class);

|

pipelineAggParsers.add(MaxBucketParser.class);

|

||||||

reducerParsers.add(MinBucketParser.class);

|

pipelineAggParsers.add(MinBucketParser.class);

|

||||||

reducerParsers.add(AvgBucketParser.class);

|

pipelineAggParsers.add(AvgBucketParser.class);

|

||||||

reducerParsers.add(SumBucketParser.class);

|

pipelineAggParsers.add(SumBucketParser.class);

|

||||||

reducerParsers.add(MovAvgParser.class);

|

pipelineAggParsers.add(MovAvgParser.class);

|

||||||

}

|

}

|

||||||

|

|

||||||

/**

|

/**

|

||||||

@ -131,9 +131,9 @@ public class AggregationModule extends AbstractModule implements SpawnModules{

|

|||||||

for (Class<? extends Aggregator.Parser> parser : aggParsers) {

|

for (Class<? extends Aggregator.Parser> parser : aggParsers) {

|

||||||

multibinderAggParser.addBinding().to(parser);

|

multibinderAggParser.addBinding().to(parser);

|

||||||

}

|

}

|

||||||

Multibinder<Reducer.Parser> multibinderReducerParser = Multibinder.newSetBinder(binder(), Reducer.Parser.class);

|

Multibinder<PipelineAggregator.Parser> multibinderPipelineAggParser = Multibinder.newSetBinder(binder(), PipelineAggregator.Parser.class);

|

||||||

for (Class<? extends Reducer.Parser> parser : reducerParsers) {

|

for (Class<? extends PipelineAggregator.Parser> parser : pipelineAggParsers) {

|

||||||

multibinderReducerParser.addBinding().to(parser);

|

multibinderPipelineAggParser.addBinding().to(parser);

|

||||||

}

|

}

|

||||||

bind(AggregatorParsers.class).asEagerSingleton();

|

bind(AggregatorParsers.class).asEagerSingleton();

|

||||||

bind(AggregationParseElement.class).asEagerSingleton();

|

bind(AggregationParseElement.class).asEagerSingleton();

|

||||||

|

|||||||

@ -23,14 +23,13 @@ import com.google.common.collect.ImmutableMap;

|

|||||||

import org.apache.lucene.search.BooleanClause.Occur;

|

import org.apache.lucene.search.BooleanClause.Occur;

|

||||||

import org.apache.lucene.search.BooleanQuery;

|

import org.apache.lucene.search.BooleanQuery;

|

||||||

import org.apache.lucene.search.Query;

|

import org.apache.lucene.search.Query;

|

||||||

import org.elasticsearch.ElasticsearchException;

|

|

||||||

import org.elasticsearch.common.inject.Inject;

|

import org.elasticsearch.common.inject.Inject;

|

||||||

import org.elasticsearch.common.lucene.search.Queries;

|

import org.elasticsearch.common.lucene.search.Queries;

|

||||||

import org.elasticsearch.search.SearchParseElement;

|

import org.elasticsearch.search.SearchParseElement;

|

||||||

import org.elasticsearch.search.SearchPhase;

|

import org.elasticsearch.search.SearchPhase;

|

||||||

import org.elasticsearch.search.aggregations.bucket.global.GlobalAggregator;

|

import org.elasticsearch.search.aggregations.bucket.global.GlobalAggregator;

|

||||||

import org.elasticsearch.search.aggregations.reducers.Reducer;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregator;

|

||||||

import org.elasticsearch.search.aggregations.reducers.SiblingReducer;

|

import org.elasticsearch.search.aggregations.pipeline.SiblingPipelineAggregator;

|

||||||

import org.elasticsearch.search.aggregations.support.AggregationContext;

|

import org.elasticsearch.search.aggregations.support.AggregationContext;

|

||||||

import org.elasticsearch.search.internal.SearchContext;

|

import org.elasticsearch.search.internal.SearchContext;

|

||||||

import org.elasticsearch.search.query.QueryPhaseExecutionException;

|

import org.elasticsearch.search.query.QueryPhaseExecutionException;

|

||||||

@ -145,19 +144,20 @@ public class AggregationPhase implements SearchPhase {

|

|||||||

}

|

}

|

||||||

context.queryResult().aggregations(new InternalAggregations(aggregations));

|

context.queryResult().aggregations(new InternalAggregations(aggregations));

|

||||||

try {

|

try {

|

||||||

List<Reducer> reducers = context.aggregations().factories().createReducers();

|

List<PipelineAggregator> pipelineAggregators = context.aggregations().factories().createPipelineAggregators();

|

||||||

List<SiblingReducer> siblingReducers = new ArrayList<>(reducers.size());

|

List<SiblingPipelineAggregator> siblingPipelineAggregators = new ArrayList<>(pipelineAggregators.size());

|

||||||

for (Reducer reducer : reducers) {

|

for (PipelineAggregator pipelineAggregator : pipelineAggregators) {

|

||||||

if (reducer instanceof SiblingReducer) {

|

if (pipelineAggregator instanceof SiblingPipelineAggregator) {

|

||||||

siblingReducers.add((SiblingReducer) reducer);

|

siblingPipelineAggregators.add((SiblingPipelineAggregator) pipelineAggregator);

|

||||||

} else {

|

} else {

|

||||||

throw new AggregationExecutionException("Invalid reducer named [" + reducer.name() + "] of type ["

|

throw new AggregationExecutionException("Invalid pipeline aggregation named [" + pipelineAggregator.name()

|

||||||

+ reducer.type().name() + "]. Only sibling reducers are allowed at the top level");

|

+ "] of type [" + pipelineAggregator.type().name()

|

||||||

|

+ "]. Only sibling pipeline aggregations are allowed at the top level");

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

context.queryResult().reducers(siblingReducers);

|

context.queryResult().pipelineAggregators(siblingPipelineAggregators);

|

||||||

} catch (IOException e) {

|

} catch (IOException e) {

|

||||||

throw new AggregationExecutionException("Failed to build top level reducers", e);

|

throw new AggregationExecutionException("Failed to build top level pipeline aggregators", e);

|

||||||

}

|

}

|

||||||

|

|

||||||

// disable aggregations so that they don't run on next pages in case of scrolling

|

// disable aggregations so that they don't run on next pages in case of scrolling

|

||||||

|

|||||||

@ -21,7 +21,7 @@ package org.elasticsearch.search.aggregations;

|

|||||||

import org.apache.lucene.index.LeafReaderContext;

|

import org.apache.lucene.index.LeafReaderContext;

|

||||||

import org.elasticsearch.search.aggregations.bucket.BestBucketsDeferringCollector;

|

import org.elasticsearch.search.aggregations.bucket.BestBucketsDeferringCollector;

|

||||||

import org.elasticsearch.search.aggregations.bucket.DeferringBucketCollector;

|

import org.elasticsearch.search.aggregations.bucket.DeferringBucketCollector;

|

||||||

import org.elasticsearch.search.aggregations.reducers.Reducer;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregator;

|

||||||

import org.elasticsearch.search.aggregations.support.AggregationContext;

|

import org.elasticsearch.search.aggregations.support.AggregationContext;

|

||||||

import org.elasticsearch.search.internal.SearchContext.Lifetime;

|

import org.elasticsearch.search.internal.SearchContext.Lifetime;

|

||||||

import org.elasticsearch.search.query.QueryPhaseExecutionException;

|

import org.elasticsearch.search.query.QueryPhaseExecutionException;

|

||||||

@ -47,7 +47,7 @@ public abstract class AggregatorBase extends Aggregator {

|

|||||||

|

|

||||||

private Map<String, Aggregator> subAggregatorbyName;

|

private Map<String, Aggregator> subAggregatorbyName;

|

||||||

private DeferringBucketCollector recordingWrapper;

|

private DeferringBucketCollector recordingWrapper;

|

||||||

private final List<Reducer> reducers;

|

private final List<PipelineAggregator> pipelineAggregators;

|

||||||

|

|

||||||

/**

|

/**

|

||||||

* Constructs a new Aggregator.

|

* Constructs a new Aggregator.

|

||||||

@ -59,9 +59,9 @@ public abstract class AggregatorBase extends Aggregator {

|

|||||||

* @param metaData The metaData associated with this aggregator

|

* @param metaData The metaData associated with this aggregator

|

||||||

*/

|

*/

|

||||||

protected AggregatorBase(String name, AggregatorFactories factories, AggregationContext context, Aggregator parent,

|

protected AggregatorBase(String name, AggregatorFactories factories, AggregationContext context, Aggregator parent,

|

||||||

List<Reducer> reducers, Map<String, Object> metaData) throws IOException {

|

List<PipelineAggregator> pipelineAggregators, Map<String, Object> metaData) throws IOException {

|

||||||

this.name = name;

|

this.name = name;

|

||||||

this.reducers = reducers;

|

this.pipelineAggregators = pipelineAggregators;

|

||||||

this.metaData = metaData;

|

this.metaData = metaData;

|

||||||

this.parent = parent;

|

this.parent = parent;

|

||||||

this.context = context;

|

this.context = context;

|

||||||

@ -116,8 +116,8 @@ public abstract class AggregatorBase extends Aggregator {

|

|||||||

return this.metaData;

|

return this.metaData;

|

||||||

}

|

}

|

||||||

|

|

||||||

public List<Reducer> reducers() {

|

public List<PipelineAggregator> pipelineAggregators() {

|

||||||

return this.reducers;

|

return this.pipelineAggregators;

|

||||||

}

|

}

|

||||||

|

|

||||||

/**

|

/**

|

||||||

|

|||||||

@ -18,8 +18,8 @@

|

|||||||

*/

|

*/

|

||||||

package org.elasticsearch.search.aggregations;

|

package org.elasticsearch.search.aggregations;

|

||||||

|

|

||||||

import org.elasticsearch.search.aggregations.reducers.Reducer;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregator;

|

||||||

import org.elasticsearch.search.aggregations.reducers.ReducerFactory;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregatorFactory;

|

||||||

import org.elasticsearch.search.aggregations.support.AggregationContext;

|

import org.elasticsearch.search.aggregations.support.AggregationContext;

|

||||||

import org.elasticsearch.search.aggregations.support.AggregationPath;

|

import org.elasticsearch.search.aggregations.support.AggregationPath;

|

||||||

|

|

||||||

@ -41,23 +41,23 @@ public class AggregatorFactories {

|

|||||||

|

|

||||||

private AggregatorFactory parent;

|

private AggregatorFactory parent;

|

||||||

private AggregatorFactory[] factories;

|

private AggregatorFactory[] factories;

|

||||||

private List<ReducerFactory> reducerFactories;

|

private List<PipelineAggregatorFactory> pipelineAggregatorFactories;

|

||||||

|

|

||||||

public static Builder builder() {

|

public static Builder builder() {

|

||||||

return new Builder();

|

return new Builder();

|

||||||

}

|

}

|

||||||

|

|

||||||

private AggregatorFactories(AggregatorFactory[] factories, List<ReducerFactory> reducers) {

|

private AggregatorFactories(AggregatorFactory[] factories, List<PipelineAggregatorFactory> pipelineAggregators) {

|

||||||

this.factories = factories;

|

this.factories = factories;

|

||||||

this.reducerFactories = reducers;

|

this.pipelineAggregatorFactories = pipelineAggregators;

|

||||||

}

|

}

|

||||||

|

|

||||||

public List<Reducer> createReducers() throws IOException {

|

public List<PipelineAggregator> createPipelineAggregators() throws IOException {

|

||||||

List<Reducer> reducers = new ArrayList<>();

|

List<PipelineAggregator> pipelineAggregators = new ArrayList<>();

|

||||||

for (ReducerFactory factory : this.reducerFactories) {

|

for (PipelineAggregatorFactory factory : this.pipelineAggregatorFactories) {

|

||||||

reducers.add(factory.create());

|

pipelineAggregators.add(factory.create());

|

||||||

}

|

}

|

||||||

return reducers;

|

return pipelineAggregators;

|

||||||

}

|

}

|

||||||

|

|

||||||

/**

|

/**

|

||||||

@ -103,8 +103,8 @@ public class AggregatorFactories {

|

|||||||

for (AggregatorFactory factory : factories) {

|

for (AggregatorFactory factory : factories) {

|

||||||

factory.validate();

|

factory.validate();

|

||||||

}

|

}

|

||||||

for (ReducerFactory factory : reducerFactories) {

|

for (PipelineAggregatorFactory factory : pipelineAggregatorFactories) {

|

||||||

factory.validate(parent, factories, reducerFactories);

|

factory.validate(parent, factories, pipelineAggregatorFactories);

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -112,10 +112,10 @@ public class AggregatorFactories {

|

|||||||

|

|

||||||

private static final AggregatorFactory[] EMPTY_FACTORIES = new AggregatorFactory[0];

|

private static final AggregatorFactory[] EMPTY_FACTORIES = new AggregatorFactory[0];

|

||||||

private static final Aggregator[] EMPTY_AGGREGATORS = new Aggregator[0];

|

private static final Aggregator[] EMPTY_AGGREGATORS = new Aggregator[0];

|

||||||

private static final List<ReducerFactory> EMPTY_REDUCERS = new ArrayList<>();

|

private static final List<PipelineAggregatorFactory> EMPTY_PIPELINE_AGGREGATORS = new ArrayList<>();

|

||||||

|

|

||||||

private Empty() {

|

private Empty() {

|

||||||

super(EMPTY_FACTORIES, EMPTY_REDUCERS);

|

super(EMPTY_FACTORIES, EMPTY_PIPELINE_AGGREGATORS);

|

||||||

}

|

}

|

||||||

|

|

||||||

@Override

|

@Override

|

||||||

@ -134,7 +134,7 @@ public class AggregatorFactories {

|

|||||||

|

|

||||||

private final Set<String> names = new HashSet<>();

|

private final Set<String> names = new HashSet<>();

|

||||||

private final List<AggregatorFactory> factories = new ArrayList<>();

|

private final List<AggregatorFactory> factories = new ArrayList<>();

|

||||||

private final List<ReducerFactory> reducerFactories = new ArrayList<>();

|

private final List<PipelineAggregatorFactory> pipelineAggregatorFactories = new ArrayList<>();

|

||||||

|

|

||||||

public Builder addAggregator(AggregatorFactory factory) {

|

public Builder addAggregator(AggregatorFactory factory) {

|

||||||

if (!names.add(factory.name)) {

|

if (!names.add(factory.name)) {

|

||||||

@ -144,43 +144,43 @@ public class AggregatorFactories {

|

|||||||

return this;

|

return this;

|

||||||

}

|

}

|

||||||

|

|

||||||

public Builder addReducer(ReducerFactory reducerFactory) {

|

public Builder addPipelineAggregator(PipelineAggregatorFactory pipelineAggregatorFactory) {

|

||||||

this.reducerFactories.add(reducerFactory);

|

this.pipelineAggregatorFactories.add(pipelineAggregatorFactory);

|

||||||

return this;

|

return this;

|

||||||

}

|

}

|

||||||

|

|

||||||

public AggregatorFactories build() {

|

public AggregatorFactories build() {

|

||||||

if (factories.isEmpty() && reducerFactories.isEmpty()) {

|

if (factories.isEmpty() && pipelineAggregatorFactories.isEmpty()) {

|

||||||

return EMPTY;

|

return EMPTY;

|

||||||

}

|

}

|

||||||

List<ReducerFactory> orderedReducers = resolveReducerOrder(this.reducerFactories, this.factories);

|

List<PipelineAggregatorFactory> orderedPipelineAggregators = resolvePipelineAggregatorOrder(this.pipelineAggregatorFactories, this.factories);

|

||||||

return new AggregatorFactories(factories.toArray(new AggregatorFactory[factories.size()]), orderedReducers);

|

return new AggregatorFactories(factories.toArray(new AggregatorFactory[factories.size()]), orderedPipelineAggregators);

|

||||||

}

|

}

|

||||||

|

|

||||||

private List<ReducerFactory> resolveReducerOrder(List<ReducerFactory> reducerFactories, List<AggregatorFactory> aggFactories) {

|

private List<PipelineAggregatorFactory> resolvePipelineAggregatorOrder(List<PipelineAggregatorFactory> pipelineAggregatorFactories, List<AggregatorFactory> aggFactories) {

|

||||||

Map<String, ReducerFactory> reducerFactoriesMap = new HashMap<>();

|

Map<String, PipelineAggregatorFactory> pipelineAggregatorFactoriesMap = new HashMap<>();

|

||||||

for (ReducerFactory factory : reducerFactories) {

|

for (PipelineAggregatorFactory factory : pipelineAggregatorFactories) {

|

||||||

reducerFactoriesMap.put(factory.getName(), factory);

|

pipelineAggregatorFactoriesMap.put(factory.getName(), factory);

|

||||||

}

|

}

|

||||||

Set<String> aggFactoryNames = new HashSet<>();

|

Set<String> aggFactoryNames = new HashSet<>();

|

||||||

for (AggregatorFactory aggFactory : aggFactories) {

|

for (AggregatorFactory aggFactory : aggFactories) {

|

||||||

aggFactoryNames.add(aggFactory.name);

|

aggFactoryNames.add(aggFactory.name);

|

||||||

}

|

}

|

||||||

List<ReducerFactory> orderedReducers = new LinkedList<>();

|

List<PipelineAggregatorFactory> orderedPipelineAggregators = new LinkedList<>();

|

||||||

List<ReducerFactory> unmarkedFactories = new ArrayList<ReducerFactory>(reducerFactories);

|

List<PipelineAggregatorFactory> unmarkedFactories = new ArrayList<PipelineAggregatorFactory>(pipelineAggregatorFactories);

|

||||||

Set<ReducerFactory> temporarilyMarked = new HashSet<ReducerFactory>();

|

Set<PipelineAggregatorFactory> temporarilyMarked = new HashSet<PipelineAggregatorFactory>();

|

||||||

while (!unmarkedFactories.isEmpty()) {

|

while (!unmarkedFactories.isEmpty()) {

|

||||||

ReducerFactory factory = unmarkedFactories.get(0);

|

PipelineAggregatorFactory factory = unmarkedFactories.get(0);

|

||||||

resolveReducerOrder(aggFactoryNames, reducerFactoriesMap, orderedReducers, unmarkedFactories, temporarilyMarked, factory);

|

resolvePipelineAggregatorOrder(aggFactoryNames, pipelineAggregatorFactoriesMap, orderedPipelineAggregators, unmarkedFactories, temporarilyMarked, factory);

|

||||||

}

|

}

|

||||||

return orderedReducers;

|

return orderedPipelineAggregators;

|

||||||

}

|

}

|

||||||

|

|

||||||

private void resolveReducerOrder(Set<String> aggFactoryNames, Map<String, ReducerFactory> reducerFactoriesMap,

|

private void resolvePipelineAggregatorOrder(Set<String> aggFactoryNames, Map<String, PipelineAggregatorFactory> pipelineAggregatorFactoriesMap,

|

||||||

List<ReducerFactory> orderedReducers, List<ReducerFactory> unmarkedFactories, Set<ReducerFactory> temporarilyMarked,

|

List<PipelineAggregatorFactory> orderedPipelineAggregators, List<PipelineAggregatorFactory> unmarkedFactories, Set<PipelineAggregatorFactory> temporarilyMarked,

|

||||||

ReducerFactory factory) {

|

PipelineAggregatorFactory factory) {

|

||||||

if (temporarilyMarked.contains(factory)) {

|

if (temporarilyMarked.contains(factory)) {

|

||||||

throw new IllegalStateException("Cyclical dependancy found with reducer [" + factory.getName() + "]");

|

throw new IllegalStateException("Cyclical dependancy found with pipeline aggregator [" + factory.getName() + "]");

|

||||||

} else if (unmarkedFactories.contains(factory)) {

|

} else if (unmarkedFactories.contains(factory)) {

|

||||||

temporarilyMarked.add(factory);

|

temporarilyMarked.add(factory);

|

||||||

String[] bucketsPaths = factory.getBucketsPaths();

|

String[] bucketsPaths = factory.getBucketsPaths();

|

||||||

@ -190,9 +190,9 @@ public class AggregatorFactories {

|

|||||||

if (bucketsPath.equals("_count") || bucketsPath.equals("_key") || aggFactoryNames.contains(firstAggName)) {

|

if (bucketsPath.equals("_count") || bucketsPath.equals("_key") || aggFactoryNames.contains(firstAggName)) {

|

||||||

continue;

|

continue;

|

||||||

} else {

|

} else {

|

||||||

ReducerFactory matchingFactory = reducerFactoriesMap.get(firstAggName);

|

PipelineAggregatorFactory matchingFactory = pipelineAggregatorFactoriesMap.get(firstAggName);

|

||||||

if (matchingFactory != null) {

|

if (matchingFactory != null) {

|

||||||

resolveReducerOrder(aggFactoryNames, reducerFactoriesMap, orderedReducers, unmarkedFactories,

|

resolvePipelineAggregatorOrder(aggFactoryNames, pipelineAggregatorFactoriesMap, orderedPipelineAggregators, unmarkedFactories,

|

||||||

temporarilyMarked, matchingFactory);

|

temporarilyMarked, matchingFactory);

|

||||||

} else {

|

} else {

|

||||||

throw new IllegalStateException("No aggregation found for path [" + bucketsPath + "]");

|

throw new IllegalStateException("No aggregation found for path [" + bucketsPath + "]");

|

||||||

@ -201,7 +201,7 @@ public class AggregatorFactories {

|

|||||||

}

|

}

|

||||||

unmarkedFactories.remove(factory);

|

unmarkedFactories.remove(factory);

|

||||||

temporarilyMarked.remove(factory);

|

temporarilyMarked.remove(factory);

|

||||||

orderedReducers.add(factory);

|

orderedPipelineAggregators.add(factory);

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||
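Note on the `AggregatorFactories` hunks above: the renamed `resolvePipelineAggregatorOrder` methods order pipeline aggregators so that each one runs after the pipeline aggregators it reads from, using a depth-first walk with a "temporarily marked" set to detect cycles. The following is a minimal standalone sketch of that idea, not the Elasticsearch implementation. It assumes plain `String` names in place of `PipelineAggregatorFactory` objects, and it assumes each buckets path has already been reduced to its first aggregation name (the path parsing falls in a part of the diff that is not shown). The class name `PipelineOrderSketch` and the method names `resolveOrder` and `visit` are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical standalone sketch; names and types are simplified assumptions.
final class PipelineOrderSketch {

    /**
     * Returns the pipeline aggregator names ordered so that every name appears
     * after the pipeline aggregators it depends on.
     *
     * @param dependenciesByName pipeline aggregator name -> first aggregation name of each buckets path
     * @param aggNames           names of the regular (non-pipeline) sibling aggregations
     */
    static List<String> resolveOrder(Map<String, List<String>> dependenciesByName, Set<String> aggNames) {
        List<String> ordered = new ArrayList<>();
        List<String> unmarked = new ArrayList<>(dependenciesByName.keySet());
        Set<String> temporarilyMarked = new HashSet<>();
        while (!unmarked.isEmpty()) {
            visit(unmarked.get(0), dependenciesByName, aggNames, ordered, unmarked, temporarilyMarked);
        }
        return ordered;
    }

    private static void visit(String name, Map<String, List<String>> dependenciesByName, Set<String> aggNames,
            List<String> ordered, List<String> unmarked, Set<String> temporarilyMarked) {
        if (temporarilyMarked.contains(name)) {
            // We came back to a node that is still being processed: a cycle.
            throw new IllegalStateException("Cyclical dependency found with pipeline aggregator [" + name + "]");
        } else if (unmarked.contains(name)) {
            temporarilyMarked.add(name);
            for (String dependency : dependenciesByName.get(name)) {
                if (dependency.equals("_count") || dependency.equals("_key") || aggNames.contains(dependency)) {
                    continue; // satisfied by a regular aggregation, so no ordering constraint
                }
                if (!dependenciesByName.containsKey(dependency)) {
                    throw new IllegalStateException("No aggregation found for path [" + dependency + "]");
                }
                visit(dependency, dependenciesByName, aggNames, ordered, unmarked, temporarilyMarked);
            }
            unmarked.remove(name);
            temporarilyMarked.remove(name);
            ordered.add(name); // all dependencies are already in the list
        }
        // Names that are neither unmarked nor temporarily marked were already ordered.
    }
}
```

For example, if a hypothetical `smoothed` aggregator reads from `deriv`, and `deriv` reads from a regular aggregation `sales`, the sketch places `deriv` before `smoothed`. Two aggregators that read from each other raise `IllegalStateException`.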

|

|||||||

@ -23,7 +23,7 @@ import org.apache.lucene.search.Scorer;

|

|||||||

import org.elasticsearch.common.lease.Releasables;

|

import org.elasticsearch.common.lease.Releasables;

|

||||||

import org.elasticsearch.common.util.BigArrays;

|

import org.elasticsearch.common.util.BigArrays;

|

||||||

import org.elasticsearch.common.util.ObjectArray;

|

import org.elasticsearch.common.util.ObjectArray;

|

||||||

import org.elasticsearch.search.aggregations.reducers.Reducer;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregator;

|

||||||

import org.elasticsearch.search.aggregations.support.AggregationContext;

|

import org.elasticsearch.search.aggregations.support.AggregationContext;

|

||||||

import org.elasticsearch.search.internal.SearchContext.Lifetime;

|

import org.elasticsearch.search.internal.SearchContext.Lifetime;

|

||||||

|

|

||||||

@ -86,7 +86,7 @@ public abstract class AggregatorFactory {

|

|||||||

}

|

}

|

||||||

|

|

||||||

protected abstract Aggregator createInternal(AggregationContext context, Aggregator parent, boolean collectsFromSingleBucket,

|

protected abstract Aggregator createInternal(AggregationContext context, Aggregator parent, boolean collectsFromSingleBucket,

|

||||||

List<Reducer> reducers, Map<String, Object> metaData) throws IOException;

|

List<PipelineAggregator> pipelineAggregators, Map<String, Object> metaData) throws IOException;

|

||||||

|

|

||||||

/**

|

/**

|

||||||

* Creates the aggregator

|

* Creates the aggregator

|

||||||

@ -99,7 +99,7 @@ public abstract class AggregatorFactory {

|

|||||||

* @return The created aggregator

|

* @return The created aggregator

|

||||||

*/

|

*/

|

||||||

public final Aggregator create(AggregationContext context, Aggregator parent, boolean collectsFromSingleBucket) throws IOException {

|

public final Aggregator create(AggregationContext context, Aggregator parent, boolean collectsFromSingleBucket) throws IOException {

|

||||||

return createInternal(context, parent, collectsFromSingleBucket, this.factories.createReducers(), this.metaData);

|

return createInternal(context, parent, collectsFromSingleBucket, this.factories.createPipelineAggregators(), this.metaData);

|

||||||

}

|

}

|

||||||

|

|

||||||

public void doValidate() {

|

public void doValidate() {

|

||||||

|

|||||||

@ -24,8 +24,8 @@ import org.elasticsearch.common.collect.MapBuilder;

|

|||||||

import org.elasticsearch.common.inject.Inject;

|

import org.elasticsearch.common.inject.Inject;

|

||||||

import org.elasticsearch.common.xcontent.XContentParser;

|

import org.elasticsearch.common.xcontent.XContentParser;

|

||||||

import org.elasticsearch.search.SearchParseException;

|

import org.elasticsearch.search.SearchParseException;

|

||||||

import org.elasticsearch.search.aggregations.reducers.Reducer;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregator;

|

||||||

import org.elasticsearch.search.aggregations.reducers.ReducerFactory;

|

import org.elasticsearch.search.aggregations.pipeline.PipelineAggregatorFactory;

|

||||||

import org.elasticsearch.search.internal.SearchContext;

|

import org.elasticsearch.search.internal.SearchContext;

|

||||||

|

|

||||||

import java.io.IOException;

|

import java.io.IOException;

|

||||||

@ -41,7 +41,7 @@ public class AggregatorParsers {

|

|||||||

|

|

||||||

public static final Pattern VALID_AGG_NAME = Pattern.compile("[^\\[\\]>]+");

|

public static final Pattern VALID_AGG_NAME = Pattern.compile("[^\\[\\]>]+");

|

||||||

private final ImmutableMap<String, Aggregator.Parser> aggParsers;

|

private final ImmutableMap<String, Aggregator.Parser> aggParsers;

|

||||||

private final ImmutableMap<String, Reducer.Parser> reducerParsers;

|

private final ImmutableMap<String, PipelineAggregator.Parser> pipelineAggregatorParsers;

|

||||||

|

|

||||||

|

|

||||||

/**

|

/**

|

||||||

@ -53,17 +53,17 @@ public class AggregatorParsers {

|

|||||||

* ).

|

* ).

|

||||||

*/

|

*/

|

||||||

@Inject

|

@Inject

|

||||||

public AggregatorParsers(Set<Aggregator.Parser> aggParsers, Set<Reducer.Parser> reducerParsers) {

|

public AggregatorParsers(Set<Aggregator.Parser> aggParsers, Set<PipelineAggregator.Parser> pipelineAggregatorParsers) {

|

||||||

MapBuilder<String, Aggregator.Parser> aggParsersBuilder = MapBuilder.newMapBuilder();

|

MapBuilder<String, Aggregator.Parser> aggParsersBuilder = MapBuilder.newMapBuilder();

|

||||||

for (Aggregator.Parser parser : aggParsers) {

|

for (Aggregator.Parser parser : aggParsers) {

|

||||||

aggParsersBuilder.put(parser.type(), parser);

|

aggParsersBuilder.put(parser.type(), parser);

|

||||||

}

|

}

|

||||||

this.aggParsers = aggParsersBuilder.immutableMap();

|

this.aggParsers = aggParsersBuilder.immutableMap();

|

||||||

MapBuilder<String, Reducer.Parser> reducerParsersBuilder = MapBuilder.newMapBuilder();

|

MapBuilder<String, PipelineAggregator.Parser> pipelineAggregatorParsersBuilder = MapBuilder.newMapBuilder();

|

||||||

for (Reducer.Parser parser : reducerParsers) {

|

for (PipelineAggregator.Parser parser : pipelineAggregatorParsers) {

|

||||||

reducerParsersBuilder.put(parser.type(), parser);

|

pipelineAggregatorParsersBuilder.put(parser.type(), parser);

|

||||||

}

|

}

|

||||||

this.reducerParsers = reducerParsersBuilder.immutableMap();

|

this.pipelineAggregatorParsers = pipelineAggregatorParsersBuilder.immutableMap();

|

||||||

}

|

}

|

||||||

|

|

||||||

/**

|

/**

|

||||||

@ -77,14 +77,15 @@ public class AggregatorParsers {

|

|||||||

}

|

}

|

||||||

|

|

||||||

/**

|

/**

|

||||||

* Returns the parser that is registered under the given reducer type.

|

* Returns the parser that is registered under the given pipeline aggregator

|

||||||

|

* type.

|

||||||

*

|

*

|

||||||

* @param type

|

* @param type

|

||||||

* The reducer type

|

* The pipeline aggregator type

|

||||||

* @return The parser associated with the given reducer type.

|

* @return The parser associated with the given pipeline aggregator type.

|

||||||

*/

|

*/

|

||||||

public Reducer.Parser reducer(String type) {

|

public PipelineAggregator.Parser pipelineAggregator(String type) {

|

||||||

return reducerParsers.get(type);

|

return pipelineAggregatorParsers.get(type);

|

||||||

}

|

}

|

||||||

|

|

||||||

/**

|

/**

|

||||||

@ -125,7 +126,7 @@ public class AggregatorParsers {

|

|||||||

}

|

}

|

||||||

|

|

||||||

AggregatorFactory aggFactory = null;

|

AggregatorFactory aggFactory = null;

|