
ChatGPT Web

Disclaimer: This project is only released on GitHub, under the MIT License, free and for open-source learning purposes. There will be no account selling, paid services, discussion groups, or forums. Beware of fraud.

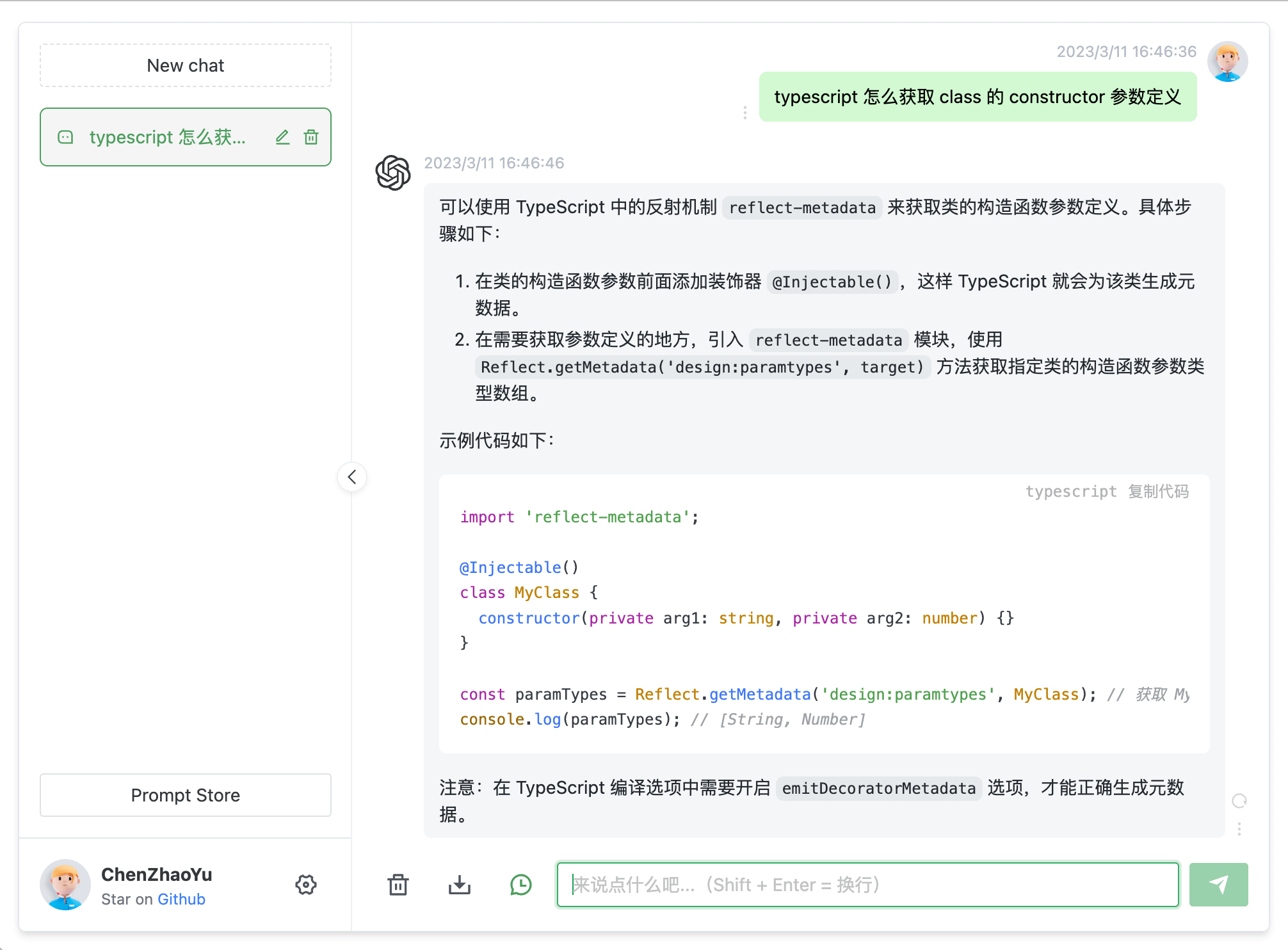

Introduction

Supports dual models, provides two unofficial ChatGPT API methods:

| Method | Free? | Reliability | Quality |
|---|---|---|---|
| `ChatGPTAPI(gpt-3.5-turbo-0301)` | No | Reliable | Relatively clumsy |
| `ChatGPTUnofficialProxyAPI(Web accessToken)` | Yes | Relatively unreliable | Smart |

Comparison:

- `ChatGPTAPI` uses `gpt-3.5-turbo-0301` to simulate `ChatGPT` through the official `OpenAI` completion `API` (the most reliable method, but it is not free and does not use models specifically tuned for chat).
- `ChatGPTUnofficialProxyAPI` accesses `ChatGPT`'s backend `API` via an unofficial proxy server to bypass `Cloudflare` (uses the real `ChatGPT`, is very lightweight, but depends on third-party servers and has rate limits).

Switching Methods:

- Go to the `service/.env.example` file and copy the contents to the `service/.env` file.
- For `OpenAI API Key`, fill in the `OPENAI_API_KEY` field (Get apiKey).
- For `Web API`, fill in the `OPENAI_ACCESS_TOKEN` field (Get accessToken).
- When both are present, `OpenAI API Key` takes precedence.
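The precedence rule above can be sketched as a small function. This is an illustration only: `pickMethod` and the `Env` type are hypothetical names, not part of the project, and the env object is passed in explicitly (in Node you would typically pass `process.env`).

```typescript
// Hypothetical helper, not taken from the project's source.
// It mirrors the rule stated above: the API key wins when both values are set.
type Method = "ChatGPTAPI" | "ChatGPTUnofficialProxyAPI";
type Env = Record<string, string | undefined>;

function pickMethod(env: Env): Method {
  const apiKey = (env.OPENAI_API_KEY || "").trim();
  const accessToken = (env.OPENAI_ACCESS_TOKEN || "").trim();

  // OPENAI_API_KEY takes precedence over OPENAI_ACCESS_TOKEN.
  if (apiKey !== "") return "ChatGPTAPI";
  if (accessToken !== "") return "ChatGPTUnofficialProxyAPI";

  throw new Error("Set OPENAI_API_KEY or OPENAI_ACCESS_TOKEN in service/.env");
}
```

Treating empty or whitespace-only values as unset is a design choice of this sketch, made because the `.env` template ships with blank fields.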

Reverse Proxy:

Available when using `ChatGPTUnofficialProxyAPI`. Details

# service/.env

API_REVERSE_PROXY=

Environment Variables:

For all parameter variables, check here or see:

/service/.env

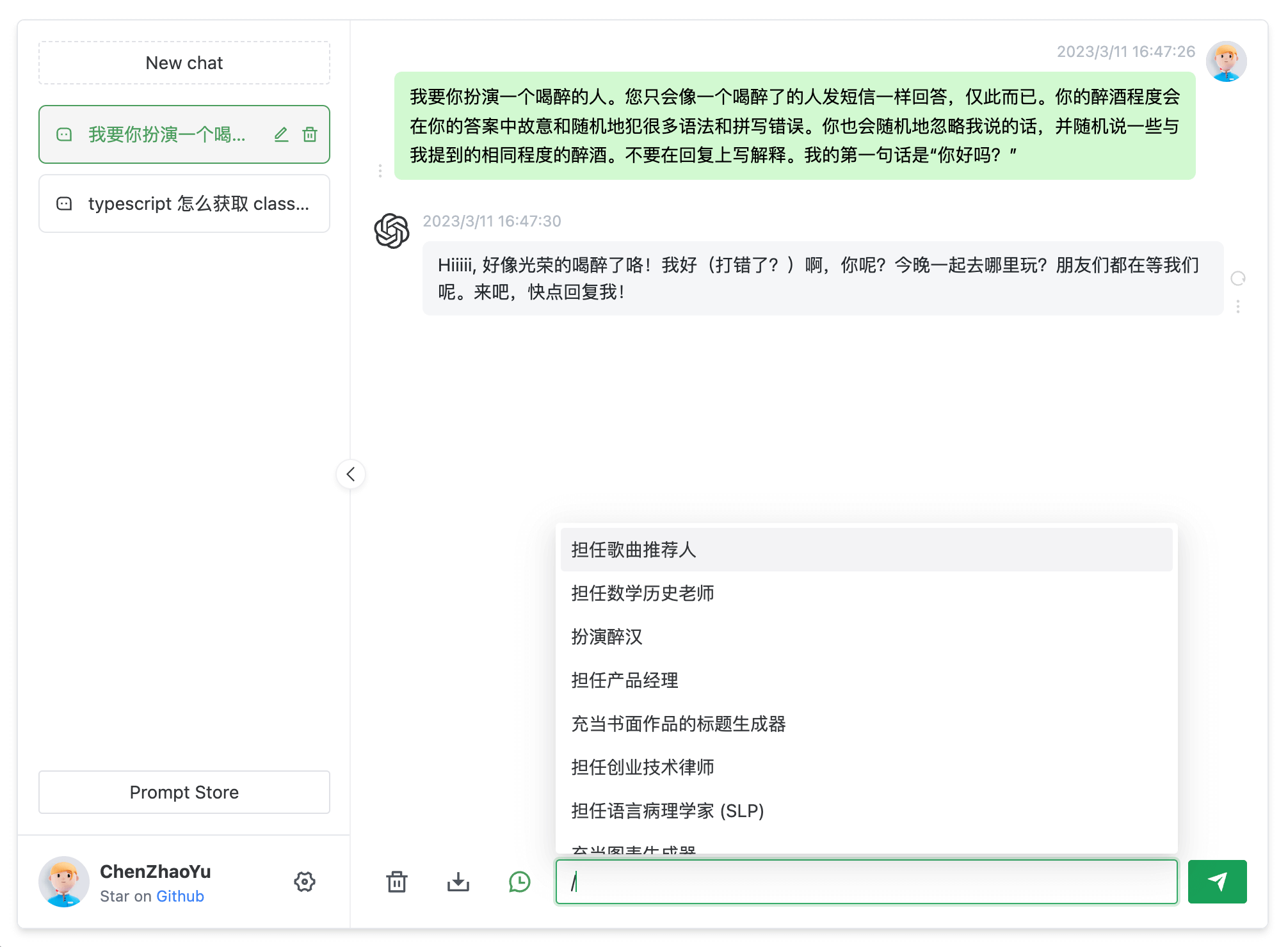

Roadmap

[✓] Dual models

[✓] Multiple session storage and context logic

[✓] Formatting and beautifying code-like message types

[✓] Access rights control

[✓] Data import and export

[✓] Save message to local image

[✓] Multilingual interface

[✓] Interface themes

[✗] More...

Prerequisites

Node

Node version `^16 || ^18` is required (node >= 14 also works if you install a fetch polyfill). Multiple local node versions can be managed with nvm.

node -v
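As a rough illustration of what the `^16 || ^18` range means, the sketch below checks a version string such as the output of `node -v`. `isSupportedNode` is a hypothetical helper written for this example; it only looks at the major version and does not cover the polyfill case for older releases.

```typescript
// Hypothetical helper: checks a `node -v` style string against ^16 || ^18.
// A caret range on a major version >= 1 allows any minor/patch within that major.
function isSupportedNode(version: string): boolean {
  const match = /^v?(\d+)\.\d+\.\d+/.exec(version.trim());
  if (!match) return false; // not a recognizable version string

  const major = Number(match[1]);
  return major === 16 || major === 18;
}
```

For example, `isSupportedNode("v18.16.0")` is accepted while `isSupportedNode("v17.9.1")` is rejected, because 17 falls between the two allowed majors.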

PNPM

If you have not installed pnpm before:

npm install pnpm -g

Fill in the Keys

Get an `OpenAI API Key` or `accessToken` and fill in the local environment variables.

# service/.env file

# OpenAI API Key - https://platform.openai.com/overview

OPENAI_API_KEY=

# change this to an `accessToken` extracted from the ChatGPT site's `https://chat.openai.com/api/auth/session` response

OPENAI_ACCESS_TOKEN=

Install Dependencies

To make it easier for `backend developers` to understand, we did not use the front-end `workspace` mode; the front end and the back end are kept in separate folders instead. If you only need to customize the front-end page, delete the `service` folder.

Backend

Enter the /service folder and run the following command

pnpm install

Frontend

Run the following command in the root directory

pnpm bootstrap

Run in Test Environment

Backend Service

Enter the /service folder and run the following command

pnpm start

Frontend Webpage

Run the following command in the root directory

pnpm dev

Packaging

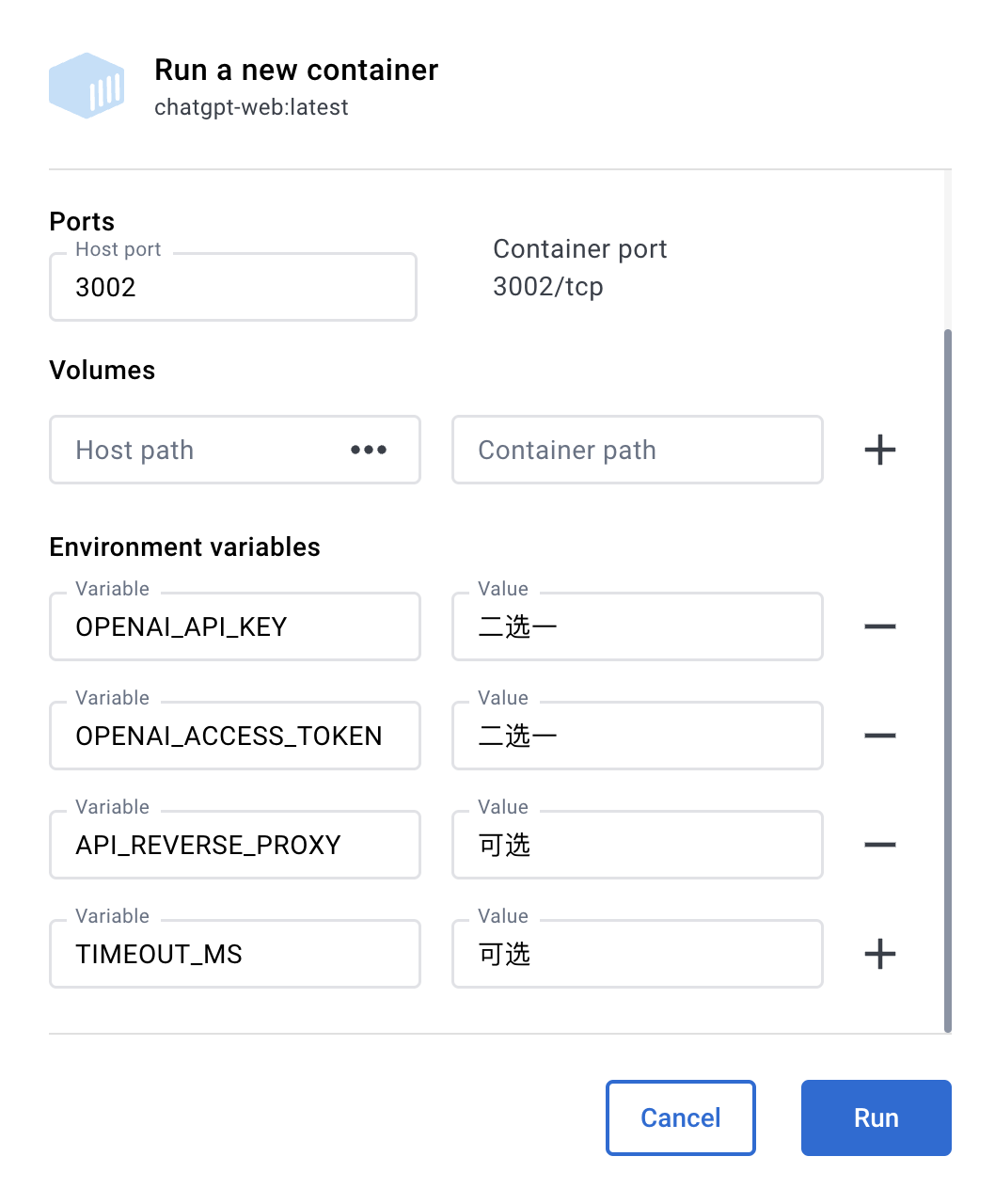

Using Docker

Docker Parameter Example

- `OPENAI_API_KEY`: one of two
- `OPENAI_ACCESS_TOKEN`: one of two; `OPENAI_API_KEY` takes precedence when both are present
- `OPENAI_API_BASE_URL`: optional, available when `OPENAI_API_KEY` is set
- `OPENAI_API_MODEL`: optional, available when `OPENAI_API_KEY` is set
- `API_REVERSE_PROXY`: optional, available when `OPENAI_ACCESS_TOKEN` is set (Reference)
- `AUTH_SECRET_KEY`: access password, optional
- `TIMEOUT_MS`: timeout in milliseconds, optional
- `SOCKS_PROXY_HOST`: optional, effective with `SOCKS_PROXY_PORT`
- `SOCKS_PROXY_PORT`: optional, effective with `SOCKS_PROXY_HOST`
- `HTTPS_PROXY`: optional, supports http, https, socks5
- `ALL_PROXY`: optional, supports http, https, socks5
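The constraints in the parameter list above can be expressed as a small pre-flight check. This is a sketch under assumptions: `validateEnv` is a hypothetical function that is not part of the project, and requiring `TIMEOUT_MS` to be a positive integer is this example's own interpretation of "timeout, in milliseconds".

```typescript
// Hypothetical pre-flight check, not taken from the project's source.
type Env = Record<string, string | undefined>;

function validateEnv(env: Env): string[] {
  const problems: string[] = [];
  // A variable counts as "set" only if it is non-empty after trimming.
  const has = (name: string): boolean => (env[name] || "").trim() !== "";

  // One of the two credentials is required.
  if (!has("OPENAI_API_KEY") && !has("OPENAI_ACCESS_TOKEN"))
    problems.push("set OPENAI_API_KEY or OPENAI_ACCESS_TOKEN");

  // The SOCKS host and port are only effective as a pair.
  if (has("SOCKS_PROXY_HOST") !== has("SOCKS_PROXY_PORT"))
    problems.push("SOCKS_PROXY_HOST and SOCKS_PROXY_PORT only take effect together");

  // Assumption of this sketch: the timeout must be a positive whole number.
  if (has("TIMEOUT_MS")) {
    const ms = Number(env.TIMEOUT_MS);
    if (!isFinite(ms) || Math.floor(ms) !== ms || ms <= 0)
      problems.push("TIMEOUT_MS must be a positive integer (milliseconds)");
  }

  return problems;
}
```

An empty result means none of these checks failed. Returning a list instead of throwing lets a caller report every misconfiguration at once before starting the container.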

Docker Build & Run

docker build -t chatgpt-web .

# foreground operation

docker run --name chatgpt-web --rm -it -p 127.0.0.1:3002:3002 --env OPENAI_API_KEY=your_api_key chatgpt-web

# background operation

docker run --name chatgpt-web -d -p 127.0.0.1:3002:3002 --env OPENAI_API_KEY=your_api_key chatgpt-web

# running address

http://localhost:3002/

Docker Compose

version: '3'

services:
  app:
    image: chenzhaoyu94/chatgpt-web # always use latest, pull the tag image again when updating
    ports:
      - 127.0.0.1:3002:3002
    environment:
      # one of two
      OPENAI_API_KEY: xxxxxx
      # one of two
      OPENAI_ACCESS_TOKEN: xxxxxx
      # api interface url, optional, available when OPENAI_API_KEY is set
      OPENAI_API_BASE_URL: xxxx
      # api model, optional, available when OPENAI_API_KEY is set
      OPENAI_API_MODEL: xxxx
      # reverse proxy, optional
      API_REVERSE_PROXY: xxx
      # access password, optional
      AUTH_SECRET_KEY: xxx
      # timeout, in milliseconds, optional
      TIMEOUT_MS: 60000
      # socks proxy, optional, effective with SOCKS_PROXY_PORT
      SOCKS_PROXY_HOST: xxxx
      # socks proxy port, optional, effective with SOCKS_PROXY_HOST
      SOCKS_PROXY_PORT: xxxx
      # HTTPS proxy, optional, supports http, https, socks5
      HTTPS_PROXY: http://xxx:7890

The OPENAI_API_BASE_URL is optional and only used when setting the OPENAI_API_KEY.

The OPENAI_API_MODEL is optional and only used when setting the OPENAI_API_KEY.

Deployment with Railway

Railway Environment Variables

| Environment Variable | Required | Description |
|---|---|---|
| `PORT` | Required | Default: 3002 |
| `AUTH_SECRET_KEY` | Optional | Access password |
| `TIMEOUT_MS` | Optional | Timeout in milliseconds |
| `OPENAI_API_KEY` | Optional | Required for OpenAI API. apiKey can be obtained from here. |
| `OPENAI_ACCESS_TOKEN` | Optional | Required for Web API. accessToken can be obtained from here. |
| `OPENAI_API_BASE_URL` | Optional, only for OpenAI API | API endpoint. |
| `OPENAI_API_MODEL` | Optional, only for OpenAI API | API model. |
| `API_REVERSE_PROXY` | Optional, only for Web API | Reverse proxy address for Web API. Details |
| `SOCKS_PROXY_HOST` | Optional, effective with `SOCKS_PROXY_PORT` | Socks proxy. |
| `SOCKS_PROXY_PORT` | Optional, effective with `SOCKS_PROXY_HOST` | Socks proxy port. |
| `HTTPS_PROXY` | Optional | HTTPS Proxy. |
| `ALL_PROXY` | Optional | ALL Proxy. |

Note: Changing environment variables in Railway will cause re-deployment.

Manual packaging

Backend service

If you don't need the `node` interface of this project, you can skip the following steps.

Copy the service folder to a server that has a node service environment.

# Install

pnpm install

# Build

pnpm build

# Run

pnpm prod

PS: You can also run pnpm start directly on the server without packaging.

Frontend webpage

- Create a `.env` file in the root directory based on the root `.env.example` file, then set `VITE_GLOB_API_URL` in that `.env` to your actual backend interface address.
- Run the following command in the root directory, then copy the files in the `dist` folder to the root directory of your website service.

pnpm build

Frequently Asked Questions

Q: Why does Git always report an error when committing?

A: Commit messages are validated on commit. Please follow the Commit Guidelines.

Q: Where to change the request interface if only the frontend page is used?

A: The VITE_GLOB_API_URL field in the .env file at the root directory.

Q: Why is everything highlighted in red when I save a file?

A: For VS Code, please install the project's recommended plug-ins or manually install the ESLint plug-in.

Q: Why doesn't the frontend have a typewriter effect?

A: One possible reason is that buffering is turned on in the Nginx reverse proxy, so Nginx tries to buffer a certain amount of data from the backend before sending it to the browser. Try adding `proxy_buffering off;` after the reverse proxy parameter and then reloading Nginx. Other web server configurations are similar.

Q: The content returned is incomplete?

A: The API limits the length of the content it returns per request. To enable the long reply feature, which can return the full content, set the `VITE_GLOB_OPEN_LONG_REPLY` field to `true` in the `.env` file in the root directory and rebuild the front end. Note that using this feature may incur higher API usage fees.

Contributing

Please read the Contributing Guidelines before contributing.

Thanks to all the contributors!

Sponsorship

If you find this project helpful and circumstances permit, you can give me a little support. Thank you very much for your support~

WeChat Pay

Alipay

License

MIT © ChenZhaoYu